Technology

The best Black Friday deals on gaming laptops


You’re not just looking for a workhorse. You want a laptop that’s got game. Maybe that means high frame rates, maybe even a high-refresh-rate display for better responsiveness in less demanding games. But above all, you want bang for the buck. That’s why you’re shopping right now, during Black Friday week, when some of the best deals (and a bunch of predatory fake ones) show up.

These are the deals that caught my eye: the ones I’d consider myself or recommend to a friend, depending on their needs and budget. Let’s start with the least expensive and go from there!

Update November 23rd: Added several more deals.

Technology

Gym teacher accused of using AI voice clone to try to get a high school principal fired


A physical education teacher and former athletic director of a Baltimore County high school has been arrested and charged with using an AI voice cloning service to frame the school’s principal.

The Baltimore Banner reports that Baltimore County police believe a recording which circulated through social media in January with purported audio of Pikesville High School principal Eric Eiswert making racist and antisemitic comments was fake. Experts told The Baltimore Banner and police that the recording, which briefly resulted in Eiswert’s suspension, has a “flat tone, unusually clean background sounds, and lack of consistent breathing sounds or pauses.”

Baltimore County police traced the recording to Dazhon Darien, a former athletic director at the school whose name was also mentioned in the audio clip. He allegedly accessed school computers “to access OpenAI tools and Microsoft Bing Chat services” as reported by WBAL 11 and NBC News. He was also linked to the audio’s release via an email address and associated recovery phone number.

It is not clear what AI voice platform Darien allegedly used.

The police arrested Darien on Thursday at the airport, and said in a statement “It’s believed Mr. Darien, who was an Athletic Director at Pikesville High School, made the recording to retaliate against Mr. Eiswert who at the time was pursuing an investigation into the potential mishandling of school funds.” He has been released after posting bail, and faces charges including theft (for the issue with school funds), disturbing the operations of a school, retaliation against a witness, and stalking.

In this fraught environment, OpenAI decided in March to withhold its AI text-to-voice generation platform, Voice Engine, from public use. The service, which only requires a 15-second audio clip to clone someone’s voice, is available only to a limited number of researchers due to the lack of guardrails around the technology.

US lawmakers have filed, but not yet passed, several bills like the No Fakes Act and the No AI Fraud Act that seek to prevent technology companies from using an individual’s face, voice, or name without their permission.

Update April 25th: Clarified that Pikesville High School is in the city of Pikesville in Baltimore County, MD, added details about Darien and Thursday’s arrest.

Technology

Sony’s PlayStation Portal handheld is back in stock at multiple retailers


The Portal isn’t the Nintendo Switch or Steam Deck killer some thought it might be, but it remains a handy tool for gamers who can’t play on their primary TV or just want the ability to enjoy games throughout their home. If you’re not already caught up on what it is, it’s essentially an eight-inch 1080p LCD display sandwiched between two halves of a standard DualSense controller, meaning it features adaptive triggers, haptic feedback, and all the perks of Sony’s latest gamepad. Its main (and only) purpose is to stream games via Remote Play, which requires a PS5 and a reliable Wi-Fi network.

We love what the Portal enables, but some curious technical choices can get in the way of your fun. For example, since wireless audio relies on Sony’s proprietary Link protocol, you can only use the Pulse Explore earbuds and Pulse Elite headset with it (sorry, no Bluetooth earbuds allowed). Thankfully, it has a 3.5mm audio jack for using a pair of wired headphones, assuming you still have a pair lying around.

Additionally, you may run into trouble if your network performance isn’t strong. The latency can make the experience utterly unenjoyable, and we certainly wouldn’t recommend trying multiplayer games or fighting titles where precision is paramount. That said, we’ve found it works much better when the console is connected via Ethernet, which is almost mandatory for stable gameplay outside the home. We’re hoping Sony can eventually improve on these pain points with future software updates, but even with the aforementioned caveats, the Portal remains the best all-in-one solution for streaming games via Remote Play.

Technology

11 insider tricks for the tech you use every day

If you’re the person skipping updates on your devices … knock that off. You’re missing out on important security enhancements, like the better Stolen Device Protection in iOS 17.4 and Android’s new Find My feature for locating your lost phone.


It’s nearly impossible to keep up with every update and added feature. That’s what I’m here for. I hope you find a tech tip below that makes life better for you!

Shop open-box deals


Most sites use grades or ratings, so you know the condition. For example, a “Grade A” smartphone has just a bit of wear. Amazon open-box products are always fully functional and in one of four conditions: “Used/Like New,” “Very Good,” “Good” or “Acceptable.” Here’s a link to Amazon’s open-box deals.

Know what apps are listening

Buried within all the legal mumbo jumbo you said “yes” to when downloading an app, you may have given the app permission to listen using your phone’s microphone and collect data.


- Have an iPhone? Open Settings > Privacy & Security > Microphone. Disable apps you don’t want picking up on your conversations.

- On Android, go to Settings > Apps Permission Manager. Disable the microphone for any apps you don’t want eavesdropping.

Your Google Doc holds secrets you shouldn’t share

Anyone accessing the original doc can review all your edits, changes and versions. Share a copy instead: hit File > Make a copy. It’ll be called “Copy of” plus your original file name by default. Rename it, then share that. Pro tip: Reverse this idea to see someone else’s edits and changes.

Make your iPad more useful


This is a pro move if you spend time with your Apple tablet on the couch. Go to Settings > General > Keyboard, then enable Split Keyboard. Long-press the keyboard key at the bottom right, slide your finger to Split, then release. Now you can type with your thumbs! To return to normal, long-press the keyboard key, slide your finger to Merge and release.

Don’t say I didn’t warn you

Take off your phone’s case and you’ll see all the grime collected inside. Gross. For plastic, rubber and silicone cases, use an old toothbrush and a bit of warm, soapy water. For leather cases, very lightly dampen a microfiber cloth with water and mild soap.

Stay safe on the road

Get the free NHTSA SaferCar app. Enter your car’s VIN and receive automatic alerts about recalls. There have been a lot lately, and it’s easy to miss notifications from your dealership.

For your eyes only

If you have sensitive pics like your driver’s license on your phone, set up a locked folder in Google Photos. Open the Google Photos app > Utilities > Set up Locked Folder. Follow the on-screen directions to finish up. Note: Anything stored there isn’t backed up to the cloud. Wouldn’t be very private that way.

Have an iPhone?


You can store secret pics in the Notes app. Open the pic in the Photos app, tap the share icon and select Notes. Go into the note you want to protect, tap the three-dot icon in the upper right corner, then choose Lock.

You made a bad call

And streamed a terrible rom-com. Get it off your Netflix history so it doesn’t influence your future suggestions. On a computer, click your profile, then Viewing activity. By each show or movie, you’ll see a small icon of a circle with a line through it. Click on that to hide it.


I’d rather be safe than sorry

For every study that shows your phone is perfectly safe, there’s another about the impacts of even low-level radiation. I rarely bring my phone to my head or put it in my pocket. AirPods are my favorite way to take a call (I’m an iPhone gal). Go with AirPods Pro if you can. On an Android, here’s a budget earbuds option and the fancy ones. Men, don’t store your phones in your pants pockets. It can hurt your fertility.

Too many tabs and too much noise?

Right-click on a tab in your browser and select Mute Tab or Mute Site. In some browsers, you can also click the speaker icon on a tab playing noise to stop it.

Get tech-smarter on your schedule

Award-winning host Kim Komando is your secret weapon for navigating tech.

Copyright 2024, WestStar Multimedia Entertainment. All rights reserved.

-

World1 week ago

World1 week agoIf not Ursula, then who? Seven in the wings for Commission top job

-

News1 week ago

News1 week agoGOP senators demand full trial in Mayorkas impeachment

-

Movie Reviews1 week ago

Movie Reviews1 week agoMovie Review: The American Society of Magical Negroes

-

Movie Reviews1 week ago

Movie Reviews1 week agoFilm Review: Season of Terror (1969) by Koji Wakamatsu

-

Movie Reviews1 week ago

Movie Reviews1 week agoShort Film Review: For the Damaged Right Eye (1968) by Toshio Matsumoto

-

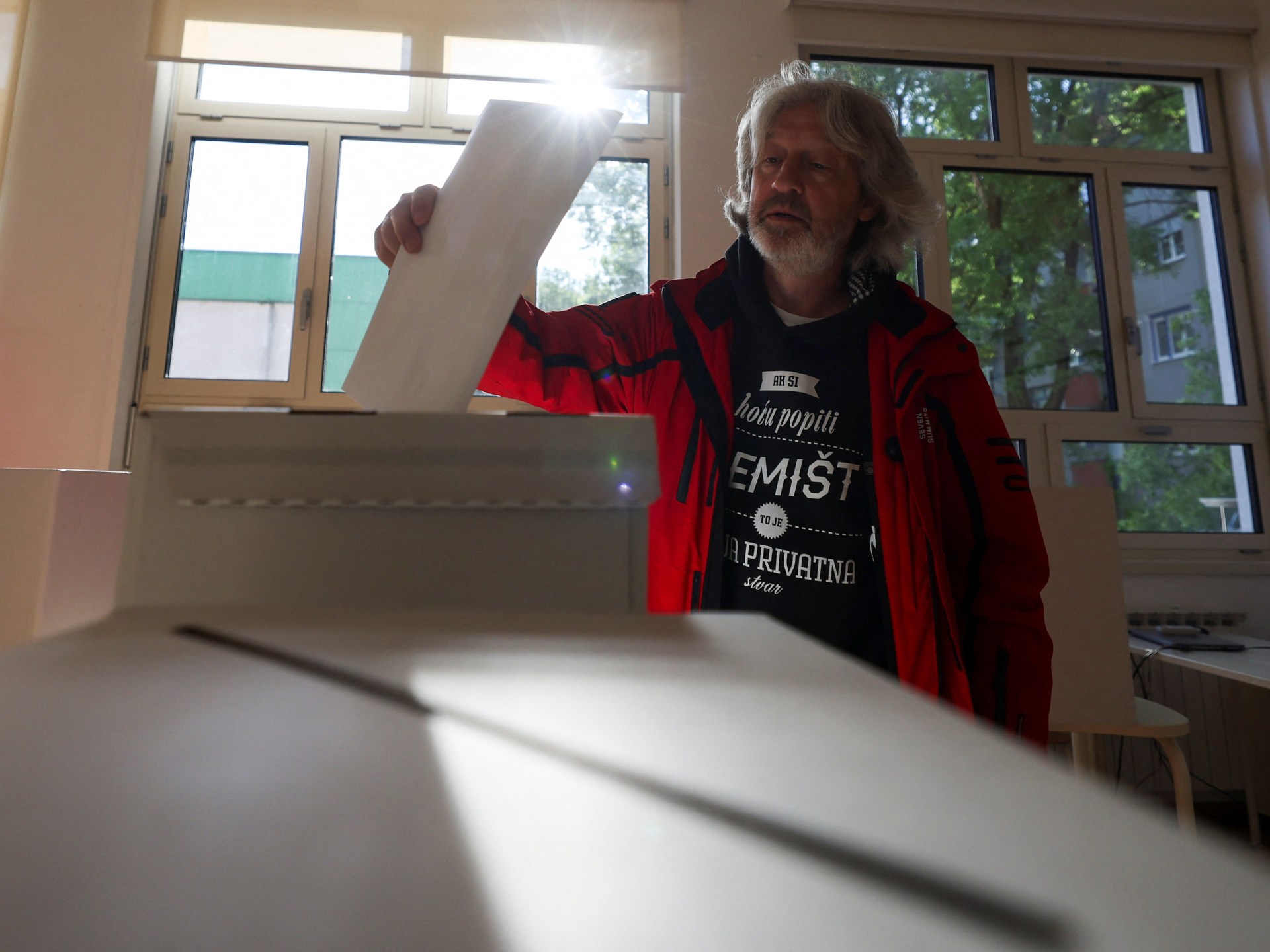

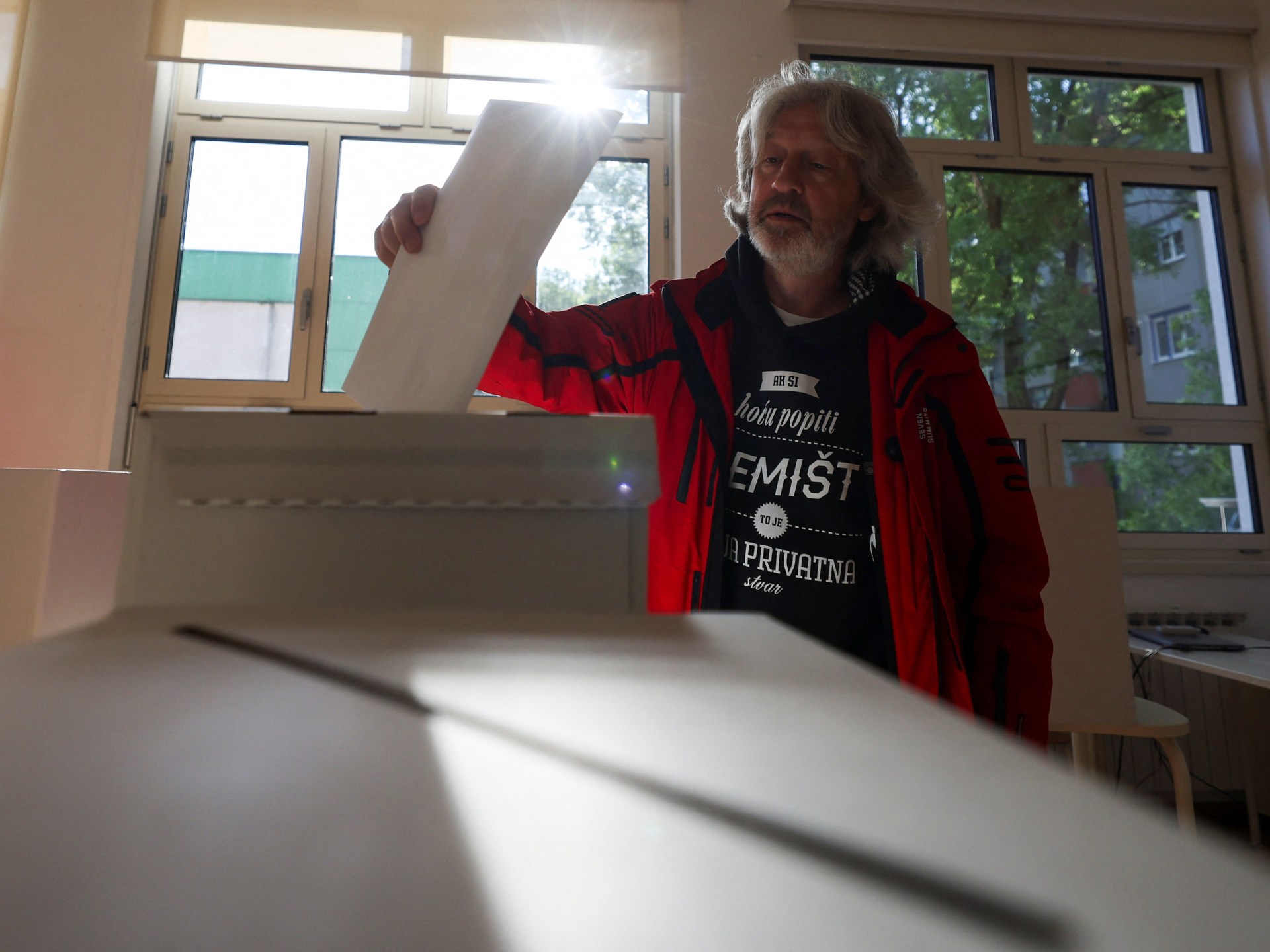

World1 week ago

World1 week agoCroatians vote in election pitting the PM against the country’s president

-

World1 week ago

World1 week ago'You are a criminal!' Heckler blasts von der Leyen's stance on Israel

-

Politics1 week ago

Politics1 week agoTrump trial: Jury selection to resume in New York City for 3rd day in former president's trial