Science

Why the spread of organic farms may prompt growers to use more pesticide, not less

To help California fight climate change, air quality regulators would like to see 20% of the state’s farmland go organic by 2045. That means converting about 65,000 acres of conventional fields to organic practices every year.

But depending on how that transition happens, the change could lead to an overall increase in the amount of pesticide used by growers throughout the state.

So suggests a new study in the journal Science that examined how organic farms influence the behavior of their neighbors. Researchers found that when new organic fields come online, the insects that come with them may prompt conventional growers to boost their pesticide use by an amount large enough to offset the reduction in organic fields — and then some.

“We expect an increase in organic in the future,” said study leader Ashley Larsen, a professor of agricultural and landscape ecology at UC Santa Barbara. “How do we make sure this is not causing unintended harm?”

Organic farming practices help fight climate change by producing healthier soil that can hold on to more carbon and by eschewing synthetic nitrogen fertilizers, which fuel greenhouse gas emissions. Organic methods are also more sustainable for a warming world because they help the soil hold more water, among other benefits.

For their study, Larsen and her colleagues took a deep dive into the farming practices of California’s Kern County, where growers regularly produce more than $7 billion worth of grapes, citrus, almonds, pistachios and other crops. Thanks to the county and the state, there are detailed records going back for years about just how they do it.

The researchers examined about 14,000 individual fields between 2013 and 2019. They were able to see the shapes and locations of these fields, as well as whether they were growing conventional or organic crops and how much pesticide was used.

Indeed, a key difference between conventional and organic agriculture is their approach to dealing with unwanted pests. Traditional farms may deploy toxic chemicals like organophosphates and organochlorines, while organic farms prefer to keep damaging bugs in check by encouraging the growth of their natural enemies, including particular beetles, spiders and birds. They can also use certain pesticides, which typically are made with natural instead of synthetic ingredients.

These contrasting strategies make for complicated neighbors. If destructive critters migrate from an organic farm to a conventional one, a grower may respond by using more pesticide. That, in turn, would undermine the helpful creatures organic growers rely upon. On the other hand, organic farms nurture beneficial insects that migrate to other fields.

“Organic farms can be both a blessing and a curse if they’re your neighbor,” said David Haviland, an entomologist with the University of California’s integrative pest management program in Bakersfield, who was not involved in the study.

By 2019, about 7.5% of permitted fields in Kern County were used to grow organic products. They were distributed throughout the county’s growing areas, though many were grouped into clusters.

An aerial view of farmland and orchards near Maricopa at the southern end of the San Joaquin Valley in Kern County.

(Al Seib / Los Angeles Times)

With their data in hand, the researchers created a statistical model to see if they could find a relationship between pesticide use in a given field and the presence of organic fields nearby.

In the case of organic fields, they found that a 10% increase in neighboring organic cropland was associated with a 3% decline in pesticide use. For conventional fields, the same 10% bump in organic neighbors came with a 0.3% rise in pesticide use.

Since conventional fields outnumbered organic ones by a wide margin, the net effect in Kern County was a 0.2% increase in pesticide use. Most of that was driven by added insecticides rather than chemicals that targeted invasive weeds or damaging fungi, Larsen said.
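The arithmetic behind that net figure can be sketched as a weighted average. The snippet below is only an illustration, not the study's statistical model: the function name `net_change` and the 3% share of baseline pesticide use assigned to organic fields are hypothetical, chosen so the result lands near the reported figure.

```python
def net_change(organic_share, organic_pct, conventional_pct):
    """Weighted average of the percentage changes for each field type.

    organic_share is the assumed fraction of baseline pesticide use
    that occurs on organic fields (hypothetical, not from the study).
    """
    return organic_share * organic_pct + (1 - organic_share) * conventional_pct


# Reported responses to a 10% increase in neighboring organic cropland:
# organic fields use 3% less, conventional fields use 0.3% more.
result = net_change(organic_share=0.03, organic_pct=-3.0, conventional_pct=0.3)
print(f"Net change in pesticide use: {result:+.2f}%")
```

Under that assumed weighting, the small rise on the many conventional fields outweighs the larger drop on the few organic ones, and the total comes out to roughly a 0.2% increase.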

“We think it basically comes down to a different reliance on natural pest-control methods,” she said. More bugs are bad for conventional farmers because for them it means more unwanted insects, she explained. But more bugs are good for organic farmers because it means having more natural enemies of those same pests.

The researchers also used their model to simulate different possible farming futures to see if this overall increase in pesticide use could be avoided. The answer, they found, was yes.

One way was to expand the amount of land farmed organically. In their model, going from no organic fields at all to 5% of cropland being organic was associated with a 9% hike in insecticide use in Kern County. However, if 20% of agricultural land held organic crops — as the California Air Resources Board envisions — total insecticide use fell by 17%.

Those figures were based on a simulation in which organic fields were spread out, maximizing the pest-control border skirmishes between organic and conventional fields. In a scenario where organic fields were clustered together instead, increasing their combined footprint from 0% to 5% of total acreage was associated with a 10% cut in insecticide use, and going all the way to 20% of total acreage was linked with a 36% drop in the chemicals, the researchers reported.

“What we basically see in the simulation is that while there could be an increase in insecticide use at low levels of organic, it can be entirely mitigated by spatially clustering organic croplands,” Larsen said.

Making that happen in a simulation is one thing; doing it in the real world is another. An organic almond farmer whose orchard abuts a conventional one can’t easily dig up his mature trees and replant them somewhere else. But as farmers switch more of their conventional fields to organic, these study results could help them decide where to focus their efforts to get the biggest payoff, Larsen said.

Likewise, policymakers might identify certain areas where they’d like to see organic crops and offer incentives to encourage growers to make the leap. In principle, it would be similar to the grants offered by the California Department of Food and Agriculture’s Healthy Soils Program, she said.

Erik Lichtenberg, an agricultural economist at the University of Maryland, said the study made “a convincing case” that organic farms affect their neighbors, but it would be important to know a lot more specifics before concluding that it’s a good idea to segregate organic and conventional farms.

Among other things, “I would want to know more about why the fields are located the way they are, what you plant where, and how that relates to the pest-management strategies the growers are following,” said Lichtenberg, who wrote a commentary that accompanies the study.

Haviland said the idea of clustering organic farms makes sense in general because it reduces the edges between organic and conventional fields. However, he noted that there are instances where clustering could make things worse.

Consider the glassy-winged sharpshooter, which spreads a disease that kills grapevines. Conventional farmers have tools at their disposal to control them, but organic growers do not. When organic grapevines are more isolated, the chances that an insect flies away from the field and “doesn’t come home” are greater because it will encounter a pesticide nearby, Haviland said. But if all the organic fields were clustered together, they’d be “drastically increasing their own problem by not benefiting from conventional growers around them.”

Haviland also emphasized that “there’s a misconception among the general public that all pesticides are created equal and they’re all bad, and that’s definitely not true.” Reducing total pesticide use is valuable, but it’s more important to consider the types of pesticides being used, he said.

The statistical analysis alone doesn’t prove that the addition of organic fields is responsible for the change in pesticide use, but Larsen said the circumstantial evidence for a causal relationship is compelling. The conventional fields that acquired an organic neighbor used to have the same pattern of pesticide use as other conventional fields, and they started to diverge only after the nearby field switched to organic.

“This is pretty strong evidence, in our minds,” she said.

Milt McGiffen, a cooperative extension specialist with the Department of Botany and Plant Sciences at UC Riverside, was less sure. He said growers make a point of planting organic crops in places where they know pest control won’t be a big problem since they can’t use conventional pesticides.

“Mostly why you have a group of organic farms together is because that’s where you have the fewest pests, not the other way around,” said McGiffen, who wasn’t involved in the study.

He said there are many examples of governments trying to accelerate the transition to organic food production, but he is not aware of any effort to encourage growers to locate organic fields in specific places.

“This study has interesting ideas,” McGiffen said, but “some experimentalist needs to go out there and test all this.”

Science

Canny as a crocodile but dumber than a baboon — new research ponders T. rex's brain power

In December 2022, Vanderbilt University neuroscientist Suzana Herculano-Houzel published a paper that caused an uproar in the dinosaur world.

After analyzing previous research on fossilized dinosaur brain cavities and the neuron counts of birds and other related living animals, Herculano-Houzel extrapolated that the fearsome Tyrannosaurus rex may have had more than 3 billion neurons — more than a baboon.

As a result, she argued, the predators could have been smart enough to make and use tools and to form social cultures akin to those seen in present-day primates.

The original “Jurassic Park” film spooked audiences by imagining velociraptors smart enough to open doors. Herculano-Houzel’s paper described T. rex as essentially wily enough to sharpen their own shivs. The bold claims made headlines, and almost immediately attracted scrutiny and skepticism from paleontologists.

In a paper published Monday in “The Anatomical Record,” an international team of paleontologists, neuroscientists and behavioral scientists argue that Herculano-Houzel’s assumptions about brain cavity size and corresponding neuron counts were off-base.

True T. rex intelligence, the scientists say, was probably much closer to that of modern-day crocodiles than to that of primates, a perfectly respectable amount of smarts for a theropod to have.

“What needs to be emphasized is that reptiles are certainly not as dim-witted as is commonly believed,” said Kai Caspar, a biologist at Heinrich Heine University Düsseldorf and co-author of the paper. “So whereas there is no reason to assume that T. rex had primate-like habits, it was certainly a behaviorally sophisticated animal.”

Brain tissue doesn’t fossilize, and so researchers examine the shape and size of the brain cavity in fossilized dinosaur skulls to deduce what their brains may have been like.

In their analysis, the authors took issue with Herculano-Houzel’s assumption that dinosaur brains filled their skull cavities in a proportion similar to bird brains. Herculano-Houzel’s analysis posited that T. rex brains occupied most of their brain cavity, analogous to that of the modern-day ostrich.

But dinosaur brain cases more closely resemble those of modern-day reptiles like crocodiles, Caspar said. For animals like crocodiles, brain matter occupies only 30% to 50% of the brain cavity. Though brain size isn’t a perfect predictor of neuron numbers, a much smaller organ would have far fewer than the 3 billion neurons Herculano-Houzel projected.

“T. rex does come out as the biggest-brained big dinosaur we studied, and the biggest one not closely related to modern birds, but we couldn’t find the 2 to 3 billion neurons she found, even under our most generous estimates,” said co-author Thomas R. Holtz, Jr., a vertebrate paleontologist at the University of Maryland, College Park.

What’s more, the research team argued, neuron counts aren’t an ideal indicator of an animal’s intelligence.

Giraffes have roughly the same number of neurons that crows and baboons have, Holtz pointed out, but they don’t use tools or display complex social behavior in the way those species do.

“Obviously in broad strokes you need more neurons to create more thoughts and memories and to solve problems,” Holtz said, but the sheer number of neurons an animal has can’t tell us how the animal will use them.

“Neuronal counts really are comparable to the storage capacity and active memory on your laptop, but cognition and behavior is more like the operating system,” he said. “Not all animal brains are running the same software.”

Based on CT scan reconstructions, the T. rex brain was probably “a long tube that has very little in terms of the cortical expansion that you see in a primate or a modern bird,” said paleontologist Luis Chiappe, director of the Dinosaur Institute at the Natural History Museum of Los Angeles County.

“The argument that a T. rex would have been as intelligent as a primate — no. That makes no sense to me,” said Chiappe, who was not involved in the study.

Like many paleontologists, Chiappe and his colleagues at the Dinosaur Institute were skeptical of Herculano-Houzel’s original conclusions. The new paper is more consistent with previous understandings of dinosaur anatomy and intelligence, he said.

“I am delighted to see that my simple study using solid data published by paleontologists opened the way for new studies,” Herculano-Houzel said in an email. “Readers should analyze the evidence and draw their own conclusions. That’s what science is about!”

When thinking about the inner life of T. rex, the most important takeaway is that reptilian intelligence is in fact more sophisticated than our species often assumes, scientists said.

“These animals engage in play, are capable of being trained, and even show excitement when they see their owners,” Holtz said. “What we found doesn’t mean that T. rex was a mindless automaton; but neither was it going to organize a Triceratops rodeo or pass down stories of the duckbill that was THAT BIG but got away.”

Science

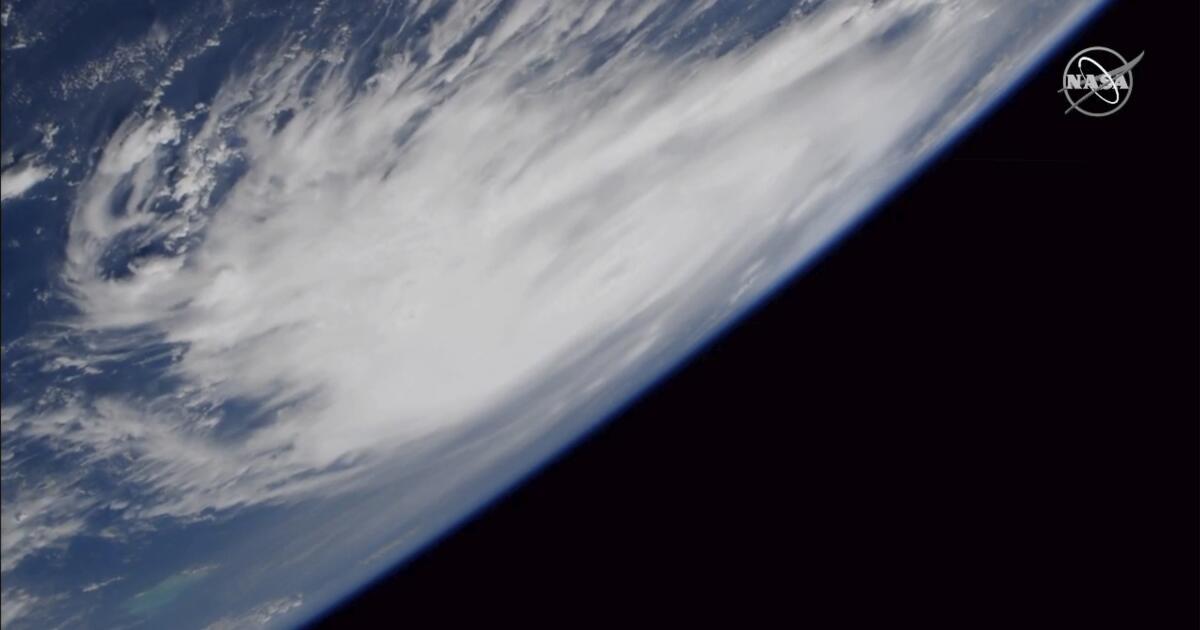

You're gonna need a bigger number: Scientists consider a Category 6 for mega-hurricane era

In 1973, the National Hurricane Center introduced the Saffir-Simpson scale, a five-category rating system that classified hurricanes by wind intensity.

At the bottom of the scale was Category 1, for storms with sustained winds of 74 to 95 mph. At the top was Category 5, for disasters with winds of 157 mph or more.

In the half-century since the scale’s debut, land and ocean temperatures have steadily risen as a result of greenhouse gas emissions. Hurricanes have become more intense, with stronger winds and heavier rainfall.

With catastrophic storms regularly blowing past the 157-mph threshold, some scientists argue, the Saffir-Simpson scale no longer adequately conveys the threat the biggest hurricanes present.

Earlier this year, two climate scientists published a paper that compared historical storm activity to a hypothetical version of the Saffir-Simpson scale that included a Category 6, for storms with sustained winds of 192 mph or more.
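The scale, with the hypothetical extension, amounts to a simple threshold lookup. The sketch below is illustrative only: the function and constant names are made up for this example, the Category 2 through 4 thresholds come from the National Hurricane Center's published scale rather than from this article, and Category 6 is not an official rating.

```python
# Lower wind-speed bounds in mph for Categories 1-5. The Category 1 and 5
# bounds appear in the article; the Category 2-4 bounds come from the
# National Hurricane Center's published scale.
CATEGORY_FLOORS = [(5, 157), (4, 130), (3, 111), (2, 96), (1, 74)]

# Hypothetical Category 6 threshold described in the paper (not official).
CATEGORY_6_FLOOR = 192


def saffir_simpson(wind_mph, allow_category_6=False):
    """Return the category for a sustained wind speed, or 0 below hurricane strength."""
    if allow_category_6 and wind_mph >= CATEGORY_6_FLOOR:
        return 6
    for category, floor in CATEGORY_FLOORS:
        if wind_mph >= floor:
            return category
    return 0


print(saffir_simpson(215))                         # existing five-category scale
print(saffir_simpson(215, allow_category_6=True))  # hypothetical extension
```

On the existing scale, a storm with 215-mph sustained winds gets the same rating as one at 157 mph; only the hypothetical extension separates the two.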

Of the 197 hurricanes classified as Category 5 from 1980 to 2021, five fit the description of a hypothetical Category 6 hurricane: Typhoon Haiyan in 2013, Hurricane Patricia in 2015, Typhoon Meranti in 2016, Typhoon Goni in 2020 and Typhoon Surigae in 2021.

Patricia, which made landfall near Jalisco, Mexico, in October 2015, is the most powerful tropical cyclone ever recorded in terms of maximum sustained winds. (While the paper looked at global storms, only storms in the Atlantic Ocean and the northern Pacific Ocean east of the International Date Line are officially ranked on the Saffir-Simpson scale. Other parts of the world use different classification systems.)

Though the storm had weakened to a Category 4 by the time it made landfall, its sustained winds over the Pacific Ocean hit 215 mph.

“That’s kind of incomprehensible,” said Michael F. Wehner, a senior scientist at the Lawrence Berkeley National Laboratory and co-author of the Category 6 paper. “That’s faster than a racing car in a straightaway. It’s a new and dangerous world.”

In their paper, which was published in the Proceedings of the National Academy of Sciences, Wehner and co-author James P. Kossin of the University of Wisconsin–Madison did not explicitly call for the adoption of a Category 6, primarily because the scale is quickly being supplanted by other measurement tools that more accurately gauge the hazard of a specific storm.

“The Saffir-Simpson scale is not all that good for warning the public of the impending danger of a storm,” Wehner said.

The category scale measures only sustained wind speeds, which is just one of the threats a major storm presents. Of the 455 direct fatalities in the U.S. due to hurricanes from 2013 to 2023 — a figure that excludes deaths from 2017’s Hurricane Maria — less than 15% were caused by wind, National Hurricane Center director Mike Brennan said during a recent public meeting. The rest were caused by storm surges, flooding and rip tides.

The Saffir-Simpson scale is a relic of an earlier age in forecasting, Brennan said.

“Thirty years ago, that’s basically all we could tell you about a hurricane, is how strong it was right now. We couldn’t really tell you much about where it was going to go, or how strong it was going to be, or what the hazards were going to look like,” Brennan said during the meeting, which was organized by the American Meteorological Society. “We can tell people a lot more than that now.”

Brennan confirmed that the National Hurricane Center has no plans to introduce a Category 6, primarily because it is already trying “to not emphasize the scale very much.” Other meteorologists said that’s the right call.

“I don’t see the value in it at this time,” said Mark Bourassa, a meteorologist at Florida State University’s Center for Ocean-Atmospheric Prediction Studies. “There are other issues that could be better addressed, like the spatial extent of the storm and storm surge, that would convey more useful information [and] help with emergency management as well as individual people’s decisions.”

Simplistic as they are, Herbert Saffir and Robert Simpson’s categories are the first thing many people think of when they try to grasp the scale of a storm. In that sense, the scale’s persistence over the years helps people understand how much the climate has changed since its introduction.

“What the Saffir-Simpson scale is good for is quantifying, showing, that the most intense storms are becoming more intense because of climate change,” Wehner said. “It’s not like it used to be.”

Science

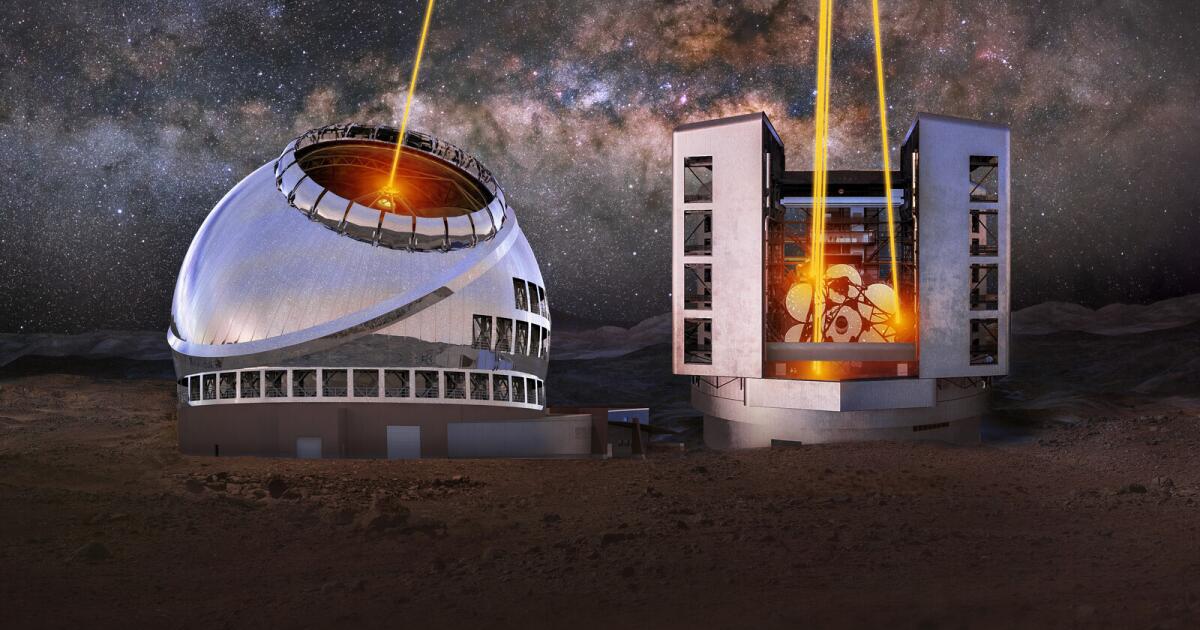

Opinion: America's 'big glass' dominance hangs on the fate of two powerful new telescopes

More than 100 years ago, astronomer George Ellery Hale brought our two Pasadena institutions together to build what was then the largest optical telescope in the world. The Mt. Wilson Observatory changed the conception of humankind’s place in the universe and revealed the mysteries of the heavens to generations of citizens and scientists alike. Ever since then, the United States has been at the forefront of “big glass.”

In fact, our institutions, Carnegie Science and Caltech, still help run some of the largest telescopes for visible-light astronomy ever built.

But that legacy is being threatened as the National Science Foundation, the federal agency that supports basic research in the U.S., considers whether to fund two giant telescope projects. What’s at stake is falling behind in astronomy and cosmology, potentially for half a century, and surrendering the scientific and technological agenda to Europe and China.

In 2021, the National Academy of Sciences released Astro2020. This report, a road map of national priorities, recommended funding the $2.5-billion Giant Magellan Telescope at the peak of Cerro Las Campanas in Chile and the $3.9-billion Thirty Meter Telescope at Mauna Kea in Hawaii. According to those plans, the telescopes would be up and running sometime in the 2030s.

NASA and the Department of Energy backed the plan. Still, the National Science Foundation’s governing board on Feb. 27 said it should limit its contribution to $1.6 billion, enough to move ahead with just one telescope. The NSF intends to present its process for making a final decision in early May, when it will also ask for an update on nongovernmental funding for the two telescopes. The ultimate arbiter is Congress, which sets the agency’s budget.

America has learned the hard way that falling behind in science and technology can be costly. Beginning in the 1970s, the U.S. ceded its powerful manufacturing base, once the nation’s pride, to Asia. Fast-forward to 2022, when the U.S. government marshaled a genuine effort toward rebuilding and restarting its factories (for advanced manufacturing, clean energy and more) with the Inflation Reduction Act, which is expected to cost more than $1 trillion.

President Biden also signed into law the $280-billion CHIPS and Science Act two years ago to revive domestic research and manufacturing of semiconductors — which the U.S. used to dominate — and narrow the gap with China.

As of 2024, America is the unquestioned leader in astronomy, building powerful telescopes and making significant discoveries. A failure to step up now would cede our dominance in ways that would be difficult to remedy.

The National Science Foundation’s decision will be highly consequential. Europe, which is on the cusp of overtaking the U.S. in astronomy, is building the aptly named Extremely Large Telescope, and the United States hasn’t been invited to partner in the project. Russia aims to create a new space station and link up with China to build an automated nuclear reactor on the moon.

Although we welcome any sizable grant for new telescope projects, it’s crucial to understand that allocating funds sufficient for just one of the two planned telescopes won’t suffice. The Giant Magellan and the Thirty Meter telescopes are designed to work together to create capabilities far greater than the sum of their parts. They are complementary ground stations. The GMT would have an expansive view of the southern hemisphere heavens, and the TMT would do the same for the northern hemisphere.

The goal is “all-sky” observation, a wide-angle view into deep space. Europe’s Extremely Large Telescope won’t have that capability. Besides boosting America’s competitive edge in astronomy, the powerful dual telescopes, with full coverage of both hemispheres, would allow researchers to gain a better understanding of phenomena that come and go quickly, such as colliding black holes and the massive stellar explosions known as supernovas. They would put us on a path to explore Earth-like planets orbiting other suns and address the question: “Are we alone?”

Funding both the GMT and TMT is an investment in basic science research, the kind of fundamental work that typically has led to economic growth and innovation in our uniquely American ecosystem of scientists, investors and entrepreneurs.

Elon Musk’s SpaceX is the most recent example, but the synergy goes back decades. Basic science at the vaunted Bell Labs, in part supported by taxpayer contributions, was responsible for the transistor, the discovery of the cosmic microwave background and establishing the basis of modern quantum computing. The internet, in large part, started as a military communications project during the Cold War.

Beyond its economic ripple effect, basic research in space and about the cosmos has played an outsized role in the imagination of Americans. In the 1960s, Dutch-born American astronomer Maarten Schmidt was the first scientist to identify a quasar, a star-like object that emits radio waves, a discovery that supported a new understanding of the creation of the universe: the Big Bang. The first picture of a black hole, seen with the Event Horizon Telescope, was front-page news in 2019.

We understand that competing in astronomy has only gotten more expensive, and there’s a need to concentrate on a limited number of critical projects. But what could get lost in the shuffle is the kind of ambitious project that has made America the scientific envy of the world, inspiring new generations of researchers and attracting the best minds in math and science to our colleges and universities.

Do we really want to pay that price?

Eric D. Isaacs is the president of Carnegie Science, prime backer of the Giant Magellan Telescope. Thomas F. Rosenbaum is president of Caltech, key developer of the Thirty Meter Telescope.

-

Kentucky1 week ago

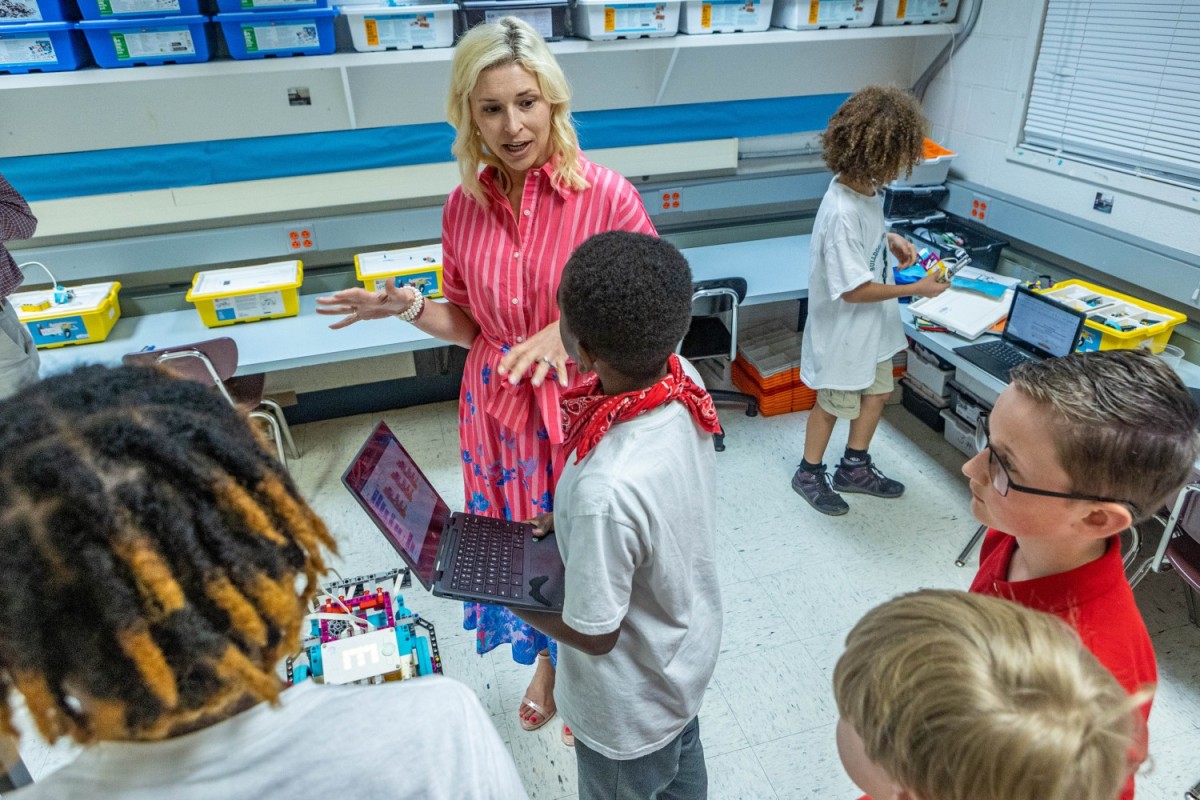

Kentucky1 week agoKentucky first lady visits Fort Knox schools in honor of Month of the Military Child

-

News1 week ago

News1 week agoIs this fictitious civil war closer to reality than we think? : Consider This from NPR

-

World1 week ago

World1 week agoShipping firms plead for UN help amid escalating Middle East conflict

-

Politics1 week ago

Politics1 week agoICE chief says this foreign adversary isn’t taking back its illegal immigrants

-

Politics1 week ago

Politics1 week ago'Nothing more backwards' than US funding Ukraine border security but not our own, conservatives say

-

News1 week ago

News1 week agoThe San Francisco Zoo will receive a pair of pandas from China

-

World1 week ago

World1 week agoTwo Mexican mayoral contenders found dead on same day

-

Politics1 week ago

Politics1 week agoRepublican aims to break decades long Senate election losing streak in this blue state