Technology

Texas is replacing thousands of human exam graders with AI

Students in Texas taking their state-mandated exams this week are being used as guinea pigs for a new artificial intelligence-powered scoring system set to replace a majority of human graders in the region.

The Texas Tribune reports an “automated scoring engine” that utilizes natural language processing — the technology that enables chatbots like OpenAI’s ChatGPT to understand and communicate with users — is being rolled out by the Texas Education Agency (TEA) to grade open-ended questions on the State of Texas Assessments of Academic Readiness (STAAR) exams. The agency is expecting the system to save $15–20 million per year by reducing the need for temporary human scorers, with plans to hire under 2,000 graders this year compared to the 6,000 required in 2023.

The STAAR exams, which test students between the third and eighth grades on their understanding of the core curriculum, were redesigned last year to include fewer multiple-choice questions. They now contain up to seven times more open-ended questions, with TEA director of student assessment Jose Rios saying the agency “wanted to keep as many constructed open-ended responses as we can, but they take an incredible amount of time to score.”

According to a slideshow hosted on TEA’s website, the new scoring system was trained using 3,000 exam responses that had already received two rounds of human grading. Some safety nets have also been implemented — a quarter of all the computer-graded results will be rescored by humans, for example, as will answers that confuse the AI system (including the use of slang or non-English responses).

While TEA is optimistic that AI will enable it to save buckets of cash, some educators aren’t so keen to see it implemented. Lewisville Independent School District superintendent Lori Rapp said her district saw a “drastic increase” in constructed responses receiving a zero score when the automated grading system was used on a limited basis in December 2023. “At this time, we are unable to determine if there is something wrong with the test question or if it is the new automated scoring system,” Rapp said.

AI essay-scoring engines are nothing new. A 2019 report from Motherboard found that they were being used in at least 21 states to varying degrees of success, though TEA seems determined to avoid the same reputation. Small print on TEA’s slideshow also stresses that its new scoring engine is a closed system that’s inherently different from AI, in that “AI is a computer using progressive learning algorithms to adapt, allowing the data to do the programming and essentially teaching itself.”

The attempt to draw a line between them isn’t surprising: there’s no shortage of teachers despairing online about how generative AI services are being used to cheat on assignments and homework. Students graded by this new scoring system may see a case of “rules for thee and not for me” being applied here.

Amazon says its Prime deliveries are getting even faster

To me, Prime’s promise of two-day shipping is more of an added bonus to Prime Video and stuff like Fallout. But it’s become an expectation, leading other retailers like Walmart and Target to roll out faster shipping options of their own.

Now, Amazon says its deliveries are getting even faster, announcing that it delivered over 2 billion items the same or next day to Prime members during the first three months of 2024, breaking its record for 2023. The company says it delivered almost 60 percent of Prime orders the same or next day in 60 of the biggest metropolitan areas in the US.

If you buy from Amazon, have you noticed any differences lately? Same-day and next-day options seem to be more widely available, but it’s hard to tell how that applies to different items in different places or whether the associated costs are worth it based on reports of warehouse injuries and workers organizing for better conditions.

The control Amazon has over shipping and fulfillment has helped make it the target of a lawsuit from the Federal Trade Commission. The agency alleges Amazon engages in anticompetitive behavior by unfairly limiting which sellers are eligible for Prime shipping and coercing companies into using its fulfillment services. Amazon claims that the FTC’s efforts could result in “slower or less reliable” Prime shipping for customers.

In 2019, Amazon said it was spending billions to build up an in-house fulfillment operation covering planes, trucks, drones, and robots to rival FedEx and UPS and enable these one-day-or-less deliveries. And last year, it introduced a program that allows sellers to ship their products directly from factories — regardless of whether they’re going to one of Amazon’s many warehouses.

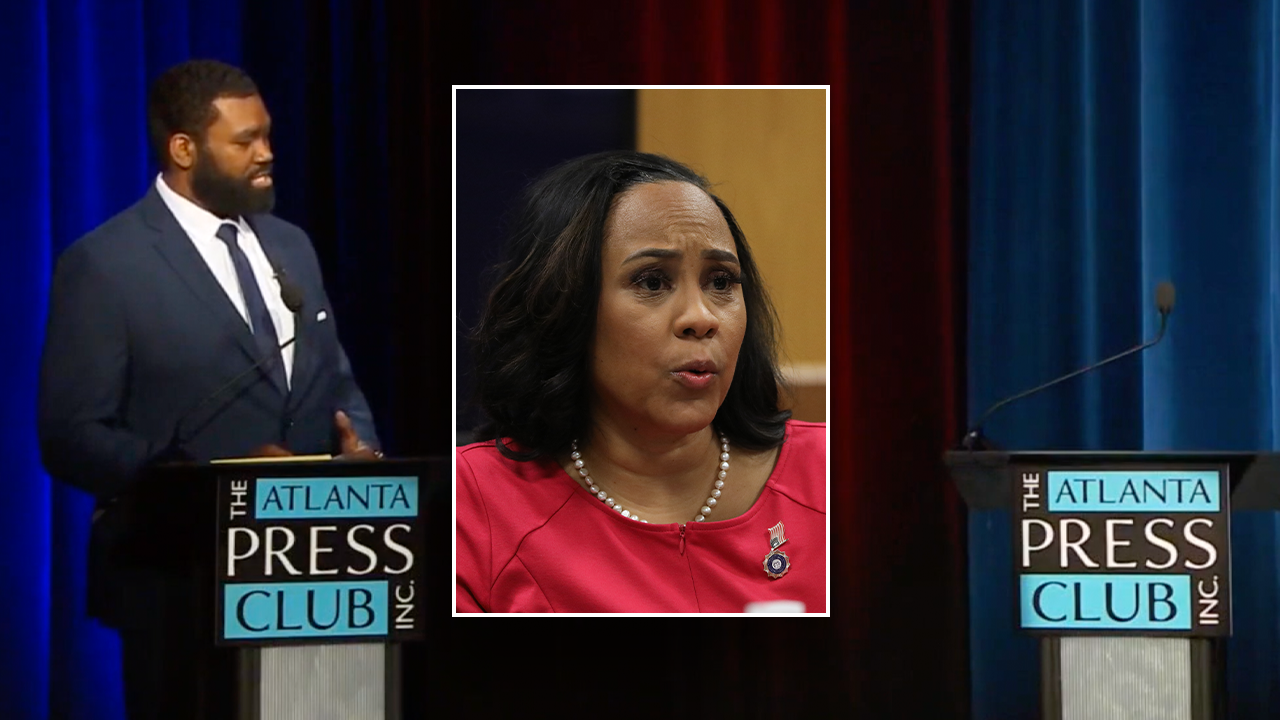

How ‘Yahoo Boys’ use real-time face-swapping to carry out elaborate romance scams

We’ve all heard of catfish scams, in which someone poses as a romantic interest online but turns out not to be who they claimed once their real face is revealed. Now, there’s a similar scam on the rise, and it’s much more sophisticated because scammers can fake the face, too. The scam is known as the “Yahoo Boys” scam, and it’s taking “catfishing” to a whole new level.

Woman on laptop making a heart sign (Kurt “CyberGuy” Knutsson)

How does deepfake technology work?

Deepfake technology uses AI to allow people to impersonate others over audio or video. The technology can replicate someone’s face, facial expressions, gestures, voice and more, so that the scammer can pretend to be someone they aren’t with almost perfect accuracy. Although deepfake technology has some legitimate uses, such as in the film industry or advertising (though the ethics of this are debated), it’s generally used for more malicious purposes than anything else.

Because it’s very difficult to tell whether you’re looking at the real person or a deepfake (and also because deepfakes are relatively new), deepfakes have the potential to do a lot of damage. They can be used to spread inaccurate news stories that sway public opinion and political processes, or to defraud individuals through scams, so it’s important to know what to watch out for.

Woman with a scan on her face (Kurt “CyberGuy” Knutsson)

What is the ‘Yahoo Boys’ scam?

The “Yahoo Boys” scam involves a group of sophisticated cybercriminals, primarily based in Nigeria, who are using this technology to conduct what are otherwise known as romance scams. As in some catfishing attempts, they first build trust with victims through personal and romantic interactions over messaging and, eventually, video calls, where they manipulate their appearance in real time to match the description and profile they may have shared with the victim up until that point.

By doing this, they can trick the victim into trusting them even more. This is because most of us still use video as a way to verify a person’s identity, when messaging isn’t convincing enough. Finally, when the timing is right, the victims are often persuaded into transferring money based on various fabricated scenarios, leading to significant financial losses. In fact, the FBI reported over $650 million lost to romance scams like these.

Man on his cellphone (Kurt “CyberGuy” Knutsson)

How does it actually work?

In the case of the “Yahoo Boys” scam, the scammers do their dirty work in four steps:

Step 1: The scammer will use two smartphones or a combination of a smartphone and a laptop. One device is used to conduct the video call with the victim, while the other runs face-swapping software.

Step 2: On a secondary device, the scammer activates face-swapping software. This device’s camera films the scammer’s face, and the software adds a digital mask over it. This mask is a realistic replica of another person’s facial features, which the scammer has chosen to impersonate. The software is sophisticated enough to track and mimic the scammer’s facial movements and expressions in real time, altering everything from skin tone and facial structure to hair and gender to match the chosen identity.

Step 3: For the video call, the scammer uses a primary device with its rear camera aimed at the secondary device’s screen. This screen shows the deepfake – the digitally altered face. The rear camera captures this and sends it to the victim, who sees the deepfake as if it’s the scammer’s actual face. To make the illusion more convincing, the devices are stabilized on stands, and ring lights provide even, flattering lighting. This setup ensures that the deepfake appears clear and stable, tricking the victim into believing they’re seeing a real person.

Step 4: Throughout the call, the scammer speaks using their own voice, although in some setups, voice-altering technology might also be used to match the voice to the deep-faked face. This comprehensive disguise allows the scammer to interact naturally with the victim, reinforcing the illusion.

Though each deepfake scam is different, a basic understanding of how scams like these work can help you recognize them.

Scammer typing on a keyboard (Kurt “CyberGuy” Knutsson)

How to stay safe from deepfake scams

To protect yourself from deepfake scams like the “Yahoo Boys” scam, here’s what you can do:

Verify identities: Always confirm the identity of individuals you meet online through video calls by asking them to perform unpredictable actions in real time, like writing a specific word on paper and showing it on camera.

Be skeptical of unusual requests: Be cautious if someone you’ve only met online requests money, personal information or any other sensitive details.

Enhance privacy settings: Adjust privacy settings on social media and other platforms to limit the amount of personal information available publicly, which can be used to create deepfake content.

Use secure communication channels: Prefer secure, encrypted platforms for communications and avoid sharing sensitive content over less secure channels.

Educate yourself about deepfakes: Stay informed about the latest developments in deepfake technology to better recognize potentially manipulated content.

Report suspicious activity: If you encounter a potential scam or deepfake attempt, report it to the relevant authorities or platforms to help prevent further incidents.

By following these guidelines, you can reduce your risk of falling victim to sophisticated digital scams and protect your personal and financial information from falling into the hands of these scammers.

Woman talking on her cellphone (Kurt “CyberGuy” Knutsson)

Protecting your identity in the age of deepfakes

As deepfake technology becomes more accessible and convincing, the risk of identity theft increases. Scammers can use stolen personal information to create more believable deepfakes, making it harder for you to detect fraud. Furthermore, the sophistication of deepfakes may allow criminals to bypass biometric security measures, potentially granting them unauthorized access to your personal accounts and sensitive financial information. This is where identity theft protection services become invaluable.

Identity theft protection companies can monitor personal information like your Social Security number, phone number and email address and alert you if it is being sold on the dark web or being used to open an account. They can also assist you in freezing your bank and credit card accounts to prevent further unauthorized use by criminals.

One of the best parts of using some services is that they might include identity theft insurance of up to $1 million to cover losses and legal fees and a white-glove fraud resolution team where a U.S.-based case manager helps you recover any losses. See my tips and best picks on how to protect yourself from identity theft.

By subscribing to a reputable identity theft protection service, you can add an extra layer of security, ensuring that your digital presence is monitored and protected against the ever-evolving tactics of cybercriminals like the “Yahoo Boys.”

Kurt’s key takeaways

The main targets of the “Yahoo Boys” scam are individuals who establish online romantic relationships with the scammers. Because these victims are often emotionally invested, they are more susceptible to manipulation, and no specific age group or demographic is immune. And because the root of all these scams is deepfake technology, truly anyone can become a target. So, we hope this helps, but also, be sure to spread the word to friends and family.

Have you heard of any other types of deepfake scams? Or do you know anyone who has been a victim of scams like this? Let us know by writing us at Cyberguy.com/Contact.

Copyright 2024 CyberGuy.com. All rights reserved.

Financial Times signs licensing deal with OpenAI

The Financial Times has struck a deal with OpenAI to license its content and develop AI tools, making it the latest news organization to work with the AI company.

The FT writes in a press release that ChatGPT users will see summaries, quotes, and links to its articles. Any prompt that returns information from the FT will be attributed to the publication.

In return, OpenAI will work with the news organization to develop new AI products. The FT already uses OpenAI products, saying it is a customer of ChatGPT Enterprise. Last month, the FT released a generative AI search function in beta, powered by Anthropic’s Claude large language model. Called Ask FT, it lets subscribers find information across the publication’s articles.

Financial Times Group CEO John Ridding says that even as the company partners with OpenAI, the publication continues to commit to “human journalism.”

“It’s right, of course, that AI platforms pay publishers for the use of their material,” Ridding says. He adds that “it’s clearly in the interests of users that these products contain reliable sources.”

-

Kentucky1 week ago

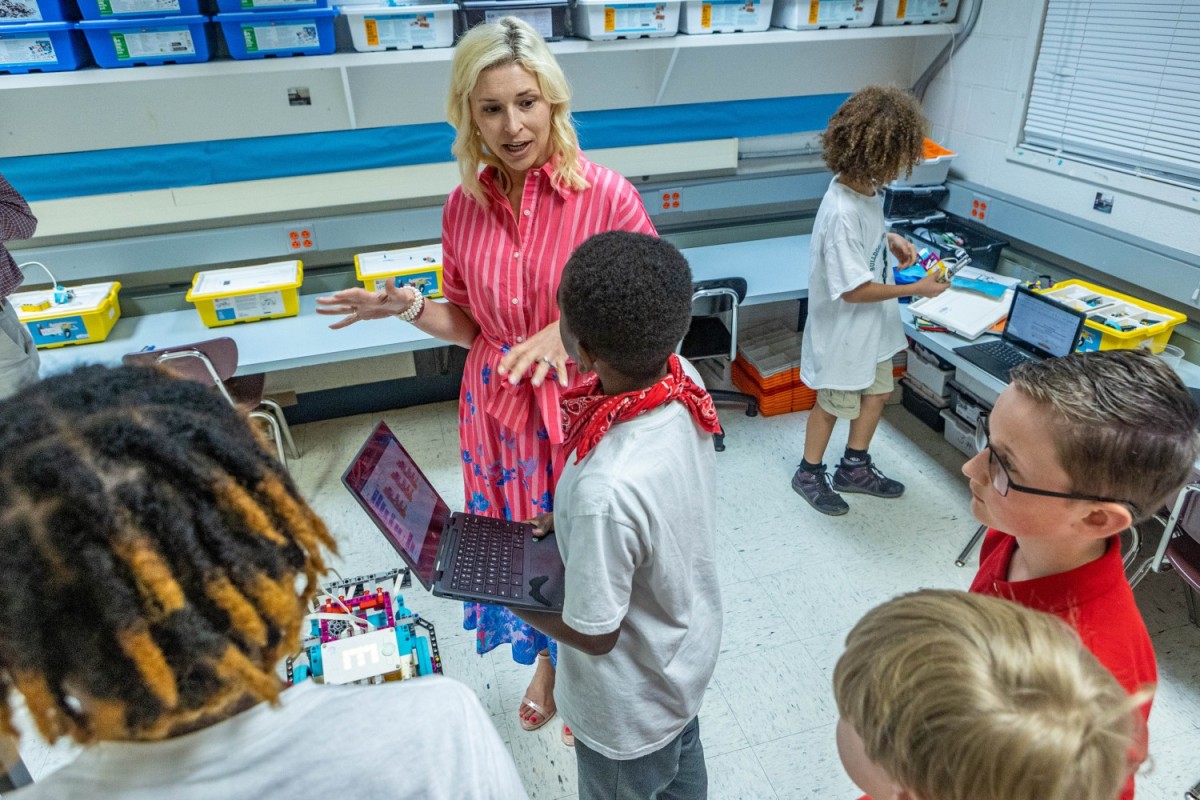

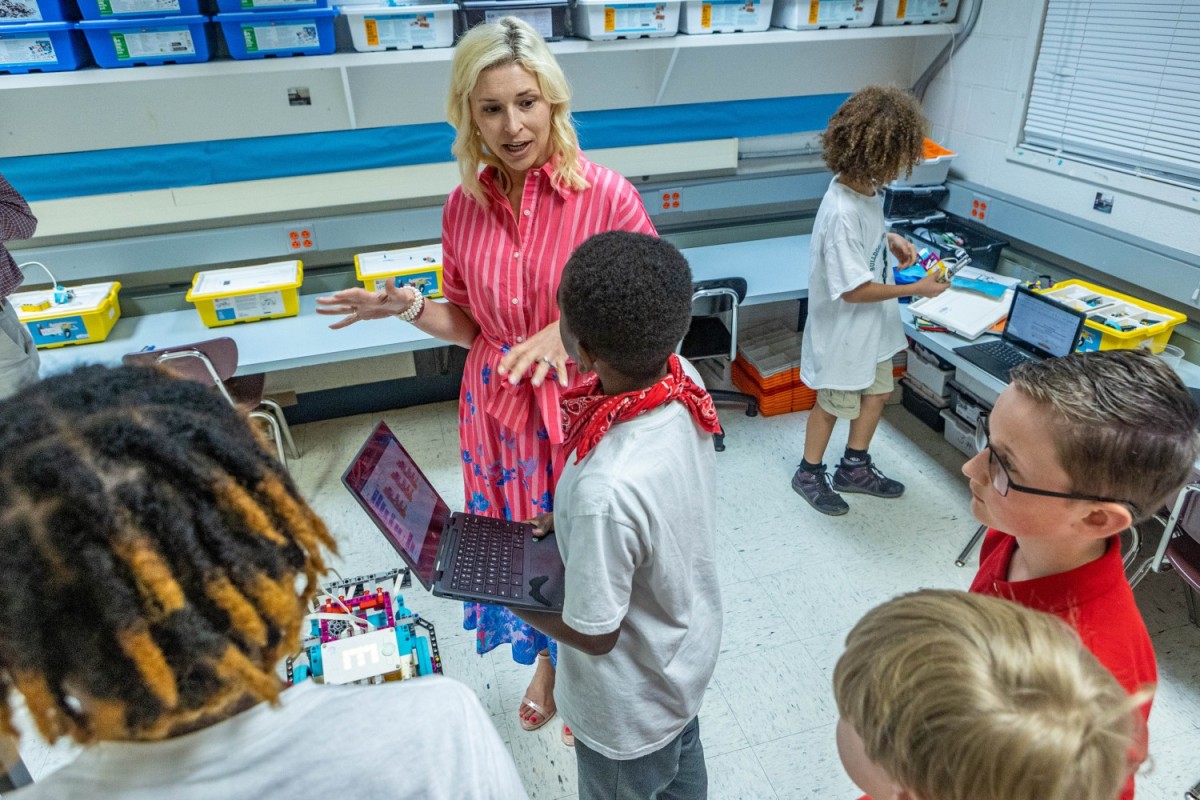

Kentucky1 week agoKentucky first lady visits Fort Knox schools in honor of Month of the Military Child

-

News1 week ago

News1 week agoIs this fictitious civil war closer to reality than we think? : Consider This from NPR

-

World1 week ago

World1 week agoShipping firms plead for UN help amid escalating Middle East conflict

-

Politics1 week ago

Politics1 week agoICE chief says this foreign adversary isn’t taking back its illegal immigrants

-

Politics1 week ago

Politics1 week ago'Nothing more backwards' than US funding Ukraine border security but not our own, conservatives say

-

News1 week ago

News1 week agoThe San Francisco Zoo will receive a pair of pandas from China

-

World1 week ago

World1 week agoTwo Mexican mayoral contenders found dead on same day

-

Politics1 week ago

Politics1 week agoRepublican aims to break decades long Senate election losing streak in this blue state