Technology

iOS 15.4 now live as Universal Control comes to iPads and Macs

iOS 15.4, the latest version of Apple’s iPhone operating system, has been released and will soon be available to download ahead of the Friday launch of Apple’s new third-generation iPhone SE and green iPhone 13. The update includes big new features like the ability to use Face ID while wearing a mask, alongside smaller additions like a handful of new emoji. The new version of iOS launches alongside iPadOS 15.4 and macOS 12.3, which add support for Apple’s new Universal Control feature.

Universal Control lets you use a Mac’s keyboard and mouse to control an iPad entirely wirelessly, including the ability to drag and drop files from the tablet back to a computer. Universal Control requires your Mac to be running macOS Monterey 12.3, which was also released Monday, and your iPad to be running iPadOS 15.4.

We already have a pretty good idea of how Apple’s new mask-friendly Face ID implementation should work after trying it out in beta. In short, it does a decent job of recognizing faces even when the nose and mouth are covered with a mask but can still get tripped up by glasses, sunglasses, and floppy hats. Apple does warn that turning the new feature on may make Face ID less accurate overall.

Other additions include new anti-stalking features for Apple’s AirTag trackers as well as a new, more gender-neutral Siri voice. There’s also support for a new Tap to Pay feature that lets iPhones accept contactless payments, though third-party providers like Stripe will need to add support to make it useful.

iOS 15.4 will be available for all iOS 15-compatible devices, which means anything from the iPhone 6S up is covered. iPadOS 15.4, meanwhile, is available for all iPad Pro models, the iPad Air second generation and up, the iPad mini fourth generation and up, and everything from the fifth generation of base iPad and later.

Finally, macOS Monterey 12.3 is supported on the following: iMac (late 2015 and newer), iMac Pro (2017 and newer), Mac Pro (late 2013 and newer), Mac mini (late 2014 and newer), MacBook Pro (early 2015 and newer), MacBook Air (early 2015 and newer), MacBook (early 2016 and newer).

You can check for the update on your iPhone or iPad by going to Settings > General > Software Update. On Mac, you can go to System Preferences > Software Update.

Update March 14th, 1:15PM ET: Added information about macOS 12.3, which has also been released.

Technology

Did Stanford just prototype the future of AR glasses?

For now, the lab version has an anemic field of view: just 11.7 degrees, far smaller than a Magic Leap 2 or even a Microsoft HoloLens.

But Stanford’s Computational Imaging Lab has an entire page with visual aid after visual aid that suggests it could be onto something special: a thinner stack of holographic components that could nearly fit into standard glasses frames, and be trained to project realistic, full-color, moving 3D images that appear at varying depths.

Like other AR eyeglasses, they use waveguides, components that guide light through the glasses and into the wearer’s eyes. But researchers say they’ve developed a unique “nanophotonic metasurface waveguide” that can “eliminate the need for bulky collimation optics,” and a “learned physical waveguide model” that uses AI algorithms to drastically improve image quality. The study says the models “are automatically calibrated using camera feedback.”

Although the Stanford tech is currently just a prototype, with working models that appear to be attached to a bench and 3D-printed frames, the researchers are looking to disrupt the current spatial computing market that also includes bulky passthrough mixed reality headsets like Apple’s Vision Pro, Meta’s Quest 3, and others.

Postdoctoral researcher Gun-Yeal Lee, who helped write the paper published in Nature, says there’s no other AR system that compares in both capability and compactness.

Technology

Apple plans to use M2 Ultra chips in the cloud for AI

Apple plans to start its foray into generative AI by offloading complex queries to M2 Ultra chips running in data centers before moving to its more advanced M4 chips.

Bloomberg reports that Apple plans to put its M2 Ultra on cloud servers to run more complex AI queries, while simple tasks are processed on devices. The Wall Street Journal previously reported that Apple wanted to make custom chips to bring to data centers to ensure security and privacy in a project the publication says is called Project ACDC, or Apple Chips in Data Center. But the company now believes its existing processors already have sufficient security and privacy components.

The chips will be deployed to Apple’s data centers and eventually to servers run by third parties. Apple runs its own servers across the United States and has been working on a new center in Waukee, Iowa, which it first announced in 2017.

While Apple has not moved as fast on generative AI as competitors like Google, Meta, and Microsoft, the company has been putting out research on the technology. In December, Apple’s machine learning research team released MLX, a machine learning framework that can make AI models run efficiently on Apple silicon. The company has also been releasing other research around AI models that hint at what AI could look like on its devices and how existing products, like Siri, may get an upgrade.

Apple put a big emphasis on AI performance in its announcement of the new M4 chip, saying its new neural engine is “an outrageously powerful chip for AI.”

Technology

NASA’s Dragonfly drone cleared for flight to Saturn’s moon, Titan

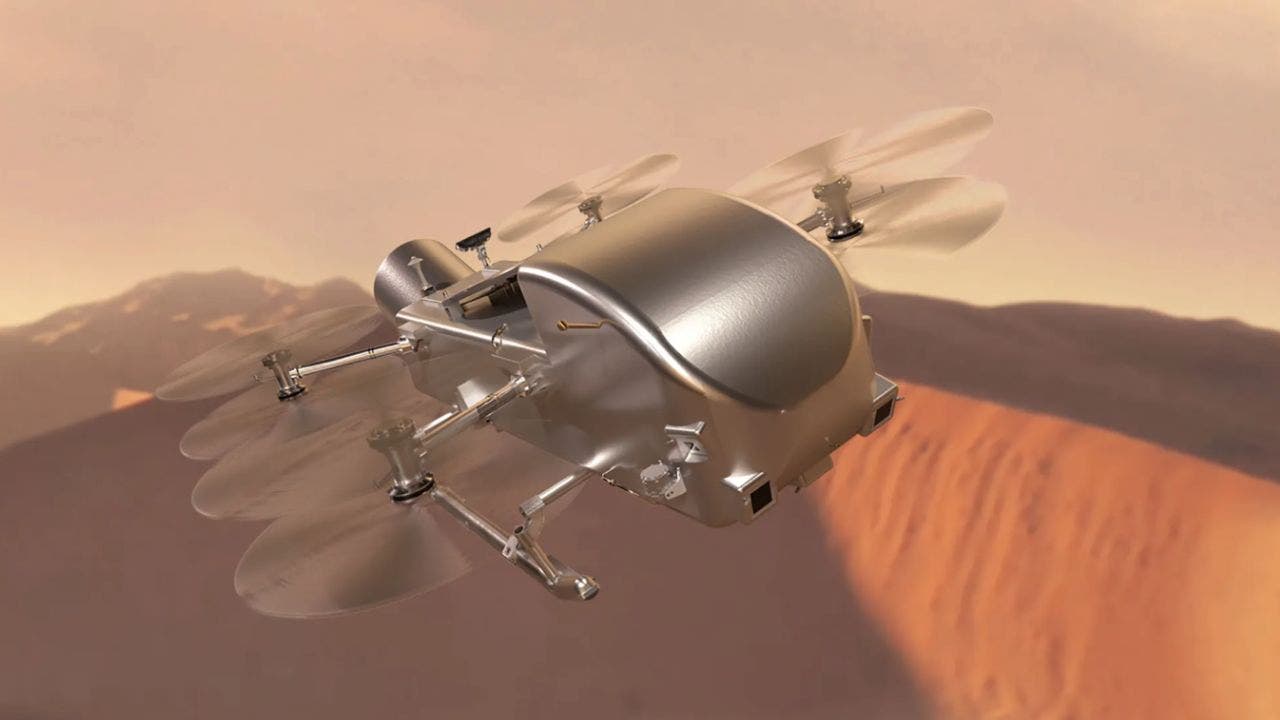

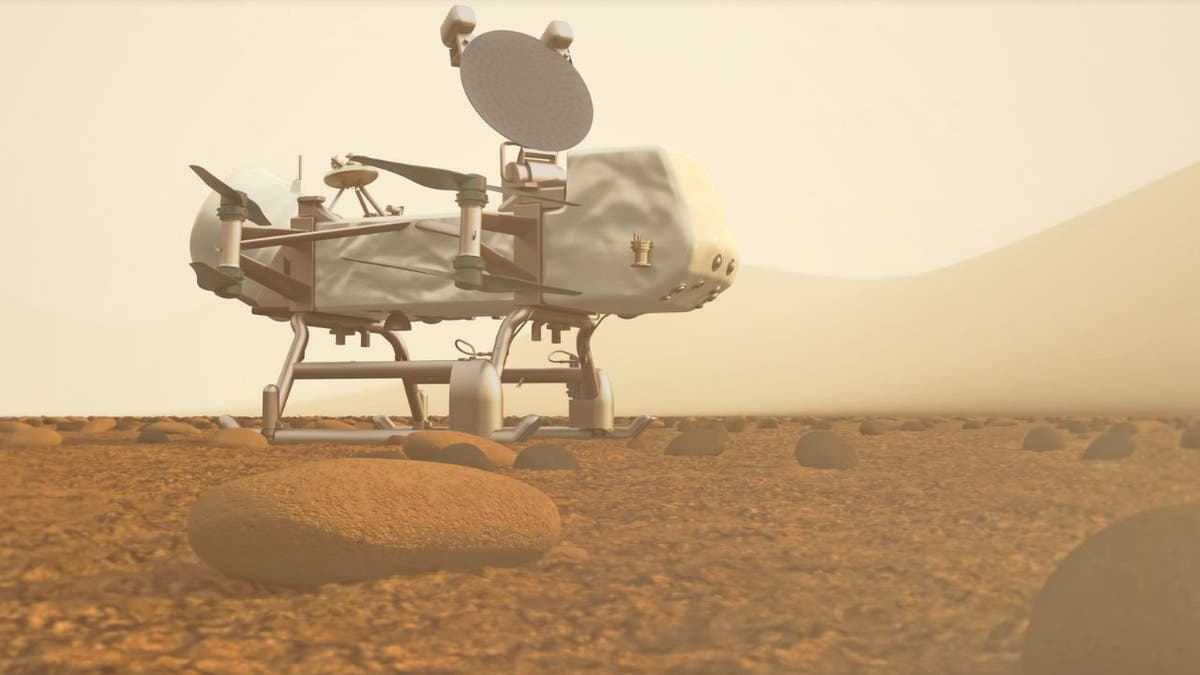

After overcoming the hurdles of COVID-19 delays and budget overruns, NASA has finally given the Dragonfly rotorcraft mission the go-ahead.

This autonomously operated nuclear-powered rotorcraft is set to embark on a groundbreaking journey to Saturn’s largest moon, Titan, in 2028.

Artist’s concept of Dragonfly rotorcraft (NASA/JHU-APL)

Why Titan?

Titan is no ordinary celestial body. Located about 746 million miles from Earth, it’s the second-largest moon in our solar system and the only moon known to have a dense atmosphere. But what makes Titan truly unique is its organic chemistry. With an atmosphere rich in nitrogen and methane, it’s a haven for scientists seeking to understand the building blocks of life.

The challenges of exploration

Titan’s swamp-like surface, composed of petroleum byproducts, poses a significant challenge for exploration. Traditional rovers won’t do well there. Enter Dragonfly, a rotorcraft powered by a radioisotope thermoelectric generator. It flies using aluminum/titanium rotors and is designed to leap across Titan’s landscape, conducting geological surveys and searching for biosignatures.

Dragonfly’s quest for life

Dragonfly’s mission is to travel to multiple locations on Saturn’s moon, Titan, to uncover signs of life. The spacecraft will scrutinize the surface and just beneath it, searching for organic compounds and life indicators. Equipped with a neutron spectrometer, a drilling mechanism and a mass spectrometer, Dragonfly will enable researchers to analyze Titan’s complex organic chemistry extensively.

The mission’s journey

Despite financial debates, the mission’s delay necessitates a more powerful rocket to ensure Dragonfly’s arrival on Titan. With a budget of $3.35 billion, the mission represents NASA’s commitment to pushing the boundaries of space exploration.

Kurt’s key takeaways

As NASA’s Dragonfly rotorcraft prepares to take flight, it is a testament to human ingenuity and the relentless pursuit of knowledge. This mission may not only unveil the secrets of Titan but also shed light on the origins of life itself. With the world watching, Dragonfly is poised to soar into the records of space exploration history.

How do you think the Dragonfly mission’s discoveries on Titan could reshape our understanding of life in the universe? Let us know by writing us at Cyberguy.com/Contact.

Copyright 2024 CyberGuy.com. All rights reserved.
