Apple’s been talking about its next generation of CarPlay for two years now with very little to show for it — the system is designed to unify the interfaces on every screen in your car, including the instrument cluster, but so far only Aston Martin and Porsche have said they’ll ship cars with the system, without any specific dates in the mix.

Technology

Apple’s fancy new CarPlay will only work wirelessly

And the public response from the rest of the industry towards next-gen CarPlay has been pretty cool overall. I talk to car CEOs on Decoder quite often, and most of them seem fairly skeptical about allowing Apple to get between them and their customers. “We have Apple CarPlay,” Mercedes-Benz CEO Ola Källenius told me in April. “If, for some of the functions, you feel more comfortable with that and will switch back and forth, be my guest. But to give up the whole cockpit head unit — in our case, a passenger screen and everything — to somebody else? The answer is no.”

That industry skepticism seems to have hit home for Apple, which posted two WWDC 2024 videos detailing the architecture and design of next-gen CarPlay. Both made it clear that automakers will have a lot of control over how things look and work, and even have the ability to just use their own interfaces for various features using something called “punch-through UI.” The result is an approach to CarPlay that’s much less “Apple runs your car” and much more “Apple built a design toolkit for automakers to use however they want.”

See, right now CarPlay is basically just a second monitor for your phone – you connect to your car, and your phone sends a video stream to the car. This is why those cheap wireless CarPlay dongles work – they’re just wireless display adapters, basically.

But if you want to integrate things like speedometers and climate controls, CarPlay needs to actually collect data from your car, display it in real time, and be able to control various features like HVAC directly. So for next-gen CarPlay, Apple’s split things into what it calls “layers,” some of which run on your iPhone and others that run locally on the car so they don’t break if your phone disconnects. And phone disconnects are going to be an issue, because next-generation CarPlay only supports wireless connections. “The stability and performance of the wireless connection are essential,” Apple’s Tanya Kancheva says while talking about the next-gen architecture. Given that CarPlay connectivity problems are still the most common issue in new cars, and that wireless made them worse, that’s something Apple needs to keep an eye on.

There are two layers that run locally on the car, in slightly different ways. There’s the “overlay UI,” which has things like your turn signals and odometer in it. It can be styled, but it runs entirely on your car and is otherwise untouchable. Then there is the “local UI,” which has things like your speedometer and tachometer: things related to driving that need to update all the time, basically. Automakers can customize these in several ways – there are different gauge styles and layouts, from analog to digital, and they can include logos and so on. Interestingly, there’s only one font choice: Apple’s San Francisco, which can be modified in various ways, but can’t be swapped out.

Apple’s goal for next-gen CarPlay is to have it start instantaneously — ideally when the driver opens the door — so the assets for these local UI elements are loaded onto the car from your phone during the pairing process. Carmakers can update how things look and send refreshed assets through the phone over time as well — exactly how and how often is still a bit unclear.

Then there’s what Apple calls “remote UI,” which is all stuff that runs on your phone: maps, music, trip info. This is the most like CarPlay today, except now it can run on any other screen in your car.

The final layer is called “punch-through UI,” and it’s where Apple is ceding the most ground to automakers. Instead of coming up with its own interface ideas for things like backup cameras and advanced driver-assistance features, Apple’s allowing carmakers to simply feed their existing systems through to CarPlay. When you shift to reverse, for example, the interface will simply show you your car’s backup camera screen.

But carmakers can use punch-through UI for basically anything they want, and even deeplink CarPlay buttons to their own interfaces. Apple’s example here is a vision of multiple colliding interface ideas all at once: a button in CarPlay to control massage seats that can either show native CarPlay controls, or simply drop you into the car’s own interface.

Or a hardware button to pick drive modes could send you to CarPlay settings, deeplink you into the automaker’s iPhone app, or just open the native car settings.

Apple’s approach to HVAC is also what amounts to a compromise: the company isn’t really rethinking anything about how HVAC controls work. Instead, it’s allowing carmakers to customize controls from a toolkit to match the car system and even display previews of a car interior that match trim and color options. If you’ve ever looked at a car with a weird SYNC button that keeps various climate zones paired up, well, the next generation of CarPlay has a weird SYNC button too.

All of this is kept running at 60fps (or higher, if the car system supports it) by a new dedicated UI timing channel, and a lot of the underlying compositing relies on OpenGL running on the car itself.
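To keep the four layers straight, here is a minimal Python sketch of which ones could keep rendering if the phone drops off. The layer names and example contents come from Apple’s description above; the `Layer` data model and the `layers_available` helper are hypothetical, and treating punch-through UI as car-side is an assumption based on it showing the automaker’s own interface.

```python
from dataclasses import dataclass
from enum import Enum


class RunsOn(Enum):
    CAR = "car"
    PHONE = "phone"


@dataclass(frozen=True)
class Layer:
    name: str
    runs_on: RunsOn
    examples: tuple


# Names and examples are taken from the article; the structure is illustrative only.
LAYERS = (
    Layer("overlay UI", RunsOn.CAR, ("turn signals", "odometer")),
    Layer("local UI", RunsOn.CAR, ("speedometer", "tachometer")),
    Layer("remote UI", RunsOn.PHONE, ("maps", "music", "trip info")),
    # Assumption: punch-through shows the car's own interface, so it is modeled as car-side.
    Layer("punch-through UI", RunsOn.CAR, ("backup camera", "driver-assistance features")),
)


def layers_available(phone_connected: bool) -> list:
    """Return the names of layers that can still render in this simplified model."""
    return [
        layer.name
        for layer in LAYERS
        if phone_connected or layer.runs_on is RunsOn.CAR
    ]


print(layers_available(phone_connected=False))
```

In this simplified model, only the remote UI disappears when the wireless connection fails, which is the behavior the split is meant to guarantee for gauges and indicators.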

All in all, it’s a lot of info, and what feels like a lot of Apple realizing that carmakers aren’t going to just give up their interfaces — especially since they’ve already invested in designing these sorts of custom interfaces for their native systems, many of which now run on Unreal Engine with lots of fun animations, and have Google services like Maps integrated right in. Allowing automakers to punch those interfaces through CarPlay might finally speed up adoption – and it also might create a mix-and-match interface nightmare.

All that said, it’s telling that no one has seen anything but renders of next-gen CarPlay anywhere yet. We’ll have to see what it’s like if these Porsche and Aston Martin cars ever arrive, and if that tips anyone else into adopting it.

A surprise God of War prequel is out on the PS5 right now

To close out its February 2026 State of Play presentation, Sony revealed God of War Sons of Sparta, a new prequel 2D side scroller in the God of War franchise, and announced that it’s out right now on PlayStation 5.

“God of War Sons of Sparta is a 2D action platformer with a canon story set in Kratos’ youth during his harsh training at the Agoge alongside his brother Deimos,” Sony says. Over the course of the game, Kratos will “learn deadly skills using his spear and shield, as well as harness powerful divine artifacts known as the Gifts of Olympus to take on a wide array of foes.”

Sony’s Santa Monica Studio collaborated on the game with Mega Cat Studios. It costs $29.99, with a Digital Deluxe version available for $39.99.

Sony also announced that it’s working on a remake of the original God of War trilogy, with TC Carson set to return as the voice of Kratos. However, the project is “still very early in development, so we ask for your patience as it will be a while before anything else can be shared,” according to Sony. “When we can come back with an update, we aim to make it a big one!”

How to safely view your bank and retirement accounts online

Logging into your bank, retirement or investment accounts is now part of everyday life. Still, for many people, it comes with a knot in the stomach. You hear about hacks, scams and stolen identities and wonder if simply checking your balance could open the door to trouble. That concern landed in our inbox from Mary.

“How do I protect my bank accounts, 401K and non-retirement accounts when I view them online?”

Mary’s question is a good one, because protecting your money online is not about one magic setting. It comes down to smart habits layered together.

Securing your device with updates and antivirus software is the first step in protecting your financial accounts online. (REUTERS/Andrew Kelly)

Secure your device before logging into financial accounts

Everything begins with the device in your hands. If it isn’t secure, even the strongest password can be exposed. These essentials help lock things down before you ever sign in.

Start with these device security basics:

- Keep your phone, tablet and computer fully updated with the latest operating system and browser versions

- Use strong, always-on antivirus protection to block malware and phishing attempts. Get my picks for the best 2026 antivirus protection winners for your Windows, Mac, Android and iOS devices at Cyberguy.com.

- Avoid public Wi-Fi when accessing financial accounts, or use a trusted VPN if you have no other option. For the best VPN software, see my expert review of the best VPNs for browsing the web privately on your Windows, Mac, Android and iOS devices at Cyberguy.com.

Protect your bank and investment account logins

Your login details are the front door to your money. Strengthening them reduces the chance that anyone else can get inside.

Strengthen your account logins by:

- Using strong, unique passwords for every financial account

- Avoiding saved passwords on shared or older devices

- Relying on a password manager to create and store credentials securely. Our No. 1 pick includes a built-in breach scanner that alerts you if your information appears in known leaks. If you find a match, change any reused passwords immediately and secure those accounts with new, unique credentials.

- Checking whether your email or passwords have appeared in known data breaches and updating reused passwords immediately. Check out the best expert-reviewed password managers of 2026 at Cyberguy.com.

- Turning on two-factor authentication (2FA) wherever it’s available
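If you are curious what “strong and unique” looks like in practice, here is a minimal Python sketch of the kind of random password a password manager generates for you. It uses Python’s standard `secrets` module; the `generate_password` name, the 20-character default and the 12-character minimum are assumptions for illustration, not a rule from any bank.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Build a random password containing lower, upper, digit and symbol characters."""
    if length < 12:
        # Assumed minimum for this sketch; your bank may set its own rules.
        raise ValueError("use at least 12 characters")

    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate


# Generate a separate password for each financial account.
print(generate_password())
```

Calling the function once per account gives every login its own credential, so one exposed password cannot unlock the others.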

Avoid common online banking scams when logging in

Even well-secured accounts can be compromised through careless access. How you log in matters.

Reduce your risk when accessing financial accounts by:

- Typing website addresses yourself or using saved bookmarks

- Avoiding login links sent by email or text, even if they look official

- Checking for “https” and the lock icon before entering credentials

- Logging out completely after every session, especially on mobile devices
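The “https” and address checks above can be sketched in a few lines of Python. This is only an illustration of what to look for, not a phishing detector: `looks_like_expected_login` is a hypothetical helper, `examplebank.com` is a placeholder domain, and a link that passes can still be malicious, so typing the address yourself remains the safer habit.

```python
from urllib.parse import urlsplit


def looks_like_expected_login(url: str, expected_domain: str) -> bool:
    """Check that a link uses https and points at the domain you expect."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    expected = expected_domain.lower()
    # Accept the exact domain or a true subdomain such as www.examplebank.com.
    on_expected_domain = host == expected or host.endswith("." + expected)
    return parts.scheme == "https" and on_expected_domain


# A look-alike address that merely starts with the bank's name fails the check.
print(looks_like_expected_login("https://www.examplebank.com/login", "examplebank.com"))
print(looks_like_expected_login("https://examplebank.com.evil.example/login", "examplebank.com"))
```

The second address shows a common trick: the real name appears at the start of the link, but the site you would actually reach is the one at the end of the hostname.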

Add extra layers of protection to financial accounts

Strong, unique passwords and two-factor authentication help stop criminals even if one login is exposed. (Photo by Neil Godwin/Future via Getty Images)

Think of these as early warning systems. They help catch problems quickly, before real damage is done.

Enable financial account alerts and safeguards by:

- Setting up alerts for logins, withdrawals, password changes and new payees

- Requiring extra confirmation for large or unusual transactions

- Freezing your credit with the major credit bureaus to block new accounts opened in your name. To learn more about how to do this, go to Cyberguy.com and search “How to freeze your credit.”

Protect your identity beyond your bank accounts

Your financial accounts are only part of the picture. Identity protection helps stop problems before they ever reach your bank.

Go beyond basic banking security by:

- Monitoring for identity theft involving your Social Security number, phone number and email

- Using an identity protection service that alerts you if your data appears on the dark web or is used fraudulently. See my tips and best picks on how to protect yourself from identity theft at Cyberguy.com.

- Removing your personal information from data broker websites that buy and sell consumer data. A data removal service reduces risk before identity theft happens. Check out my top picks for data removal services, and get a free scan to find out if your personal information is already out on the web by visiting Cyberguy.com.

Review bank and credit statements for early warning signs

Review your bank, credit card and investment statements regularly, even when nothing looks suspicious. Small red flags often appear long before major losses.

Everyday security habits that prevent financial scams

Many successful scams rely on pressure and trust, not advanced technology. Good habits close those gaps.

Practice smart daily security habits:

- Never allow anyone to log into your accounts remotely, even if they claim to be from your bank

- Avoid storing photos of IDs, Social Security cards, or account numbers on your phone or email

- Stop immediately if something feels off, and contact the institution directly using a verified phone number

Logging in the right way, by typing web addresses yourself and avoiding suspicious links, reduces phishing risks. (Martin Bertrand / Hans Lucas / AFP via Getty Images)

Kurt’s key takeaways

Checking your bank or retirement accounts online should feel routine, not risky. With updated devices, strong logins, careful access and smart habits, you can keep control of your money without giving up convenience. Security is not about fear. It is about staying one step ahead.

Have you ever clicked a financial alert and wondered afterward if it was real or a scam? Let us know your thoughts by writing to us at Cyberguy.com

Copyright 2026 CyberGuy.com. All rights reserved.

HP ZBook Ultra G1a review: a business-class workstation that’s got game

Business laptops are typically dull computers foisted on employees en masse. But higher-end enterprise workstation notebooks sometimes get an interesting enough blend of power and features to appeal to enthusiasts. HP’s ZBook Ultra G1a is a nice example. It’s easy to see it as another gray boring-book for spendy business types, until you notice a few key specs: an AMD Strix Halo APU, lots of RAM, an OLED display, and an adequate amount of speedy ports (Thunderbolt 4, even — a rarity on AMD laptops).

I know from my time with the Asus ROG Flow Z13 and Framework Desktop that anything using AMD’s high-end Ryzen AI Max chips should make for a compelling computer. But those two are a gaming tablet and a small form factor PC, respectively. Here, you get Strix Halo and its excellent integrated graphics in a straightforward, portable 14-inch laptop — so far, the only one of its kind. That should mean great performance with solid battery life, and the graphics chops to hang with midlevel gaming laptops — all in a computer that wouldn’t draw a second glance in a stuffy office. It’s a decent Windows (or Linux) alternative to a MacBook Pro, albeit for a very high price.

$3499

The Good

- Great screen, keyboard, and trackpad

- Powerful AMD Strix Halo chip

- Solid port selection with Thunderbolt 4

- Can do the work stuff, the boring stuff, and also game

The Bad

- Expensive

- Strix Halo can be power-hungry

- HP’s enterprise-focused security software is nagging

The HP ZBook Ultra G1a starts around $2,100 for a modest six-core AMD Ryzen AI Max Pro 380 processor, 16GB of shared memory, and a basic IPS display. Our review unit is a much higher-spec configuration with a 16-core Ryzen AI Max Plus Pro 395, 2880 x 1800 resolution 120Hz OLED touchscreen, 2TB of storage, and a whopping 128GB of shared memory, costing nearly $4,700. I often see it discounted by $1,000 or more, which is still expensive but more realistic for someone seeking a MacBook Pro alternative. Having this much shared memory is mostly useful for hefty local AI inference workloads and serious dataset crunching; most people don’t need it. But with the ongoing memory shortage I’d also understand wanting to futureproof.

- Screen: A

- Webcam: B

- Keyboard: B

- Trackpad: B

- Port selection: B

- Speakers: B

- Number of ugly stickers to remove: 1 (only a Windows sticker on the bottom)

Unlike cheaper HP laptops I’ve tested that made big sacrifices on everyday features like speaker quality, the ZBook Ultra G1a is very good across the board. The OLED is vibrant, with punchy contrast. The keyboard has nice tactility and deep key travel. The mechanical trackpad is smooth, with a good click feel. The 5-megapixel webcam looks solid in most lighting. And the speakers have a full sound that I’m happy to listen to music on all day. I have my gripes, but they’re minor: The 400-nit screen could be a little brighter, the four-speaker audio system doesn’t sound quite as rich as current MacBook Pros, and my accidental presses of the Page Up and Page Down keys above the arrows really get on my nerves. These quibbles aren’t deal-breakers, though for the ZBook’s price I wish HP had solved some of them.

The big thing you’re paying for with the ZBook Ultra is that top-end Strix Halo APU, which is so far only found in $2,000+ computers and a sicko-level gaming handheld, though there will be cut-down versions coming to cheaper gaming laptops this year.

The flagship 395 chip in the ZBook offers speedy performance for mixed-use work and enough battery life to eke out an eight-hour workday filled with Chrome tabs and web apps (with power-saving measures). I burned through battery in Adobe Lightroom Classic, but even though Strix Halo is less powerful when disconnected from wall power, the ZBook didn’t get bogged down. I blazed through a hefty batch edit of 47-megapixel RAW images without any particularly long waits on things like AI denoise or automated masking adjustments.

The ZBook stays cool and silent during typical use; pushing it under heavy loads only yields a little warmth in its center and a bit of tolerable fan noise that’s easily drowned out by music, a video, or a game at normal volume.

This isn’t a gaming-focused laptop any more than a MacBook Pro is, as its huge pool of shared memory and graphics cores are meant for workstation duties. However, this thing can game. I spent an entire evening playing Battlefield 6 with friends, with Discord and Chrome open in the background, and the whole time it averaged 70 to 80fps in 1920 x 1200 resolution with Medium preset settings and FSR set to Balanced mode — with peaks above 100fps. Running it at the native 2880 x 1800 got a solid 50-ish fps that’s fine for single-player.
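For a rough sense of why the frame rate drops at native resolution, here is a quick back-of-the-envelope calculation in Python. The two resolutions are the ones from my testing; the variable names are just for illustration, and frame rate rarely scales in a straight line with pixel count, so treat this as context rather than a prediction.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels the GPU has to render per frame at a given resolution."""
    return width * height


native = pixel_count(2880, 1800)    # the panel's native resolution
reduced = pixel_count(1920, 1200)   # the resolution used for the 70 to 80fps result
ratio = native / reduced

print(f"native: {native:,} pixels")
print(f"reduced: {reduced:,} pixels")
print(f"native renders {ratio:.2f}x as many pixels per frame")
```

Native resolution pushes 2.25 times as many pixels per frame, so landing at 50-ish fps instead of 70 to 80 is a smaller hit than the raw pixel math alone would suggest.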

Intel’s new Panther Lake chips also have great integrated graphics for gaming, while being more power-efficient. But Strix Halo edges out Panther Lake in multi-core tasks and graphics, with the flagship 395 version proving as capable as a laptop RTX 4060 discrete GPU. AMD’s beefy mobile chips have also proven great for Linux if you’re looking to get away from Windows.

| Benchmark | HP ZBook Ultra G1a / Ryzen AI Max Plus Pro 395 (Strix Halo) / 128GB / 2TB | Asus Zenbook Duo / Intel Core Ultra X9 388H (Panther Lake) / 32GB / 1TB | MacBook Pro 14 / Apple M5 / 16GB / 1TB | MacBook Pro 16 / Apple M4 Pro / 48GB / 2TB | Asus ROG Flow Z13 / AMD Ryzen AI Max Plus 395 (Strix Halo) / 32GB / 1TB | Framework Desktop / AMD Ryzen AI Max Plus 395 (Strix Halo) / 128GB / 1TB |
|---|---|---|---|---|---|---|
| CPU cores | 16 | 16 | 10 | 14 | 16 | 16 |
| Graphics cores | 40 | 12 | 10 | 20 | 40 | 40 |
| Geekbench 6 CPU Single | 2826 | 3009 | 4208 | 3976 | 2986 | 2961 |
| Geekbench 6 CPU Multi | 18125 | 17268 | 17948 | 22615 | 19845 | 17484 |
| Geekbench 6 GPU (OpenCL) | 85139 | 56839 | 49059 | 70018 | 80819 | 86948 |
| Cinebench 2024 Single | 113 | 129 | 200 | 179 | 116 | 115 |
| Cinebench 2024 Multi | 1614 | 983 | 1085 | 1744 | 1450 | 1927 |
| PugetBench for Photoshop | 10842 | 8773 | 12354 | 12374 | 10515 | 10951 |
| PugetBench for Premiere Pro (version 2.0.0+) | 78151 | 54920 | 71122 | Not tested | Not tested | Not tested |
| Premiere 4K Export (shorter time is better) | 2 minutes, 39 seconds | 3 minutes, 3 seconds | 3 minutes, 14 seconds | 2 minutes, 13 seconds | Not tested | 2 minutes, 34 seconds |
| Blender Classroom test (seconds, lower is better) | 154 | 61 | 44 | Not tested | Not tested | 135 |
| Sustained SSD reads (MB/s) | 6969.04 | 6762.15 | 7049.45 | 6737.84 | 6072.58 | Not tested |
| Sustained SSD writes (MB/s) | 5257.17 | 5679.41 | 7317.6 | 7499.56 | 5403.13 | Not tested |
| 3DMark Time Spy (1080p) | 13257 | 9847 | Not tested | Not tested | 12043 | 17620 |
| Price as tested | $4,689 | $2,299.99 | $1,949 | $3,349 | $2,299.99 | $2,459 |

In addition to Windows 11’s upsells and nagging notifications, the ZBook also has HP’s Wolf Security, designed for deployment on an IT-managed fleet of company laptops. For someone not using this as a work-managed device, its extra layers of protection may be tolerable, but they’re annoying. They range from warning you about files from an “untrusted location” (fine) to pop-ups when plugging in a non-HP USB-C charger (infuriating). You can turn off and uninstall all of this, same as you can for the bloatware AI Companion and Support Assistant apps, but it’s part of what HP charges for on its Z workstation line.

You don’t need to spend this kind of money on a kitted-out ZBook Ultra G1a unless you do the kind of specialized computing (local AI models, mathematical simulations, 3D rendering, etc.) it’s designed for. There’s a more attainable configuration, frequently on sale for around $2,500, but its 12-core CPU, lower-specced GPU, and 64GB of shared memory are a dip in performance.

If you’re mostly interested in gaming, an Asus ROG Zephyrus G14 or even a Razer Blade 16 makes a hell of a lot more sense. For about the price of our ZBook Ultra review unit, the Razer gets you an RTX 5090 GPU, with much more powerful gaming performance, while the more modest ROG Zephyrus G14 with an RTX 5060 gets you comparable gaming performance to the ZBook Ultra in a similar form factor for nearly $3,000 less. The biggest knock against those gaming laptops compared to the ZBook is that their fans get much louder under load.

And while it’s easy to think of a MacBook Pro as the lazy answer to all computing needs, it still should be said: If you don’t mind macOS, you can get a whole lot more (non-gaming) performance from an M4 Pro / M4 Max MacBook Pro. Even sticking with Windows and integrated graphics, the Asus Zenbook Duo with Panther Lake at $2,300 is a deal by comparison, once it launches.

At $4,700, this is a specific machine for specialized workloads. It’s a travel-friendly 14-inch that can do a bit of everything, but it’s a high price for a jack of all trades if you’re spending your own money. The ZBook piqued my interest because it’s one of the earliest examples of Strix Halo in a conventional laptop. After using it, I’m even more excited to see upcoming models at more down-to-earth prices.

2025 HP ZBook Ultra G1a specs (as reviewed)

- Display: 14-inch (2880 x 1800) 120Hz OLED touchscreen

- CPU: AMD Ryzen AI Max Plus Pro 395 (Strix Halo)

- RAM: 128GB LPDDR5x memory, shared with the GPU

- Storage: 2TB PCIe 4.0 M.2 NVMe SSD

- Webcam: 5-megapixel with IR and privacy shutter

- Connectivity: Wi-Fi 7, Bluetooth 5.4

- Ports: 2x Thunderbolt 4 / USB-C (up to 40Gbps with Power Delivery and DisplayPort), 1x USB-C 3.2 Gen 2, 1x USB-A 3.2 Gen 2, HDMI 2.1, 3.5mm combo audio jack

- Biometrics: Windows Hello facial recognition, power button with fingerprint reader

- Weight: 3.46 pounds / 1.57kg

- Dimensions: 12.18 x 8.37 x 0.7 inches / 309.37 x 212.60 x 17.78mm

- Battery: 74.5Whr

- Price: $4,689

Photography by Antonio G. Di Benedetto / The Verge

-

Politics1 week ago

Politics1 week agoWhite House says murder rate plummeted to lowest level since 1900 under Trump administration

-

Alabama6 days ago

Alabama6 days agoGeneva’s Kiera Howell, 16, auditions for ‘American Idol’ season 24

-

Politics1 week ago

Politics1 week agoTrump unveils new rendering of sprawling White House ballroom project

-

San Francisco, CA1 week ago

San Francisco, CA1 week agoExclusive | Super Bowl 2026: Guide to the hottest events, concerts and parties happening in San Francisco

-

Ohio1 week ago

Ohio1 week agoOhio town launching treasure hunt for $10K worth of gold, jewelry

-

Culture1 week ago

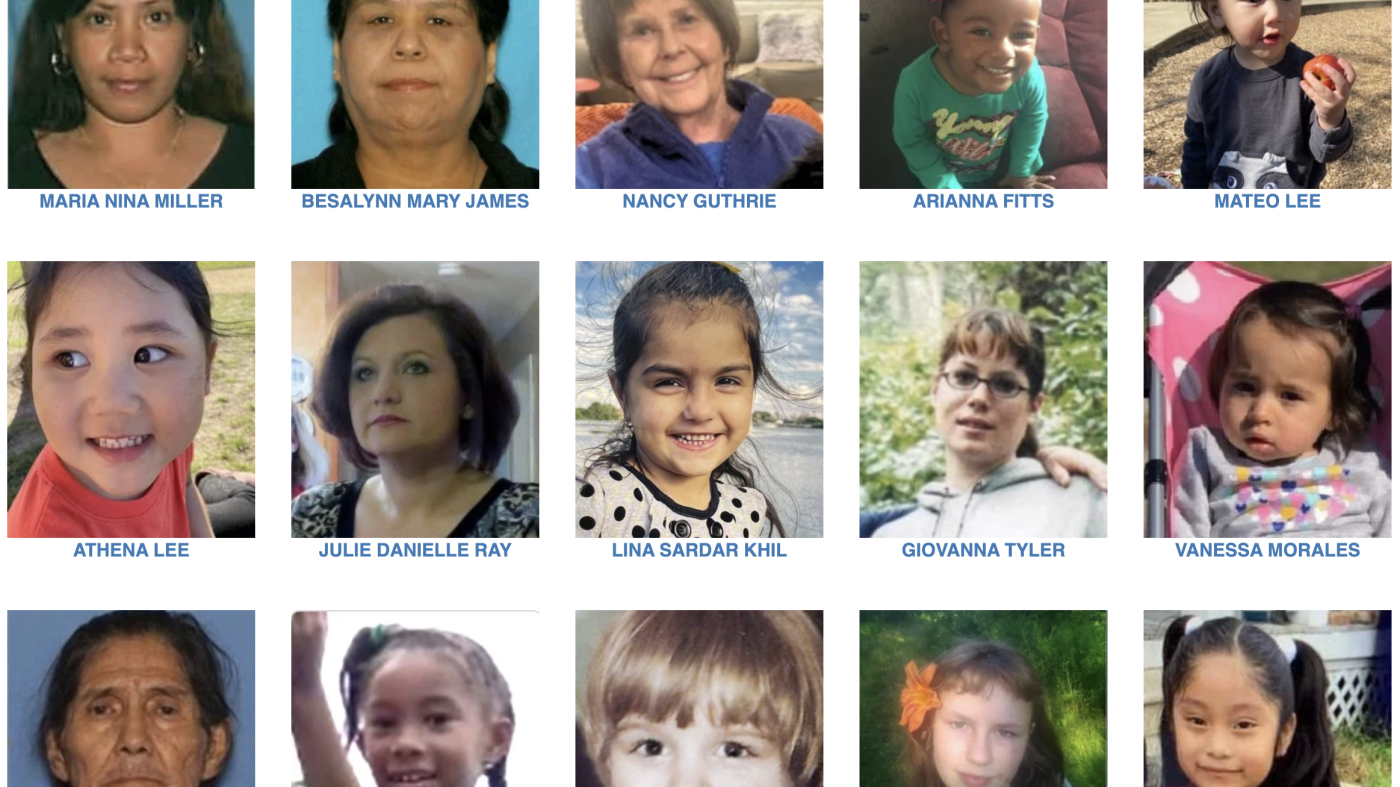

Culture1 week agoAnnotating the Judge’s Decision in the Case of Liam Conejo Ramos, a 5-Year-Old Detained by ICE

-

Culture1 week ago

Culture1 week agoIs Emily Brontë’s ‘Wuthering Heights’ Actually the Greatest Love Story of All Time?

-

News1 week ago

News1 week agoThe Long Goodbye: A California Couple Self-Deports to Mexico