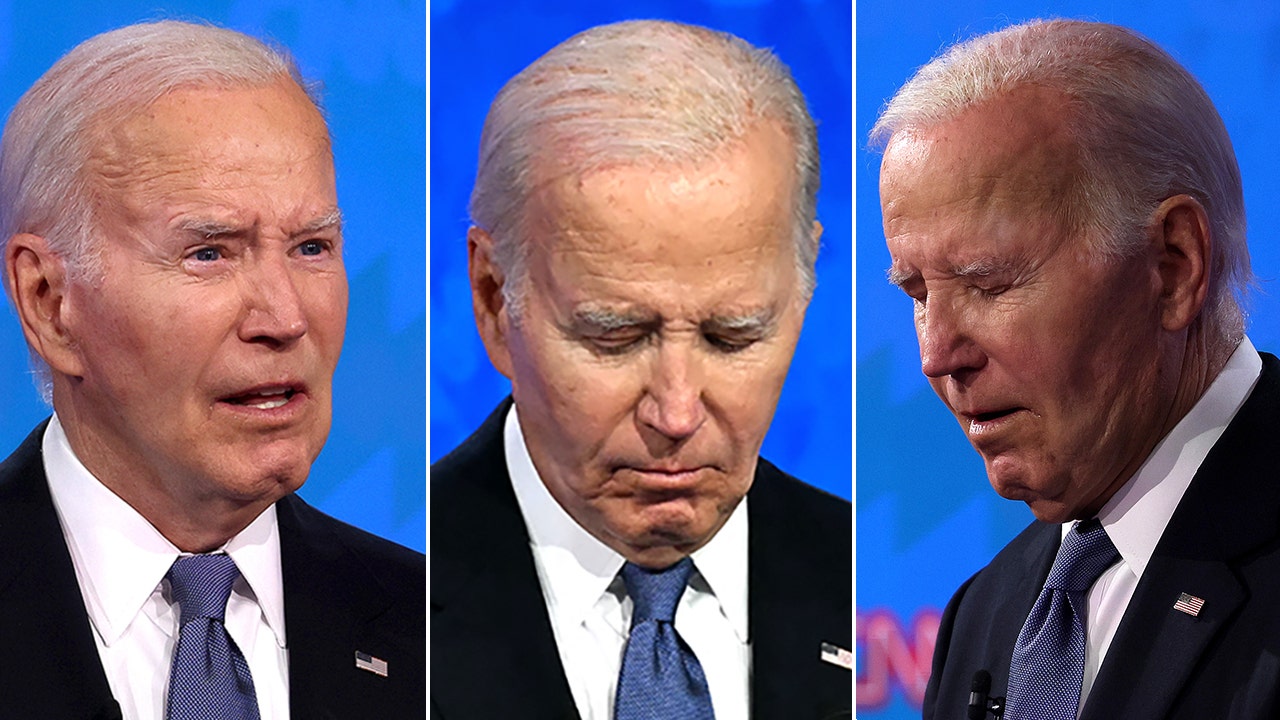

A video of Elizabeth Warren saying Republicans shouldn’t vote went viral in 2023. But it wasn’t Warren. That video of Ron DeSantis wasn’t the Florida governor, either. And nope, Pope Francis was not wearing a white Balenciaga coat.

Technology

Watermarking the future

Generative AI has made it easier to create deepfakes and spread them around the internet. One of the most common proposed solutions involves the idea of a watermark that would identify AI-generated content. The Biden administration has made a big deal out of watermarks as a policy solution, even specifically mandating tech companies to find ways to identify AI-generated content. The president’s executive order on AI, released in November, was built on commitments from AI developers to figure out a way to tag content as AI generated. And it’s not just coming from the White House — legislators, too, are looking at enshrining watermarking requirements as law.

Watermarking can’t be a panacea. For one thing, most systems simply don’t have the capacity to tag text the way they can tag visual media. Still, people are familiar enough with watermarks that the idea of watermarking an AI-generated image feels natural.

Pretty much everyone has seen a watermarked image. Getty Images, which distributes licensed photos taken at events, uses a watermark so ubiquitous and so recognizable that it is its own meta-meme. (In fact, the watermark is now the basis of Getty’s lawsuit against the AI company Stability AI, with Getty alleging that Stability AI must have taken its copyrighted content since its image generator reproduces the Getty watermark in its output.) Of course, artists were signing their works long before digital media or even the rise of photography, in order to let people know who created the painting. But watermarking itself, according to A History of Graphic Design, began during the Middle Ages, when monks would change the thickness of the printing paper while it was wet and add their own mark. Digital watermarking rose in the ’90s as digital content grew in popularity. Companies and governments began adding tags (hidden or otherwise) to make it easier to track ownership, copyright, and authenticity.

Watermarks will, as before, still denote who owns and created the media that people are looking at. But as a policy solution for the problem of deepfakes, this new wave of watermarks would, in essence, tag content as either AI or human generated. Adequate tagging from AI developers would, in theory, also show the provenance of AI-generated content, thus additionally addressing the question of whether copyrighted material was used in its creation.

Tech companies have taken the Biden directive and are slowly releasing their AI watermarking solutions. Watermarking may seem simple, but it has one significant weakness: a watermark pasted on top of an image or video can be easily removed via photo or video editing. The challenge becomes, then, to make a watermark that Photoshop cannot erase.

Companies like Adobe and Microsoft — members of the industry group Coalition for Content Provenance and Authenticity, or C2PA — have adopted Content Credentials, a standard that adds features to images and videos of its provenance. Adobe has created a symbol for Content Credentials that gets embedded in the media; Microsoft has its own version as well. Content Credentials embeds certain metadata — like who made the image and what program was used to create it — into the media; ideally, people will be able to click or tap on the symbol to look at that metadata themselves. (Whether this symbol can consistently survive photo editing is yet to be proven.)
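
To make the idea of embedded provenance metadata concrete, here is a minimal sketch in Python. It is not the Content Credentials format or any C2PA tooling; the function names, the manifest fields, and the shared signing key are all assumptions made for illustration (real systems rely on certificate-based signatures rather than a shared secret). It only shows the general principle: a record of who made the image and with what tool is tied to a hash of the image's exact bytes, so a mismatch becomes detectable.

```python
import hashlib
import hmac
import json

# Hypothetical signing key for this sketch only. Real provenance systems
# use certificate-based signatures, not a shared secret like this one.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(image_bytes: bytes, creator: str, tool: str) -> dict:
    """Build a provenance record bound to the exact bytes of the image."""
    claim = {
        "creator": creator,
        "tool": tool,
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: dict) -> bool:
    """Return True only if the record is untampered and matches the image."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the metadata itself was altered
    actual_hash = hashlib.sha256(image_bytes).hexdigest()
    return actual_hash == manifest["claim"]["content_sha256"]


original = b"stand-in for real image bytes"
manifest = make_manifest(original, creator="Example Studio", tool="Example Generator")

print(verify_manifest(original, manifest))            # True
print(verify_manifest(original + b"edit", manifest))  # False
```

Note what the sketch does not do: it cannot stop someone from simply stripping the record and sharing the image without it, which is the weakness the parenthetical above points to.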

Meanwhile, Google has said it’s currently working on what it calls SynthID, a watermark that embeds itself into the pixels of an image. SynthID is invisible to the human eye, but still detectable via a tool. Digimarc, a software company that specializes in digital watermarking, also has its own AI watermarking feature; it adds a machine-readable symbol to an image that stores copyright and ownership information in its metadata.
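
For a rough sense of how a mark can live inside the pixels themselves, here is a toy sketch in Python using least-significant-bit embedding. To be clear, this is a classic textbook technique swapped in for illustration, not how SynthID or Digimarc work; the function names, the 8-bit tag, and the pixel values are all hypothetical. Each pixel's brightness changes by at most one level out of 256, which the eye will not notice, yet a tool that knows where to look can read the tag back.

```python
def embed_bits(pixels: list[int], bits: list[int]) -> list[int]:
    """Hide one bit in the lowest bit of each pixel value (0 to 255)."""
    if len(bits) > len(pixels):
        raise ValueError("not enough pixels to hold the watermark")
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract_bits(pixels: list[int], count: int) -> list[int]:
    """Read the lowest bit back out of the first `count` pixels."""
    return [p & 1 for p in pixels[:count]]


WATERMARK = [1, 0, 1, 1, 0, 0, 1, 0]                 # hypothetical 8-bit tag
image = [200, 13, 77, 254, 90, 31, 128, 64, 5, 250]  # toy grayscale pixels

marked = embed_bits(image, WATERMARK)
max_change = max(abs(a - b) for a, b in zip(image, marked))
print(max_change)                                         # 1
print(extract_bits(marked, len(WATERMARK)) == WATERMARK)  # True

# A trivial edit (brightening every pixel by one level) wipes out the tag.
brightened = [min(p + 1, 255) for p in marked]
print(extract_bits(brightened, len(WATERMARK)) == WATERMARK)  # False
```

The last line is the catch: a naive scheme like this one does not survive even a slight edit, which is why commercial systems aim for marks that are far harder to disturb.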

All of these attempts at watermarking look to either make the watermark unnoticeable to the human eye or punt the hard work over to machine-readable metadata. It’s no wonder: this approach is the most surefire way information can be stored without it being removed, and encourages people to look closer at the image’s provenance.

That’s all well and good if what you’re trying to build is a copyright detection system, but what does that mean for deepfakes, where the problem is that fallible human eyes are being deceived? Watermarking puts the burden on the consumer, relying on an individual’s sense that something isn’t right for information. But people generally do not make it a habit to check the provenance of anything they see online. Even if a deepfake is tagged with telltale metadata, people will still fall for it — we’ve seen countless times that when information gets fact-checked online, many people still refuse to believe the fact-checked information.

Experts feel a content tag is not enough to prevent disinformation from reaching consumers, so why would watermarking work against deepfakes?

The best thing you can say about watermarks, it seems, is that at least it’s anything at all. And due to the sheer scale of how much AI-generated content can be quickly and easily produced, a little friction goes a long way.

After all, there’s nothing wrong with the basic idea of watermarking. Visible watermarks signal authenticity and may encourage people to be more skeptical of media without one. And if a viewer does find themselves curious about authenticity, watermarks directly provide that information.

Watermarking can’t be a perfect solution for the reasons I’ve listed (and besides that, researchers have been able to break many of the watermarking systems out there). But it works in tandem with a growing wave of skepticism toward what people see online. I have to confess when I began writing this, I’d believed that it’s easy to fool people into believing really good DALL-E 3 or Midjourney photos were made by humans. However, I realized that discourse around AI art and deepfakes has seeped into the consciousness of many chronically online people. Instead of accepting magazine covers or Instagram posts as authentic, there’s now an undercurrent of doubt. Social media users regularly investigate and call out brands when they use AI. Look at how quickly internet sleuths called out the opening credits of Secret Invasion and the AI-generated posters in True Detective.

It’s still not an excellent strategy to rely on a person’s skepticism, curiosity, or willingness to find out if something is AI-generated. Watermarks can do good, but there has to be something better. People are more dubious of content, but we’re not fully there yet. Someday, we might find a solution that conveys something is made by AI without hoping the viewer wants to find out if it is.

For now, it’s best to learn to recognize if a video isn’t really of a politician.

Here’s your first look at Amazon’s Like a Dragon: Yakuza

Amazon says that the show “showcases modern Japan and the dramatic stories of these intense characters, such as the legendary Kazuma Kiryu, that games in the past have not been able to explore.” Kiryu will be played by Ryoma Takeuchi, while Kento Kaku also stars as Akira Nishikiyama. The series is directed by Masaharu Take.

Like a Dragon: Yakuza starts streaming on Prime Video on October 24th with its first three episodes.

Exciting AI tools and games you can try for free

I’m not an artist. My brain just does not work that way. I tried to learn Photoshop but gave up. Now, I create fun images using AI.

Some AI tech is kind of freaky (like this brain-powered robot), but many of the new AI tools out there are just plain fun. Let’s jump into the wide world of freebies that will help you make something cool.

Create custom music tracks

Not everyone is musically inclined, but AI makes it pretty easy to pretend you are. At the very least, you can make a funny tune for a loved one who needs some cheering up.

AI to try: Udio

Perfect for: Experimenting with song styles

Starter prompt: “Heartbreak at the movie theater, ‘80s ballad”

Just give Udio a topic for a song and a genre, and it’ll do the rest. I asked it to write a yacht rock song about a guy who loves sunsets, and it came up with two one-minute clips that were surprisingly good. You can customize the lyrics, too.

Produce quick video clips

The built-in software on our phones does a decent job at editing down the videos we shoot (like you and the family at the beach), but have you ever wished you could make something a little snazzier?

AI to try: Invideo

Perfect for: Quick content creation

Starter prompt: “Cats on a train”

Head to Invideo to produce your very own videos, no experience needed. Your text prompts can be simple, but you’ll get better results if you include more detail.

You can add an AI narration over the top (David Attenborough’s AI voice is just too good). FYI, the free account puts a watermark on your videos, but if you’re just doing it for fun, no biggie.

Draft digital artwork

You don’t need to be an AI whiz skilled at a paid program like Midjourney to make digital art. Here’s an option anyone can try.

AI to try: OpenArt

Perfect for: Illustrations and animations

Starter prompt: “A lush meadow with blue skies”

OpenArt starts you off with a simple text prompt, but you can tweak it in all kinds of funky ways, from the image style to the output size. You can also upload images of your own for the AI to take its cues from and even include pictures of yourself (or friends and family) in the art.

If you’ve caught the AI creative bug and want more of the same, try the OpenArt Sketch to Image generator. It turns your original drawings into full pieces of digital art.

More free AI fun

Maybe creating videos and works of art isn’t your thing. There’s still lots of fun to be had with AI.

- Good time for kids and adults: Google’s Quick, Draw! Try to get the AI to recognize your scribblings before time runs out in this next-gen Pictionary-style game.

- Expose your kid to different languages: Another option from Google, Thing Translator, lets you snap a photo of something to hear the word for it in a different language. Neat!

- Warm up your vocal cords: Freddimeter uses AI to rate how well you can sing like Freddie Mercury. Options include “Don’t Stop Me Now,” “We Are the Champions,” “Bohemian Rhapsody” and “Somebody To Love.”

If you’re not tech-ahead, you’re tech-behind

Award-winning host Kim Komando is your secret weapon for navigating tech.

Copyright 2024, WestStar Multimedia Entertainment. All rights reserved.

There is no fix for Intel’s crashing 13th and 14th Gen CPUs — any damage is permanent

On Monday, it initially seemed like the beginning of the end for Intel’s desktop CPU instability woes — the company confirmed a patch is coming in mid-August that should address the “root cause” of exposure to elevated voltage. But if your 13th or 14th Gen Intel Core processor is already crashing, that patch apparently won’t fix it.

Citing unnamed sources, Tom’s Hardware reports that any degradation of the processor is irreversible, and an Intel spokesperson did not deny that when we asked. Intel is “confident” the patch will keep it from happening in the first place. (As another preventative measure, you should update your BIOS ASAP.) But if your defective CPU has been damaged, your best option is to replace it instead of tweaking BIOS settings to try and alleviate the problems.

And, Intel confirms, too-high voltages aren’t the only reason some of these chips are failing. Intel spokesperson Thomas Hannaford confirms it’s a primary cause, but the company is still investigating. Intel community manager Lex Hoyos also revealed some instability reports can be traced back to an oxidization manufacturing issue that was fixed at an unspecified date last year.

This raises lots of questions. Will Intel recall these chips? Extend their warranty? Replace them no questions asked? Pause sales like AMD just did with its Ryzen 9000? Identify faulty batches with the manufacturing defect?

We asked Intel these questions, and I’m not sure you’re going to like the answers.

Why are these still on sale without so much as an extended warranty?

Intel has not halted sales or clawed back any inventory. It will not do a recall, period. The company is not currently commenting on whether or how it might extend its warranty. It would not share estimates with The Verge of how many chips are likely to be irreversibly impacted, and it did not explain why it’s continuing to sell these chips ahead of any fix.

Intel’s not yet telling us how warranty replacements will work beyond trying customer support again if you’ve previously been rejected. It did not explain how it will contact customers with these chips to warn them about the issue.

But Intel does tell us it’s “confident” that you don’t need to worry about invisible degradation. If you’re not currently experiencing issues, the patch “will be an effective preventative solution for processors already in service.” (If you don’t know if you’re experiencing issues, Intel currently suggests the Robeytech test.)

And, perhaps for the first time, Intel has confirmed just how broad this issue could possibly be. The elevated voltages could potentially affect any 13th or 14th Gen desktop processor that consumes 65W or more power, not just the highest i9-series chips that initially seemed to be experiencing the issue.

Here are the questions we asked Intel and the answers we’ve received by email from Intel’s Hannaford:

How many chips does Intel estimate are likely to be irreversibly impacted by these issues?

Intel Core 13th and 14th Generation desktop processors with 65W or higher base power – including K/KF/KS and 65W non-K variants – could be affected by the elevated voltages issue. However, this does not mean that all processors listed are (or will be) impacted by the elevated voltages issue.

Intel continues validation to ensure that scenarios of instability reported to Intel regarding its Core 13th and 14th Gen desktop processors are addressed.

For customers who are or have been experiencing instability symptoms on their 13th and/or 14th Gen desktop processors, Intel continues advising them to reach out to Intel Customer Support for further assistance. Additionally, if customers have experienced these instability symptoms on their 13th and/or 14th Gen desktop processors but had RMA [return merchandise authorization] requests rejected we ask that they reach out to Intel Customer Support for further assistance and remediation.

Will Intel issue a recall?

Will Intel proactively warn buyers of these chips about the warning signs or that this update is required? If so, how will it warn them?

Intel targets to release a production microcode update to OEM/ODM customers by mid-August or sooner and will share additional details on the microcode patch at that time.

Intel is investigating options to easily identify affected processors on end user systems. In the interim, as a general best practice Intel recommends that users adhere to Intel Default Settings on their desktop processors, along with ensuring their BIOS is up to date.

Has Intel halted sales and / or performed any channel inventory recalls while it validates the update?

Does Intel anticipate the fix will be effective for chips that have already been in service but are not yet experiencing symptoms (i.e., invisible degradation)? Are those CPUs just living on borrowed time?

Intel is confident that the microcode patch will be an effective preventative solution for processors already in service, though validation continues to ensure that scenarios of instability reported to Intel regarding its Core 13th/14th Gen desktop processors are addressed.

Intel is investigating options to easily identify affected or at-risk processors on end user systems.

It is possible the patch will provide some instability improvements to currently impacted processors; however customers experiencing instability on their 13th or 14th Generation desktop processor-based systems should contact Intel customer support for further assistance.

Will Intel extend its warranty on these 13th Gen and 14th Gen parts, and for how long?

Given how difficult this issue was for Intel to pin down, what proof will customers need to share to obtain an RMA? (How lenient will Intel be?)

What will Intel do for 13th Gen buyers after supply of 13th Gen parts runs out? Final shipments were set to end last month, I’m reading.

Intel is committed to making sure all customers who have or are currently experiencing instability symptoms on their 13th and/or 14th Gen desktop processors are supported in the exchange process. This includes working with Intel’s retail and channel customers to ensure end users are taken care of regarding instability symptoms with their Intel Core 13th and/or 14th Gen desktop processors.

What will Intel do for 14th Gen buyers after supply of 14th Gen parts runs out?

Will replacement / RMA’d chips ship with the microcode update preapplied beginning in August? Is Intel still shipping replacement chips ahead of that update?

Intel will be applying the microcode to 13th/14th Gen desktop processors that are not yet shipped once the production patch is released to OEM/ODM partners (targeting mid-August or sooner). For 13th/14th Gen desktop processors already in service, users will need to apply the patch via BIOS update once available.

What, if anything, can customers do to slow or stop degradation ahead of the microcode update?

Intel recommends that users adhere to Intel Default Settings on their desktop processors, along with ensuring their BIOS is up to date. Once the microcode patch is released to Intel partners, we advise users check for the relevant BIOS updates.

Will Intel share specific manufacturing dates and serial number ranges for the oxidized processors so mission-critical businesses can selectively rip and replace?

Intel will continue working with its customers on Via Oxidation-related reports and ensure that they are fully supported in the exchange process.

Why does Intel believe the instability issues do not affect mobile laptop chips?

Intel is continuing its investigation to ensure that reported instability scenarios on Intel Core 13th/14th Gen processors are properly addressed.

This includes ongoing analysis to confirm the primary factors preventing 13th / 14th Gen mobile processor exposure to the same instability issue as the 13th/14th Gen desktop processors.

That’s all we’ve heard from Intel so far, though Hannaford assured us more answers are on the way and that the company is working on remedies.

Again, if your CPU is already damaged, you need to get Intel to replace it, and if Intel won’t do so, please let us know. In the meantime, you’ll want to update your BIOS as soon as possible because your processor could potentially be invisibly damaging itself. And if you know your way around a BIOS, you may want to adjust your motherboard to Intel’s default performance profiles, too.

Lastly, here is that Robeytech video that Intel is recommending to Redditors to potentially help them identify if their chip has an issue. Intel says it’s looking into other ways to identify that, too.
