Business

Anita Hill-led Hollywood Commission wants to change how workers report sexual harassment

In the wake of movie mogul Harvey Weinstein’s 2020 rape trial, a survey of nearly 10,000 workers by the Anita Hill-led Hollywood Commission revealed a sobering result: Few people believed perpetrators would ever be held accountable.

The vast majority, however, were interested in new tools to document incidents and access resources and helplines.

Four years later, the Hollywood Commission is trying to make that request a reality.

On Thursday, the nonprofit organization launched MyConnext, an online resource and reporting tool that will allow workers at five major entertainment business organizations to get help with reporting incidents of harassment, discrimination and abuse.

The website allows those entertainment industry employees to speak with a live ombudsperson, create time-stamped records and submit those reports to their employer or union. (Any entertainment worker can access the site’s resources section to learn more about what it means to report an incident and understand complicating factors such as mandatory arbitration.)

So far, the commission has partnered with the Directors Guild of America, the Writers Guild of America, certain U.S.-based Amazon productions, all U.S.-based Netflix productions and film/TV producer the Kennedy/Marshall Co., founded by filmmakers Kathleen Kennedy and Frank Marshall. The International Alliance of Theatrical Stage Employees is expected to join later this year, according to the commission.

MyConnext is not intended to replace any of these organizations’ individual reporting platforms. Rather, it’s designed to provide an additional option and serve as a one-stop shop for workers seeking help or resources. The commission did not say what the initiative cost.

One key feature of the MyConnext reporting platform is called “hold for match,” which allows a worker to fill out a record of an incident and instructs the system not to send the report to one of the partner organizations until another report about the same person is detected. At that time, both reports will be sent.

“It is very difficult for an individual to come forward,” said Hill, president of the Hollywood Commission, which was founded in October 2017 to help eradicate abuse in the entertainment industry. “Let’s say, for example, Harvey Weinstein: It was very difficult to prove a case when there was only one person because there was a tendency to turn it into a so-called ‘he-said, she-said’ situation.”

With this feature, however, employers could potentially recognize a pattern of abuse. And that, Hill said, could be a game changer.

“We ultimately hope that [the tool] will elevate the level of accountability, and accountability is ultimately what I think everybody wants,” said Hill. The commission led the 2020 survey, along with a follow-up survey this year that found a similar desire for harassment reporting resources.

“Information, really, is power,” said Hill.

Advocates say such resources have become even more crucial amid what they describe as a pullback in Hollywood’s promised efforts to create a more inclusive industry for women. Fears of backsliding escalated after Weinstein’s New York sex assault conviction was overturned last month by a state appeals court, which ordered a new trial. Weinstein’s conviction in California remains.

“What’s so important even now, in light of the reversal of a conviction, is making sure that individuals who have suffered harm get to choose what makes the most sense for them,” said Malia Arrington, executive director of the Hollywood Commission. “You need to be informed about what all of your different choices may mean to make sure that you’re entering into whatever path with eyes wide open.”

With that in mind, the platform has a multipronged approach. The resources section helps workers understand their options, including the general process for filing a complaint, as well as where to access counseling and emotional or employment support.

Members of the participating organizations also have access to a secure platform through MyConnext that lets them record an incident — regardless of whether they submit it as an official report — send anonymous messages, speak with an independent ombudsperson and submit reports of abuse.

Speaking with an advocate allows workers to get their questions answered confidentially and by a live human, said Lillian Rivera, the ombudsperson who is employed by MyConnext.

“It’s a human that’s going to listen to folks, who’s going to be nonjudgmental, who is going to be supportive and is going to be able to point people toward all of their options, and really put the power in the hands of the worker so they can make the decision that’s best for them,” Rivera said.

After 57 years of open seating, is Southwest changing its brand?

Jim Kingsley of Orange County, who recently flew Southwest on a two-leg journey from Minneapolis to Los Angeles, likened the budget-friendly airline to In-N-Out Burger.

Both brands are affordable, consistent and more simplistic compared with competitors, Kingsley said.

“They’re not trying to offer all the things everybody else offers,” he said, “but they get the quality right and it’s a good value.”

Change, however, is in the air.

Southwest, which since its founding nearly 60 years ago has positioned itself in the cutthroat airline industry as an easygoing, egalitarian option, upended that guiding ethos this week with word that it would get rid of its famous first-come, first-seated policy in favor of traditional assigned seats and a premium class option. The airline will also offer overnight red-eye flights in five markets, including Los Angeles.

Experts say the changes, especially the switch to assigned seating, are a smart move and will appeal to many as the company tries to stabilize its precarious finances, which included a 46% drop in second-quarter profit from a year earlier, to $367 million. But it remains to be seen whether Southwest will pay an intangible cost in making the moves: Will it be able to hold on to its quirky identity or will it put off loyal customers, and in doing so, become just another airline?

“You’re going to hear nostalgia about this, but I think it’s very logical and probably something the company should have done years ago,” said Duane Pfennigwerth, a global airlines analyst at Evercore.

“In many markets away from core Southwest markets, we think open seating is a boarding process that many people avoid,” he said.

That is all well and good, but “I didn’t ask for these changes,” Kingsley said. “Cost and quality is what I care about.”

Open seating has its pros and cons, Kingsley said, though he’s generally a fan. On his trip to Los Angeles, his group wasn’t able to get seats all together. But he likes that preferred seats are available on a first-come, first-served basis, instead of being offered for a high price.

Eighty percent of Southwest customers and 86% of potential customers prefer an assigned seat, the airline said in a statement.

“By moving to an assigned seating model, Southwest expects to broaden its appeal and attract more flying from its current and future customers,” the airline said.

An even bigger draw of Southwest, according to Kingsley, is its policy of including two free checked bags per ticket. This perk often makes Southwest a better bargain, especially for longer trips or bigger groups, he said.

The free bags are a big deal to customers, experts said, and contribute to the airline’s consumer-friendly brand. The airline hasn’t indicated that it plans to change its bag policy.

“Southwest has always had a really good, positive vibe,” said Alan Fyall, chair of Tourism Marketing at the University of Central Florida’s College of Hospitality. “It’s free bags, good prices and point-to-point routes. That’s what they stand for and that’s what people love about them.”

Southwest’s change to assigned seating doesn’t mean it is no longer a budget-friendly airline, Fyall said, but it does differentiate the carrier from the lowest-cost, lowest-amenity options such as Frontier and Spirit.

The move will also require Southwest to update all or a portion of its fleet to include first-class seats. Currently, all seats on a Southwest flight are identical. Fyall said it’s worth the investment.

It’s an appropriate time for Southwest to make adjustments, said Chris Hydock, an assistant professor at Tulane University’s Freeman School of Business.

“They’ve not been profitable the last couple of quarters and they’ve had some activist investor pressure to increase their revenue,” he said.

Costs such as wages and maintenance have risen across the airline industry even as travel increased after the pandemic. Southwest saw a net loss of $231 million in the first quarter of 2024. Wall Street analysts estimate that assigned, premium seating could boost revenue by $2 billion per year.

“This is one of the options where they could potentially increase their revenue and do something that a lot of consumers have a strong preference for anyway,” Hydock said.

For Southwest’s changes to pay off, it has to stick to its roots when it comes to its culture and brand, experts and travelers agreed.

“I love Southwest being different,” Kingsley said. “If they’re trying to be like the other airlines, I think they’re shooting themselves in the foot.”

Column: 99 years after the Scopes 'monkey trial,' religious fundamentalism still infects our schools

Almost a century has passed since a Tennessee schoolteacher was found guilty of teaching evolution to his students. We’ve come a long way since that happened on July 21, 1925. Haven’t we?

No, not really.

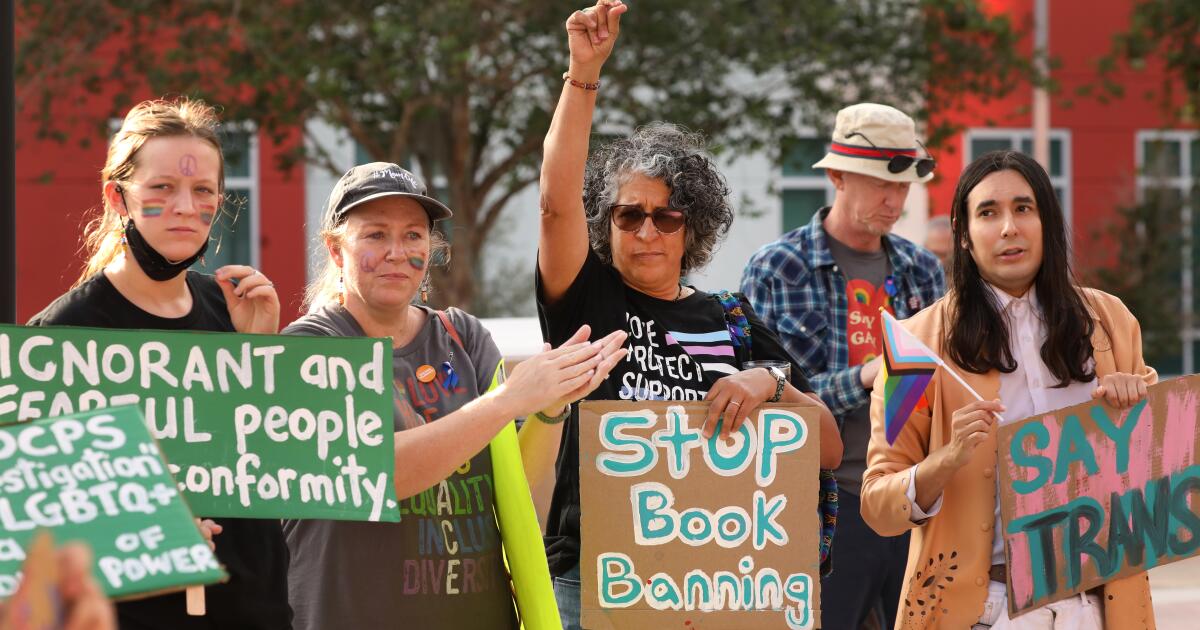

The Christian fundamentalism that begat the state law that John Scopes violated has not gone away. It regularly resurfaces in American politics, including today, when efforts to ban or dilute the teaching of evolution and other scientific concepts are part and parcel of a nationwide book-banning campaign, augmented by an effort to whitewash the teaching of American history.

The trial in Dayton, Tenn., that supposedly placed evolution in the dock is seen as a touchstone of the recurrent battle between science and revelation. It is and it isn’t. But the battle is very real.

Let’s take a look.

The Scopes trial was one of the first, if not the very first, to be dubbed “the trial of the century.”

And why not? It pitted the fundamentalist William Jennings Bryan — three-time Democratic presidential candidate, former congressman and secretary of State, once labeled “the great commoner” for his faith in the judgment of ordinary people, but at 65 showing the effects of age — against Clarence Darrow, the most storied defense counsel of his time.

The case has retained its hold on the popular imagination chiefly thanks to “Inherit the Wind,” an inescapably dramatic reconstruction — actually a caricature — of the trial that premiered in 1955, when the play was written as a hooded critique of McCarthyism.

Most people probably know it from the 1960 film version, which starred Fredric March, Spencer Tracy and Gene Kelly as the characters meant to portray Bryan, Darrow and H.L. Mencken, the acerbic Baltimore newspaperman whose coverage of the trial is a genuine landmark of American journalism.

What all this means is that the actual case has become encrusted by myth over the ensuing decades.

One persistent myth is that the anti-evolution law and the trial arose from a focused groundswell of religious fanaticism in Tennessee. In fact, they could be said to have occurred — to repurpose a phrase usually employed to describe how Britain acquired her empire — in “a fit of absence of mind.”

The Legislature passed the measure idly as a meaningless gift to its drafter, John W. Butler, a lay preacher who hadn’t passed any other bill. (The bill “did not amount to a row of pins; let him have it,” a legislator commented, according to Ray Ginger’s definitive 1958 book about the case, “Six Days or Forever?”)

No one bothered to organize an opposition. There was no legislative debate. The lawmakers assumed that Gov. Austin Peay would simply veto the bill. The president of the University of Tennessee disdained it, but kept mum because he didn’t want the issue to complicate a plan for university funding then before the Legislature.

Peay signed the bill, asserting that it was an innocuous law that wouldn’t interfere with anything being taught in the state’s schools. The law “probably … will never be applied,” he said. Bryan, who approved of the law as a symbolic statement of religious principle, had advised legislators to leave out any penalty for violation, lest it be declared unconstitutional.

The lawmakers, however, made it a misdemeanor punishable by a fine for any teacher in the public schools “to teach any theory that denies the story of the Divine Creation of man as taught in the Bible, and to teach instead that man had descended from a lower order of animal.”

Scopes’ arrest and trial proceeded in similarly desultory manner. Scopes, a school football coach and science teacher filling in for an ailing biology teacher, assigned the students to read a textbook that included evolution. He wasn’t a local and didn’t intend to set down roots in Dayton, but his parents were socialists and agnostics, so when a local group sought to bring a test case, he agreed to be the defendant.

The play and movie of “Inherit the Wind” portray the townspeople as religious fanatics, except for a couple of courageous individuals. In fact, they were models of tolerance. Even Mencken, who came to Dayton expecting to find a squalid backwater, instead discovered “a country town full of charm and even beauty.”

Dayton’s civic boosters paid little attention to the profound issues ostensibly at play in the courthouse; they saw the trial as a sort of economic development project, a tool for attracting new residents and businesses to compete with the big city nearby, Chattanooga. They couldn’t have been happier when Bryan signed on as the chief prosecutor and a local group solicited Darrow for the defense.

“I knew that education was in danger from the source that has always hampered it — religious fanaticism,” Darrow wrote in his autobiography. “My only object was to focus the attention of the country on the programme of Mr. Bryan and the other fundamentalists in America.” He wasn’t blind to how the case was being presented in the press: “As a farce instead of a tragedy.” But he judged the press publicity to be priceless.

The press and the local establishment had diametrically opposed visions of what the trial was about. The former saw it as a fight to protect from rubes the theory of evolution, specifically that humans descended from lower orders of primate, hence the enduring nickname of the “monkey trial.” For the judge and jury, it was about a defendant’s violation of a law written in plain English.

The trial’s elevated position in American culture derives from two sources: Mencken’s coverage for the Baltimore Sun, and “Inherit the Wind.” Notwithstanding his praise for Dayton’s “charm,” Mencken scorned its residents as “yokels,” “morons” and “ignoramuses,” trapped by their “simian imbecility” into swallowing Bryan’s “theologic bilge.”

The play and movie turned a couple of courtroom exchanges into moments of high drama, notably Darrow’s calling Bryan to the witness stand to testify to the truth of the Bible, and Bryan’s humiliation at his hands.

In truth, that exchange was a late-innings sideshow of no significance to the case. Scopes was plainly guilty of violating the law and his conviction preordained. But it was overturned on a technicality (the judge had fined him $100, more than was authorized by state law), leaving nothing for the pro-evolution camp to bring to an appellate court. The whole thing fizzled away.

The idea that, despite Scopes’ conviction, the trial was a defeat for fundamentalism lived on. Scopes was one of its adherents. “I believe that the Dayton trial marked the beginning of the decline of fundamentalism,” he said in a 1965 interview. “I feel that restrictive legislation on academic freedom is forever a thing of the past, … that the Dayton trial had some part in bringing to birth this new era.”

That was untrue then, and it is untrue now. When the late biologist and science historian Stephen Jay Gould quoted that interview in a 1981 essay, fundamentalist politics were again on the rise. Gould observed that Jerry Falwell had taken up the mountebank’s mission of William Jennings Bryan.

It was harder then to exclude evolution from the class curriculum entirely, Gould wrote, but its enemies had turned to demanding “‘equal time’ for evolution and for old-time religion masquerading under the self-contradictory title of ‘scientific creationism.’”

For the evangelical right, Gould noted, “creationism is a mere stalking horse … in a political program that would ban abortion, erase the political and social gains of women … and reinstitute all the jingoism and distrust of learning that prepares a nation for demagoguery.”

And here we are again. Measures banning the teaching of evolution outright have not lately been passed or introduced at the state level. But those that advocate teaching the “strengths and weaknesses” of scientific hypotheses are common — language that seems innocuous, but that educators know opens the door to undermining pupils’ understanding of science.

In some red states, legislators have tried to bootstrap regulations aimed at narrowing scientific teaching onto laws suppressing discussions of race and gender in the classrooms and stripping books touching those topics from school libraries and public libraries.

The most ringing rejection of creationism as a public school topic was sounded in 2005 by a federal judge in Pennsylvania, who ruled that “intelligent design” — creationism by another name — “cannot uncouple itself from its creationist, and thus religious, antecedents” and therefore is unconstitutional as a topic in public schools. Yet only last year, a bill to allow “intelligent design” to be taught in the state’s public schools was overwhelmingly passed by the state Senate. (It died in a House committee.)

Oklahoma’s reactionary state superintendent of education, Ryan Walters, recently mandated that the Bible should be taught in all K-12 schools, and that a physical copy be present in every classroom, along with the Ten Commandments, the Declaration of Independence and the Constitution. “These documents are mandatory for the holistic education of students in Oklahoma,” he ordered.

It’s clear that these sorts of policies are broadly unpopular across much of the nation: In last year’s state and local elections, book-banners and other candidates preaching a distorted vision of “parents’ rights” to undermine educational standards were soundly defeated.

That doesn’t seem to matter to the culture warriors who have expanded their attacks on race and gender teaching to science itself. They’re playing a long game. They conceal their intentions with vague language in laws that force teachers to question whether something they say in class will bring prosecutors to the schoolhouse door.

Gould detected the subtext of these campaigns. So did Mencken, who had Bryan’s number. Crushed by his losses in the presidential campaigns of 1896, 1900 and 1908, Bryan had launched a new campaign of cheap religiosity, Mencken wrote.

“This old buzzard,” Mencken wrote, “having failed to raise the mob against its rulers, now prepares to raise it against its teachers.” Bryan understood instinctively that the way to turn American society from a democracy to a theocracy was to start by destroying its schools. His heirs, right up to the present day, know it too.

NASA identifies Starliner problems but sets no date for astronauts' return to Earth

After weeks of testing, NASA and Boeing officials said Thursday they have identified problems with the Starliner’s propulsion system that have kept two astronauts at the International Space Station for seven weeks — but they didn’t set a date to return them to Earth.

Ground testing conducted on thrusters that maneuver Boeing’s capsule in space found that Teflon used to control the flow of rocket propellant eroded under high heat conditions, while different seals that control helium gas showed bulging, they said.

The testing was conducted after the thrusters malfunctioned when Starliner docked with the space station on June 6 and a helium leak that was detected before launch worsened on the trip to the station. The helium pressurizes the propulsion system.

However, officials said the problems should not prevent astronauts Suni Williams and Butch Wilmore from returning to Earth aboard the Starliner capsule, which lifted off on its maiden human test flight June 5 for what was supposed to be an eight-day mission.

“I am very confident we have a good vehicle to bring the crew back with,” Mark Nappi, program manager of Boeing’s Commercial Crew Program, said at a news conference.

NASA and Boeing officials have said previously that the Starliner could transport the astronauts to Earth if there were an emergency aboard the space station, but they opted to conduct the ground tests to ensure a safe, planned return.

Decisions on whether and when to use Starliner or another vehicle will be made by NASA leaders after they are presented next week with all the information collected from the testing, which will include a “hot fire” test of the engines of the Starliner docked at the space station, Nappi said.

Rigorous ground testing conducted at NASA’s White Sands Test Facility on a thruster identical to the ones on the Starliner found that, despite the issues with Teflon degradation, the thruster was able to perform the maneuvers that would be needed to return Starliner to Earth, said Steve Stich, program manager for NASA’s Commercial Crew Program.

Officials also have said that the Starliner still has about 10 times more helium than is needed to bring the capsule back to Earth.

The problems that have cropped up have been an embarrassment for Boeing, which along with SpaceX was given a multibillion-dollar contract in 2014 to service the station with crew and cargo flights after the end of the space shuttle program. Since then, Elon Musk’s Hawthorne-based company has sent more than a half-dozen crews up, while Boeing is still in its testing phase — with the current flight delayed for weeks by the helium leak and other issues that arose even before the thrusters malfunctioned.

Should NASA decide not to bring the crew home on the Starliner, which could still return to Earth remotely, the astronauts could be retrieved by SpaceX’s Crew Dragon capsule, though SpaceX’s workhorse Falcon 9 rocket is currently grounded after a failure this month.

The Russian Soyuz spacecraft also services the station and carries American astronauts.
