A surprise in Boston brought people to Lovejoy Wharf on Thursday.

It was a huge deal in the rap world, as Tyler, the Creator played a 30-minute pop-up show on the roof of a conference building. Fans only had to pay $5 to hear the performer play songs off his latest album, “Chromakopia,” which just dropped on Monday.

The Boston stop was the latest in what seems like a series. He played a surprise show in Atlanta, Georgia, on Tuesday.

Boston Marathon

In our “Why I’m Running” series, Boston Marathon athletes share what’s inspiring them to make the 26.2-mile trek from Hopkinton to Boston. Looking for more race day content? Sign up for Boston.com’s pop-up Boston Marathon newsletter.

Name: Brianna Poehler

City/State: Granby, Mass.

I am running the 2026 Boston Marathon with Miles for Miracles in support of Boston Children’s Hospital. The Boston Marathon is deeply personal to me and my family.

My daughter is a liver transplant survivor, and at just 11 months old, she received a life-saving liver transplant at Boston Children’s Hospital.

What could have been the most devastating chapter of our lives became a story of hope, resilience, and extraordinary care because of the BCH team.

When our daughter was so small and so sick, the doctors, nurses, and staff at Boston Children’s carried us through the unimaginable.

They combined world-class medical expertise with compassion that went far beyond treatment plans and hospital rooms. They cared for our daughter as if she were their own. They supported us as anxious, exhausted parents. They gave us answers when we had questions, and reassurance when we were overwhelmed.

Most importantly, they gave our daughter a second chance at life.

Today, she is thriving because of that gift. Every milestone she reaches is a reminder of the miracle she received and the team that made it possible. Running the Boston Marathon is my way of honoring that gift and saying thank you in the most meaningful way I can.

The marathon is a test of endurance, determination, and heart — qualities I saw in my daughter during her fight and in the Boston Children’s team every single day.

With every mile I run, I will be thinking of her strength, her transplant journey, and the families who are walking similar paths right now.

By running with Miles for Miracles, I hope to raise funds that will support groundbreaking research, life-saving treatments, and compassionate care for children like my daughter. This race is more than 26.2 miles — it is a celebration of survival, gratitude, and hope.

Editor’s note: This entry may have been lightly edited for clarity or grammar.


Charlotte Hornets (31-31, ninth in the Eastern Conference) vs. Boston Celtics (41-20, second in the Eastern Conference)

Boston; Wednesday, 7:30 p.m. EST

BETMGM SPORTSBOOK LINE: Celtics -6.5; over/under is 214.5

BOTTOM LINE: Charlotte is looking to keep its five-game win streak alive when the Hornets take on Boston.

The Celtics are 27-13 against Eastern Conference opponents. Boston is sixth in the NBA with 46.2 rebounds per game, led by Nikola Vucevic averaging 8.8.

The Hornets are 19-21 in conference matchups. Charlotte is 7-8 when it turns the ball over less than its opponents and averages 15.0 turnovers per game.

The Celtics average 15.5 made 3-pointers per game this season, 2.7 more made shots on average than the 12.8 per game the Hornets allow. The Hornets average 16.0 made 3-pointers per game this season, 2.1 more made shots on average than the 13.9 per game the Celtics allow.

TOP PERFORMERS: Jaylen Brown is averaging 29 points, 7.1 rebounds and five assists for the Celtics. Payton Pritchard is averaging 17 points and 5.8 assists over the past 10 games.

Kon Knueppel is averaging 19.2 points, 5.5 rebounds and 3.5 assists for the Hornets. Brandon Miller is averaging 22.7 points, 5.3 rebounds and 3.6 assists over the past 10 games.

LAST 10 GAMES: Celtics: 8-2, averaging 109.4 points, 50.7 rebounds, 27.1 assists, 6.1 steals and 6.4 blocks per game while shooting 45.7% from the field. Their opponents have averaged 98.5 points per game.

Hornets: 7-3, averaging 117.3 points, 47.8 rebounds, 27.4 assists, 8.5 steals and 4.2 blocks per game while shooting 45.6% from the field. Their opponents have averaged 106.2 points.

INJURIES: Celtics: Jayson Tatum: out (Achilles), Neemias Queta: day to day (rest).

Hornets: Coby White: day to day (injury management).

___

The Associated Press created this story using technology provided by Data Skrive and data from Sportradar.

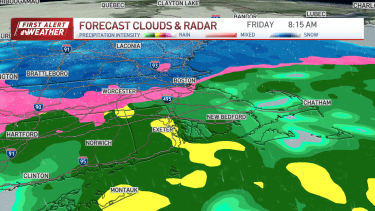

Today is a First Alert weather day. A system to our south is pushing a mix of snow and rain into southern New England through this evening and tonight.

For us here in Greater Boston, expect snow to continue spreading over our area through the afternoon/evening commute. In fact, parts of our area could see 1 to 2 inches of snow accumulation before the sleet and rain move in.

Much of Greater Boston will likely see snow amounts on the lower end. Higher snow amounts are expected toward southern New Hampshire and along and north of outer Route 2. Also, some ice accumulations are possible, up to a tenth of an inch, creating a thin glaze here and there.

Dozens of schools in Connecticut and Massachusetts have already announced early dismissals as a result of the storm.

While this system won’t cripple our area, conditions could still create a mess on the roads during the evening commute through tonight. Be careful while driving. A Winter Weather Advisory remains in effect for parts of our area through early Wednesday morning. High temperatures will be in the mid to upper 30s today. Overnight lows will drop into the low 30s.

We’ll wake up to patchy fog Wednesday morning before the sun returns. High temperatures will be in the upper 40s. We’ll stay in the 40s on Thursday with increasing clouds. But by late Thursday night into Friday, wet weather returns. Some snow could mix with the rain into Friday morning. Highs will be in the upper 30s Friday.

Warmer weather is expected this weekend. Highs will be in the 50s Saturday and possibly near 60 on Sunday.
