At this point, it’s becoming easier to say which AI startups Mark Zuckerberg hasn’t looked at acquiring.

Technology

GTA Online’s next-gen version is prettier and easier to get into

With this week’s launch of the next-gen versions of GTA Online for the Xbox Series X and PS5, Rockstar has made it easier and faster to jumpstart your life of crime in the best-looking and best-playing version of its online open-world mode. All of the same graphical and quality-of-life changes that recently arrived in GTA V, which my colleague Andrew Webster reported on here, can also be found in GTA Online.

Whether you’re a new player or you’re migrating a character from a previous console, one of my favorite improvements is that it’s easier to be funneled into the kind of content you want to play. From the main menu, you can jump into heists, free mode (where you can simply roam around with no immediate objective), or be directed to new content, like “The Contract” missions featuring Dr. Dre and GTA V’s Franklin.

New players will first need to complete a few small, get-your-feet-wet missions before they can access the broader slew of content types. The game keeps out other online players until you reach level 5, which takes about 25 minutes of play. That may annoy some who are hoping to jump in immediately with friends, but it could be key to keeping new players engaged instead of frustrated by repeated kills from more experienced and kitted-out players.

Image: Rockstar Games

These changes provide a clear sense of what GTA Online offers at any given time, and it’s great that Rockstar is delivering the online mode’s heap of content in a more streamlined way. Previously, jumping into GTA Online felt too aimless, yet simultaneously overwhelming for me, as the game tried to point me in several directions at once.

But after jumping in for the first time in a couple of years, it feels more guided with the introduction of the new mode select screen, as well as clever onboarding for brand-new players. And with the myriad graphical and quality-of-life improvements surrounding load times, it feels like a minor reinvention for an online game that really needed one.

Image: Rockstar Games

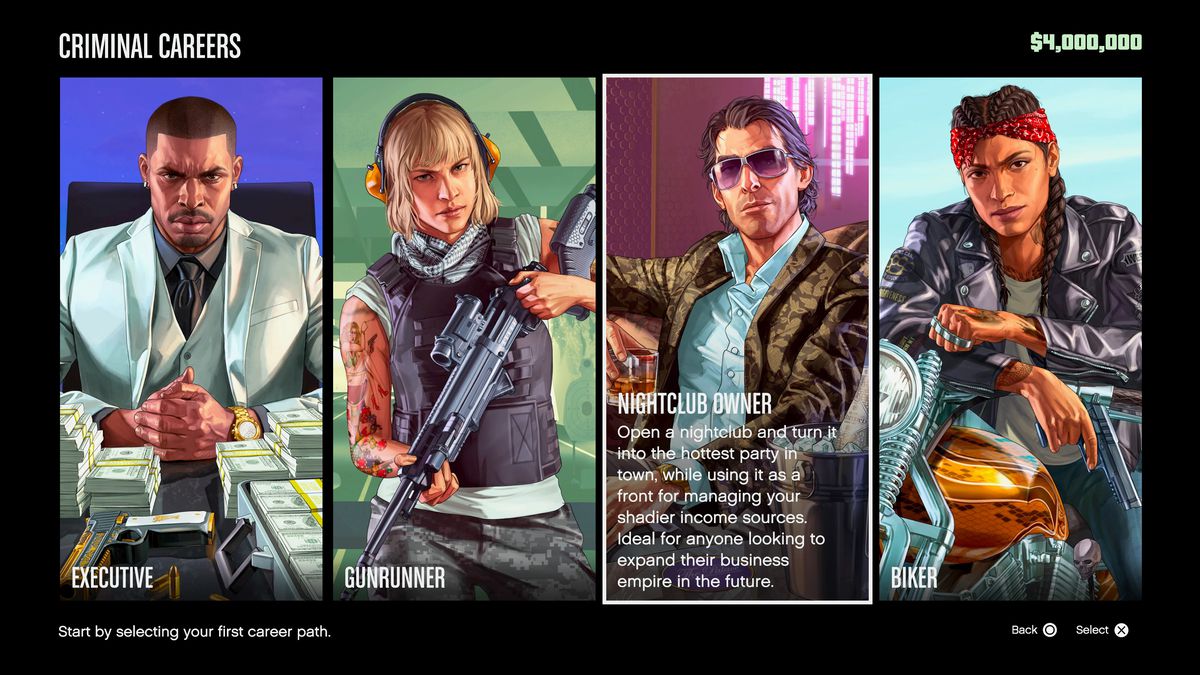

Another big update to GTA Online will be apparent immediately to new players, or those who want to start a new character. Instead of being cut loose from the start and, more or less, having to find your own fun, you’ll first choose a career path (basically, a class), ranging from a real estate-focused executive and nightclub owner to a straight crime-focused biker or gunrunner, and that will put you on a fast track to the kind of gameplay style and content you may like most.

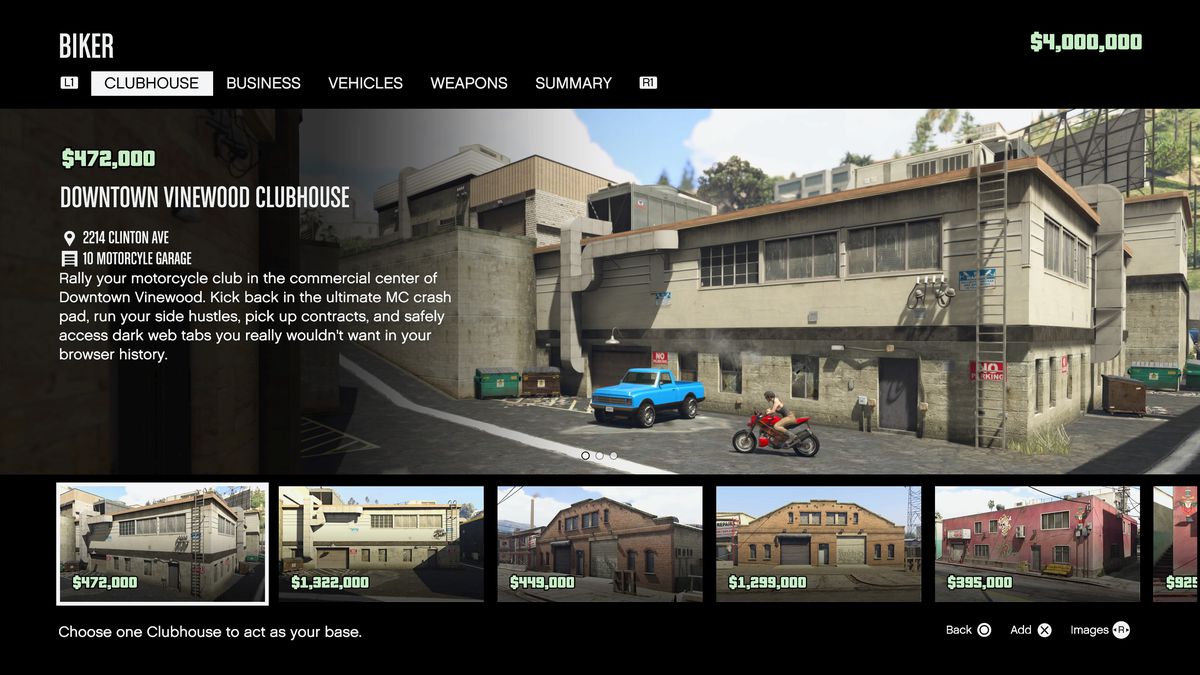

I chose the biker class, and the game tasked me with starting a weed farm and making transactions on the dark web. Within the first 10 minutes of playing, I’m told that I’m the president of my biker gang, which I’ve named “Schweppes” in honor of the best seltzer brand, and I can appoint several others to run the circus with me, if I want.

You’ll get $4 million in GTA bucks from the start, which you can spend on a clubhouse, business, vehicle, weaponry, and more before you even get out of jail. The game actually won’t let you continue unless you spend at least $3 million of that startup cash injection, presumably to keep some semblance of balance in its online economy. It’s nice to have weapons, vehicles, and a motivation to keep going after five minutes of playing the game.

Image: Rockstar Games

I don’t know how seriously I want to commit to the biker pathway that GTA Online has put me on, but it’s having me try things I’d never have thought to do before. Compared to my previous experiences with GTA Online (first at launch in 2013, then again when the PC version debuted in 2015), I actually want to keep playing this time. I’m still less interested in investing in and managing property than I am in racing and getting into gunfights, but the underlying structure and variety in the gameplay give GTA Online more depth to match its new coat of paint.

For PS5 and Xbox Series X / S owners who want to check out the latest version of GTA Online, it’s available as a standalone purchase for the first time since its 2013 debut. It’s free to play for PS5 owners until June 14th, 2022 (and $9.99 on Xbox until that date), but it will eventually cost $19.99 on both console platforms.

Technology

Meta held talks to buy Thinking Machines, Perplexity, and Safe Superintelligence

In addition to Ilya Sutskever’s Safe Superintelligence (SSI), sources tell me the Meta CEO recently discussed buying ex-OpenAI CTO Mira Murati’s Thinking Machines Lab and Perplexity, the AI-native Google rival. None of these talks progressed to the formal offer stage for various reasons, including disagreements over deal prices and strategy, but together they illustrate how aggressively Zuckerberg has been canvassing the industry to reboot his AI efforts.

Now, details about the team Zuckerberg is assembling are starting to come into view: SSI co-founder and CEO Daniel Gross, along with ex-GitHub CEO Nat Friedman, are poised to co-lead the Meta AI assistant. Both men will report to Alexandr Wang, the former Scale CEO Zuckerberg just paid over $14 billion to quickly hire. Wang told his Scale team goodbye last Friday and was in the Meta office on Monday. This week, he has been meeting with top Meta leaders (more on that below) and continuing to recruit for the new AI team Zuckerberg has tasked him with building. I expect the team to be unveiled as soon as next week.

Rather than join Meta, Sutskever, Murati, and Perplexity CEO Aravind Srinivas have all gone on to raise more money at higher valuations. Sutskever, a titan of the AI research community who co-founded OpenAI, recently raised a couple of billion dollars for SSI. Both Meta and Google are investors in his company, I’m told. Murati also just raised a couple of billion dollars. Neither she nor Sutskever is close to releasing a product. Srinivas, meanwhile, is in the process of raising around $500 million for Perplexity.

Spokespeople for all the companies involved either declined to comment or didn’t respond in time for publication. The Information and CNBC first reported Zuckerberg’s talks with Safe Superintelligence, while Bloomberg first reported the Perplexity talks.

While Zuckerberg’s recruiting drive is motivated by the urgency he feels to fix Meta’s AI strategy, the situation also highlights the fierce competition for top AI talent these days. In my conversations this week, those on the inside of the industry aren’t surprised by Zuckerberg making nine-figure — or even, yes, 10-figure — compensation offers for the best AI talent. There are certain senior people at OpenAI, for example, who are already compensated in that ballpark, thanks to the company’s meteoric increase in valuation over the last few years.

Speaking of OpenAI, it’s clear that CEO Sam Altman is at least a bit rattled by Zuckerberg’s hiring spree. His decision to appear on his brother’s podcast this week and say that “none of our best people” are leaving for Meta was probably meant to convey a position of strength, but in reality, it looks like he is throwing his former colleagues under the bus. I was confused by Altman’s suggestion that Meta paying a lot upfront for talent won’t “set up a great culture.” After all, didn’t OpenAI just pay $6.5 billion to hire Jony Ive and his small hardware team?

“We think that glasses are the best form factor for AI”

When I joined a Zoom call with Alex Himel, Meta’s VP of wearables, this week, he had just gotten off a call with Zuckerberg’s new AI chief, Alexandr Wang.

“There’s an increasing number of Alexes that I talk to on a regular basis,” Himel joked as we started our conversation about Meta’s new glasses release with Oakley. “I was just in my first meeting with him. There were like three people in a room with the camera real far away, and I was like, ‘Who is talking right now?’ And then I was like, ‘Oh, hey, it’s Alex.’”

The following Q&A has been edited for length and clarity:

How did your meeting with Alex just now go?

The meeting was about how to make AI as awesome as it can be for glasses. Obviously, there are some unique use cases in the glasses that aren’t stuff you do on a phone. The thing we’re trying to figure out is how to balance it all, because AI can be everything to everyone or it could be amazing for more specific use cases.

We’re trying to figure out how to strike the right balance because there’s a ton of stuff in the underlying Llama models and that whole pipeline that we don’t care about on glasses. Then there’s stuff we really, really care about, like egocentric view and trying to feed video into the models to help with some of the really aspirational use cases that we wouldn’t build otherwise.

You are referring to this new lineup with Oakley as “AI glasses.” Is that the new branding for this category? They are AI glasses, not smart glasses?

We refer to the category as AI glasses. You saw Orion. You used it for longer than anyone else in the demo, which I commend you for. We used to think that’s what you needed to hit scale for this new category. You needed the big field of view and display to overlay virtual content. Our opinion of that has definitely changed. We think we can hit scale faster, and AI is the reason we think that’s possible.

Right now, the top two use cases for the glasses are audio — phone calls, music, podcasts — and taking photos and videos. We look at participation rates of our active users, and those have been one and two since launch. Audio is one. A very close second is photos and videos.

AI has been number three from the start. As we’ve been launching more markets — we’re now in 18 — and we’ve been adding more features, AI is creeping up. Our biggest investment by a mile on the software side is AI functionality, because we think that glasses are the best form factor for AI. They are something you’re already wearing all the time. They can see what you see. They can hear what you hear. They’re super accessible.

Is your goal to have AI supersede audio and photo to be the most used feature for glasses, or is that not how you think about it?

From a math standpoint, at best, you could tie. We do want AI to be something that’s increasingly used by more people more frequently. We think there’s definitely room for the audio to get better. There’s definitely room for image quality to get better. The AI stuff has much more headroom.

How much of the AI is onboard the glasses versus the cloud? I imagine you have lots of physical constraints with this kind of device.

We’ve now got one-billion-parameter models that can run on the frame. So, increasingly, there’s stuff there. Then we have stuff running on the phone.

If you were watching WWDC, Apple made a couple of announcements that we haven’t had a chance to test yet, but we’re excited about. One is the Wi-Fi Aware APIs. We should be able to transfer photos and videos without having people tap that annoying dialogue box every time. That’d be great. The second one was processor background access, which should allow us to do image processing when you transfer the media over. Syncing would work just like it does on Android.

Do you think the market for these new Oakley glasses will be as big as the Ray-Bans? Or is it more niche because they are more outdoors and athlete-focused?

We work with EssilorLuxottica, which is a great partner. Ray-Ban is their largest brand. Within that, the most popular style is Wayfarer. When we launched the original Ray-Ban Meta glasses, we went with the most popular style for the most popular brand.

Their second biggest brand is Oakley. A lot of people wear them. The Holbrook is really popular. The HSTN, which is what we’re launching, is a really popular analog frame. We increasingly see people using the Ray-Ban Meta glasses for active use cases. This is our first step into the performance category. There’s more to come.

What’s your reaction to Google’s announcements at I/O for their XR glasses platform and eyewear partnerships?

We’ve been working with EssilorLuxottica for like five years now. That’s a long time for a partnership. It takes a while to get really in sync. I feel very good about the state of our partnership. We’re able to work quickly. The Oakley Meta glasses are the fastest program we’ve had by quite a bit. It took less than nine months.

I thought the demos they [Google] did were pretty good. I thought some of those were pretty compelling. They didn’t announce a product, so I can’t react specifically to what they’re doing. It’s flattering that people see the traction we’re getting and want to jump in as well.

On the AR glasses front, what have you been learning from Orion now that you’ve been showing it to the outside world?

We’ve been going full speed on that. We’ve actually hit some pretty good internal milestones for the next version of it, which is the one we plan to sell. The biggest learning from using them is that we feel increasingly good about the input and interaction model with eye tracking and the neural band. I wore mine during March Madness in the office. I was literally watching the games. Picture yourself sitting at a table with a virtual TV just above people’s heads. It was amazing.

- TikTok gets to keep operating illegally. As expected, President Trump extended his enforcement deadline for the law that has banned a China-owned TikTok in the US. It’s essential to understand what is really happening here: Trump is instructing his Attorney General not to enforce earth-shattering fines on Apple, Google, and every other American company that helps operate TikTok. The idea that he wouldn’t use this immense leverage to extract whatever he wants from these companies is naive, and this whole process makes a mockery of everyone involved, not to mention the US legal system.

- Amazon will hire fewer people because of AI. When you make an employee memo a press release, you’re trying to tell the whole world what’s coming. In this case, Amazon CEO Andy Jassy wants to make clear that he’s going to fully embrace AI to cut costs. Roughly 30 percent of Amazon’s code is already written by AI, and I’m sure Jassy is looking at human-intensive areas, such as sales and customer service, to further automate.

As always, I welcome your feedback, especially if you’ve also turned down Zuck. You can respond here or ping me securely on Signal.

Technology

What AI's insatiable appetite for power means for our future

Every time you ask ChatGPT a question, ask it to generate an image or let artificial intelligence summarize your email, something big is happening behind the scenes. It isn’t happening on your device but in sprawling data centers filled with servers, GPUs and cooling systems that require massive amounts of electricity.

The modern AI boom is pushing our power grid to its limits. ChatGPT alone processes roughly 1 billion queries per day, each requiring data center resources far beyond what’s on your device.

In fact, the energy needed to support artificial intelligence is rising so quickly that it has already delayed the retirement of several coal plants in the U.S., with more delays expected. Some experts warn that the AI arms race is outpacing the infrastructure meant to support it. Others argue it could spark long-overdue clean energy innovation.

AI isn’t just reshaping apps and search engines. It’s also reshaping how we build, fuel and regulate the digital world. The race to scale up AI capabilities is accelerating faster than most infrastructure can handle, and energy is becoming the next major bottleneck.

Here’s a look at how AI is changing the energy equation, and what it might mean for our climate future.

ChatGPT on a computer (Kurt “CyberGuy” Knutsson)

Why AI uses so much power, and what drives the demand

Running artificial intelligence at scale requires enormous computational power. Unlike traditional internet activity, which mostly involves pulling up stored information, AI tools perform intensive real-time processing. Whether training massive language models or responding to user prompts, AI systems rely on specialized hardware like GPUs (graphics processing units) that consume far more power than legacy servers. GPUs are designed to handle many calculations in parallel, which is perfect for the matrix-heavy workloads that power generative AI and deep learning systems.

To give you an idea of scale: one Nvidia H100 GPU, commonly used in AI training, consumes up to 700 watts on its own. Training a single large AI model like GPT-4 may require thousands of these GPUs running continuously for weeks. Multiply that across dozens of models and hundreds of data centers, and the numbers escalate quickly. A traditional data center rack might use around 8 kilowatts (kW) of power. An AI-optimized rack using GPUs can demand 45-55 kW or more. Multiply that across an entire building or campus of racks, and the difference is staggering.
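To make the per-GPU figure concrete, here is a back-of-the-envelope sketch. The cluster size (10,000 GPUs) and run length (30 days) are hypothetical values chosen for illustration, not figures for GPT-4 or any real training run, and the calculation counts only GPU draw at the 700-watt maximum quoted above, ignoring CPUs, networking and cooling.

```python
# Back-of-the-envelope GPU energy for a HYPOTHETICAL training run.
# Assumptions (illustrative only): every GPU draws its 700 W maximum
# for the whole run; CPUs, networking and cooling are ignored.

H100_MAX_WATTS = 700  # per-GPU peak figure quoted in the article


def training_energy_mwh(num_gpus: int, days: float,
                        watts_per_gpu: float = H100_MAX_WATTS) -> float:
    """Return GPU-only energy in megawatt-hours for a continuous run."""
    hours = days * 24
    watt_hours = num_gpus * watts_per_gpu * hours
    return watt_hours / 1_000_000  # 1 MWh = 1,000,000 Wh


def rack_ratio(ai_rack_kw: float, traditional_rack_kw: float = 8.0) -> float:
    """How many times more power an AI rack draws than a traditional one."""
    return ai_rack_kw / traditional_rack_kw


if __name__ == "__main__":
    # Hypothetical cluster: 10,000 GPUs running for 30 days.
    energy = training_energy_mwh(num_gpus=10_000, days=30)
    print(f"GPU energy: {energy:,.0f} MWh")
    # Article's range for AI racks: 45-55 kW versus about 8 kW.
    for kw in (45, 55):
        print(f"{kw} kW rack: {rack_ratio(kw):.1f}x a traditional rack")
```

Under these assumptions the GPUs alone use about 5,040 MWh (roughly 5 GWh) before any cooling overhead, and an AI rack draws roughly 5.6 to 6.9 times the power of a traditional one.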

Cooling all that hardware adds another layer of energy demand. Keeping AI servers from overheating accounts for 30-55% of a data center’s total power use. Advanced cooling methods like liquid immersion are helping, but scaling those across the industry will take time.
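If cooling takes a fixed share of a facility’s total electricity, the total can be worked backward from the servers’ own load. The sketch below assumes, for simplicity, that everything other than cooling is server (IT) load; real facilities also lose power in distribution, lighting and other overheads. The 1 MW server load is a hypothetical input.

```python
def total_facility_mw(it_load_mw: float, cooling_share: float) -> float:
    """Total facility power when cooling is `cooling_share` of the total.

    Simplifying assumption: total = IT load + cooling, so
    IT load = total * (1 - cooling_share).
    """
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    return it_load_mw / (1 - cooling_share)


if __name__ == "__main__":
    # Article's range: cooling is 30-55% of total data center power.
    for share in (0.30, 0.55):
        total = total_facility_mw(1.0, share)
        print(f"cooling {share:.0%}: {total:.2f} MW total, "
              f"{total - 1.0:.2f} MW of it for cooling")
```

At the low end of the quoted range, each megawatt of servers needs roughly 0.43 MW of cooling; at the high end, about 1.22 MW, so cooling alone can exceed what the servers themselves draw.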

On the upside, AI researchers are developing more efficient ways to run these systems. One promising approach is the “mixture of experts” model architecture, which activates only a portion of the full model for each input (in language models, typically each token). This method can significantly reduce the amount of computation, and therefore energy, required without sacrificing performance.
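The core idea of a mixture-of-experts layer fits in a few lines: a small gating function scores every expert, only the top-scoring k experts actually run, and their outputs are blended using the normalized scores. This is a toy sketch with hypothetical scalar “experts” and hand-picked gate weights, not any production model’s architecture; in real models the experts are neural-network blocks and the gate is learned.

```python
import math
from typing import Callable, List, Tuple

Expert = Callable[[float], float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: float, experts: List[Expert], gate_weights: List[float],
                k: int = 2) -> Tuple[float, List[int]]:
    """Route input x to the top-k experts only.

    Returns the blended output and the indices of the experts that ran.
    """
    # Gate: one score per expert (here simply a weight times the input).
    scores = [w * x for w in gate_weights]
    # Keep the k highest-scoring experts; the rest are never evaluated.
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    # Renormalize the gate scores over the chosen experts only.
    probs = softmax([scores[i] for i in top])
    output = sum(p * experts[i](x) for p, i in zip(probs, top))
    return output, top


if __name__ == "__main__":
    # Eight toy experts; expert i just multiplies its input by (i + 1).
    experts = [lambda x, i=i: (i + 1) * x for i in range(8)]
    gate_weights = [0.1, 0.9, 0.3, 0.8, 0.2, 0.05, 0.4, 0.6]
    y, used = moe_forward(1.0, experts, gate_weights, k=2)
    print(f"output={y:.3f}, experts run={sorted(used)} "
          f"({len(used)}/{len(experts)})")
```

Only two of the eight experts are evaluated for this input, which is where the compute savings come from; how much energy that saves in practice depends on the model and the hardware.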

How much power are we talking about?

In 2023, global data centers consumed about 500 terawatt-hours (TWh) of electricity. That is enough to power every home in California, Texas and Florida combined for an entire year. By 2030, the number could triple, with AI as the main driver.

To put it into perspective, the average home uses about 30 kilowatt-hours per day. One terawatt-hour is a billion times larger than a kilowatt-hour. That means 1 TWh could power 33 million homes for a day.
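The conversion above is easy to check, and the same arithmetic shows what 500 TWh means in household terms. The sketch uses only the article’s own 30 kWh-per-day average and ignores seasonal and regional variation.

```python
KWH_PER_TWH = 1_000_000_000  # 1 TWh = 10**9 kWh
HOME_KWH_PER_DAY = 30        # article's average-home figure


def home_days(twh: float) -> float:
    """Number of average homes that `twh` could power for one day."""
    return twh * KWH_PER_TWH / HOME_KWH_PER_DAY


def home_years(twh: float) -> float:
    """Number of average homes that `twh` could power for a full year."""
    return home_days(twh) / 365


if __name__ == "__main__":
    print(f"1 TWh   -> {home_days(1) / 1e6:.1f} million homes for a day")
    print(f"500 TWh -> {home_years(500) / 1e6:.1f} million homes for a year")
```

This reproduces the figure of about 33 million homes for a day, and shows that 500 TWh would cover roughly 45.7 million such homes for a full year.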

Data center (Kurt “CyberGuy” Knutsson)

AI’s energy demand is outpacing the power grid

The demand for AI is growing faster than the energy grid can adapt. In the U.S., data center electricity use is expected to surpass 600 TWh by 2030, tripling current levels. Meeting that demand requires the equivalent of adding 14 large power plants to the grid. Large AI data centers can each require 100–500 megawatts (MW), and the largest facilities may soon exceed 1 gigawatt (GW), which is about as much as a nuclear power plant or a small U.S. state. One 1 GW data center could consume more power than the entire city of San Francisco. Multiply that by a few dozen campuses across the country, and you start to see how quickly this demand adds up.
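Power (gigawatts) and energy (terawatt-hours) are easy to mix up. A facility drawing a constant 1 GW for a full year uses 8.76 TWh, and dividing an annual total by the 8,760 hours in a year gives the average continuous load. The sketch assumes round-the-clock operation at a steady draw, which real data centers only approximate.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760; ignores leap years


def annual_twh(average_gw: float) -> float:
    """Energy used in a year by a facility with this average draw."""
    return average_gw * HOURS_PER_YEAR / 1_000  # GWh -> TWh


def average_gw(annual_twh_value: float) -> float:
    """Average continuous load implied by an annual energy total."""
    return annual_twh_value * 1_000 / HOURS_PER_YEAR


if __name__ == "__main__":
    print(f"1 GW campus: {annual_twh(1.0):.2f} TWh per year")
    print(f"100-500 MW sites: {annual_twh(0.1):.2f}-{annual_twh(0.5):.2f} TWh per year")
    print(f"600 TWh per year: about {average_gw(600):.0f} GW of continuous load")
```

On these assumptions, the 600 TWh projection corresponds to roughly 68 GW of round-the-clock demand, or about 68 one-gigawatt campuses running continuously.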

To keep up, utilities across the country are delaying coal plant retirements, expanding natural gas infrastructure and shelving clean energy projects. In states like Utah, Georgia and Wisconsin, energy regulators have approved new fossil fuel investments directly linked to data center growth. By 2035, data centers could account for 8.6% of all U.S. electricity demand, up from 3.5% today.

Despite public pledges to support sustainability, tech companies are inadvertently driving a fossil fuel resurgence. For the average person, this shift could increase electricity costs, strain regional energy supplies and complicate state-level clean energy goals.

Power grid facility (Kurt “CyberGuy” Knutsson)

Can big tech keep its green energy promises?

Tech giants Microsoft, Google, Amazon and Meta all claim they are working toward a net-zero emissions future. In simple terms, this means balancing the amount of greenhouse gases they emit with the amount they remove or offset, ideally bringing their net contribution to climate change down to zero.

These companies purchase large amounts of renewable energy to offset their usage and invest in next-generation energy solutions. For example, Microsoft has a contract with fusion start-up Helion to supply clean electricity by 2028.

However, critics argue these clean energy purchases do not reflect the reality on the ground. Because the grid is shared, even if a tech company buys solar or wind power on paper, fossil fuels often fill the gap for everyone else.

Microsoft, Google and Amazon have pledged to power their data centers with 100% renewable energy. Still, some researchers say this model is more beneficial for company accounting than for climate progress: the numbers might look clean on a corporate emissions report, but the grid those data centers draw from still includes coal and gas, which fill the gap whenever renewables aren’t available.
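How can a company buy as much renewable energy as it uses and still rely on fossil fuels? A toy calculation makes the gap visible by comparing matching on totals, where purchases merely equal consumption over a period, with hourly matching, which counts only renewable power available in the same hour. The load and solar profiles below are invented for illustration and do not describe any company’s data centers.

```python
from typing import List


def volumetric_share(load: List[float], renewable: List[float]) -> float:
    """Renewable share when only the totals are compared ("on paper")."""
    return min(sum(renewable) / sum(load), 1.0)


def hourly_matched_share(load: List[float], renewable: List[float]) -> float:
    """Share of load actually covered by renewables in the same hour."""
    covered = sum(min(demand, supply) for demand, supply in zip(load, renewable))
    return covered / sum(load)


if __name__ == "__main__":
    # HYPOTHETICAL day: a data center drawing a flat 100 MW every hour,
    # and a solar contract producing 200 MW for 12 daylight hours, 0 at night.
    load = [100.0] * 24
    solar = [0.0] * 6 + [200.0] * 12 + [0.0] * 6
    print(f"on paper:     {volumetric_share(load, solar):.0%} renewable")
    print(f"hour by hour: {hourly_matched_share(load, solar):.0%} renewable")
    # The uncovered night-time hours are served by whatever else is on
    # the shared grid, which in many regions still includes gas and coal.
```

Both profiles total 2,400 MWh, so the purchase looks 100% renewable on paper, yet only half of the consumption is met by solar in real time.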

Some critics argue that voluntary pledges alone are not enough. Unlike traditional industries, there is no standardized regulatory framework requiring tech companies to disclose detailed energy usage from AI operations. This lack of transparency makes it harder to track whether green pledges are translating into meaningful action, especially as workloads shift to third-party contractors or overseas operations.

A wind energy farm (Kurt “CyberGuy” Knutsson)

The future of clean energy for AI and its limits

To meet soaring energy needs without worsening emissions, tech companies are investing in advanced energy projects. These include small nuclear reactors built directly next to data centers, deep geothermal systems and nuclear fusion.

While promising, these technologies face enormous technical and regulatory hurdles. Fusion, for example, has never reached commercial break-even, meaning no fusion power plant has yet produced more energy than the whole facility consumes. Even the most optimistic experts say we may not see scalable fusion before the 2030s.

Beyond the technical barriers, many people have concerns about the safety, cost and long-term waste management of new nuclear systems. While proponents argue these designs are safer and more efficient, public skepticism remains a real hurdle. Community resistance is also a factor. In some regions, proposals for nuclear microreactors or geothermal drilling have faced delays due to concerns over safety, noise and environmental harm. Building new data centers and associated power infrastructure can take up to seven years, due to permitting, land acquisition and construction challenges.

Google recently activated a geothermal project in Nevada, but it only generates enough power for a few thousand homes. The next phase may be able to power a single data center by 2028. Meanwhile, companies like Amazon and Microsoft continue building sites that consume more power than entire cities.

Will AI help or harm the environment?

This is the central debate. Advocates argue that AI could ultimately help accelerate climate progress by optimizing energy grids, modeling emissions patterns and inventing better clean technology. Microsoft and Google have both cited these uses in their public statements. But critics warn that the current trajectory is unsustainable. Without major breakthroughs or stricter policy frameworks, the energy cost of AI may overwhelm climate gains. A recent forecast estimated that AI could add 1.7 gigatons of carbon dioxide to global emissions between 2025 and 2030, roughly a third of what the U.S. emits in a single year.

Water use, rare mineral demand and land-use conflicts are also emerging concerns as AI infrastructure expands. Large data centers often require millions of gallons of water for cooling each year, which can strain local water supplies. The demand for critical minerals like lithium, cobalt and rare earth elements — used in servers, cooling systems and power electronics — creates additional pressure on supply chains and mining operations. In some areas, communities are pushing back against land being rezoned for large-scale tech development.

Rapid hardware turnover is also adding to the environmental toll. As AI systems evolve quickly, older GPUs and accelerators are replaced more frequently, creating significant electronic waste. Without strong recycling programs in place, much of this equipment ends up in landfills or is exported to developing countries.

The question isn’t just whether AI can become cleaner over time. It’s whether we can scale the infrastructure needed to support it without falling back on fossil fuels. Meeting that challenge will require tighter collaboration between tech companies, utilities and policymakers. Some experts warn that AI could either help fight climate change or make it worse, and the outcome depends entirely on how we choose to power the future of computing.

Kurt’s key takeaways

AI is revolutionizing how we work, but it is also transforming how we use energy. Data centers powering AI systems are becoming some of the world’s largest electricity consumers. Tech companies are betting big on futuristic solutions, but the reality is that many fossil fuel plants are staying online longer just to meet AI’s rising energy demand. Whether AI ends up helping or hurting the climate may depend on how quickly clean energy breakthroughs catch up and how honestly we measure progress.

Is artificial intelligence worth the real-world cost of fossil resurgence? Let us know your thoughts by writing to us at Cyberguy.com/Contact.

Copyright 2025 CyberGuy.com. All rights reserved.

Technology

SpaceX Starship explodes again, this time on the ground

Late Wednesday night at about 11PM CT, SpaceX was about to perform a static fire test of Ship 36, ahead of a planned 10th flight test for its Starship, when there was suddenly a massive explosion at the Massey’s Testing Center site. SpaceX says “A safety clear area around the site was maintained throughout the operation and all personnel are safe and accounted for,” and that there are no hazards to residents in the area of its recently incorporated town of Starbase, Texas.

“After completing a single-engine static fire earlier this week, the vehicle was in the process of loading cryogenic propellant for a six-engine static fire when a sudden energetic event resulted in the complete loss of Starship and damage to the immediate area surrounding the stand,” according to an update on SpaceX’s website. “The explosion ignited several fires at the test site which remains clear of personnel and will be assessed once it has been determined to be safe to approach. Individuals should not attempt to approach the area while safing operations continue.”

The explosion follows others during the seventh, eighth, and ninth Starship flight tests earlier this year. “Initial analysis indicates the potential failure of a pressurized tank known as a COPV, or composite overwrapped pressure vessel, containing gaseous nitrogen in Starship’s nosecone area, but the full data review is ongoing,” SpaceX says. On X, the company called the explosion a “major anomaly.”

Fox 26 Houston says that, according to authorities, there have been no injuries reported. SpaceX also says no injuries have been reported.

This flight test would’ve continued using SpaceX’s “V2” Starship design, which Musk said in 2023, “holds more propellant, reduces dry mass and improves reliability.” SpaceX is also preparing a new V3 design that, according to Musk, was tracking toward a rate of launching once a week in about 12 months.

Update, June 19th: Added information from SpaceX.
