Science

How might JPL look for life on watery worlds? With the help of this slithering robot

Engineers at NASA’s Jet Propulsion Laboratory are taking artificial intelligence to the next level — by sending it into space disguised as a robotic snake.

With the sun beating down on JPL’s Mars Yard, the robot lifts its “head” off a glossy surface of faux ice to scan the world around it. It maps its surroundings, analyzes potential obstacles and chooses the safest path through a valley of fake boulders to the destination it has been instructed to reach.

EELS raises its head unit to scan its surroundings.

(Brian van der Brug / Los Angeles Times)

Once it has a plan in place, the 14-foot-long robot lowers its head, engages its 48 motors and slowly slithers forward. Its cautious movements are propelled by the clockwise or counterclockwise turns of the spiral connectors that link its 10 body segments, sending the robot in a specific direction. The entire time, sensors all along its body continue to reevaluate the environs, allowing the robot to make adjustments if needed.

JPL engineers have created spacecraft to orbit distant planets and built rovers that rumble around Mars as though they’re commuting to the office. But EELS — short for Exobiology Extant Life Surveyor — is designed to go places that have never been accessible to humans or robots before.

The lava tubes on the moon? EELS could scope out the underground tunnels, which may provide shelter to future astronauts.

The polar ice caps on Mars? EELS would be able to explore them and deploy instruments to collect chemical and structural information about the frozen carbon dioxide.

The liquid ocean beneath the frozen surface of Enceladus? EELS could tunnel its way there and look for evidence that the Saturnian moon might be hospitable to life.

“You’re talking about a snake robot that can do surface traversal on ice, go through holes and swim underwater — one robot that can conquer all three worlds,” said Rohan Thakker, a robotics technologist at JPL. “No one has done that before.”

And if things go according to plan, the slithering space explorer developed with grant money from Caltech will do all of these things autonomously, without having to wait for detailed commands from handlers at the NASA lab in La Cañada Flintridge. Though still years away from its first official deployment, EELS is already learning how to hone its decision-making skills so it can navigate even dangerous terrain independently.

Hiro Ono, leader of JPL’s Robotic Surface Mobility Group, started out seven years ago with a different vision for exploring Enceladus and Europa, a watery moon of Jupiter. He imagined a three-part system consisting of a surface module that generated power and communicated with Earth; a descent module that picked its way through a moon’s icy crust; and an autonomous underwater vehicle that explored the subsurface ocean.

EELS replaces all of that.

EELS has spiral treads for traction and multiple body segments for flexibility. Its design allows it to wiggle out of all sorts of tricky terrain.

(Brian van der Brug / Los Angeles Times)

Thanks to its serpentine anatomy, this new space explorer can go forward and backward in a straight line, slither like a snake, move its entire body like a windshield wiper, curl itself into a circle, and lift its head and tail. The result is a robot that can’t be stymied by deep craters, icy terrain or small spaces.

“The most interesting science is sometimes in places that are difficult to reach,” said Matt Robinson, the project manager for EELS. Rovers struggle with steep slopes and irregular surfaces. But a snake-like robot would be able to reach places such as an underground lunar cave or the near-vertical wall of a crater, he said.

The farther away a spacecraft is, the longer it takes for human commands to reach it. The rovers on Mars are remote-controlled by humans at JPL, and depending on the relative positions of Earth and Mars, it can take five to 20 minutes for messages to travel between them.

Enceladus, on the other hand, can be anywhere from 746 million to more than 1 billion miles from Earth. A radio transmission from way out there would take at least an hour to arrive, and perhaps as long as an hour and a half. If EELS found itself in jeopardy and needed human help to get out of it, its fate might be sealed by the time its SOS received a reply.
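Those figures follow from the speed of light. As a rough check, assuming a radio signal covers about 186,282 miles per second in a vacuum (a physical constant, not a number supplied by JPL), the one-way delay for the two distances cited above can be sketched as:

```python
# Speed of light in a vacuum, in miles per second (physical constant).
SPEED_OF_LIGHT_MILES_PER_S = 186_282.397


def one_way_delay_minutes(distance_miles: float) -> float:
    """Return the minutes a radio signal needs to cover distance_miles."""
    return distance_miles / SPEED_OF_LIGHT_MILES_PER_S / 60.0


# Earth-to-Enceladus distances quoted in the article.
nearest = one_way_delay_minutes(746e6)
farthest = one_way_delay_minutes(1e9)

print(f"nearest: {nearest:.0f} min, farthest: {farthest:.0f} min")
```

The two delays come out to roughly 67 and 89 minutes, in line with the hour to hour and a half described above. A call for help and its reply would take twice as long.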

“Most people get frustrated when their video game has a few-second lag,” Robinson said. “Imagine controlling a spacecraft that’s in a dangerous area and has a 50-minute lag.”

That’s why EELS is learning how to make its own choices about getting from Point A to Point B.

EELS autonomy lead Rohan Thakker, second from left, confers with engineers as they put the robot through its paces.

(Brian van der Brug / Los Angeles Times)

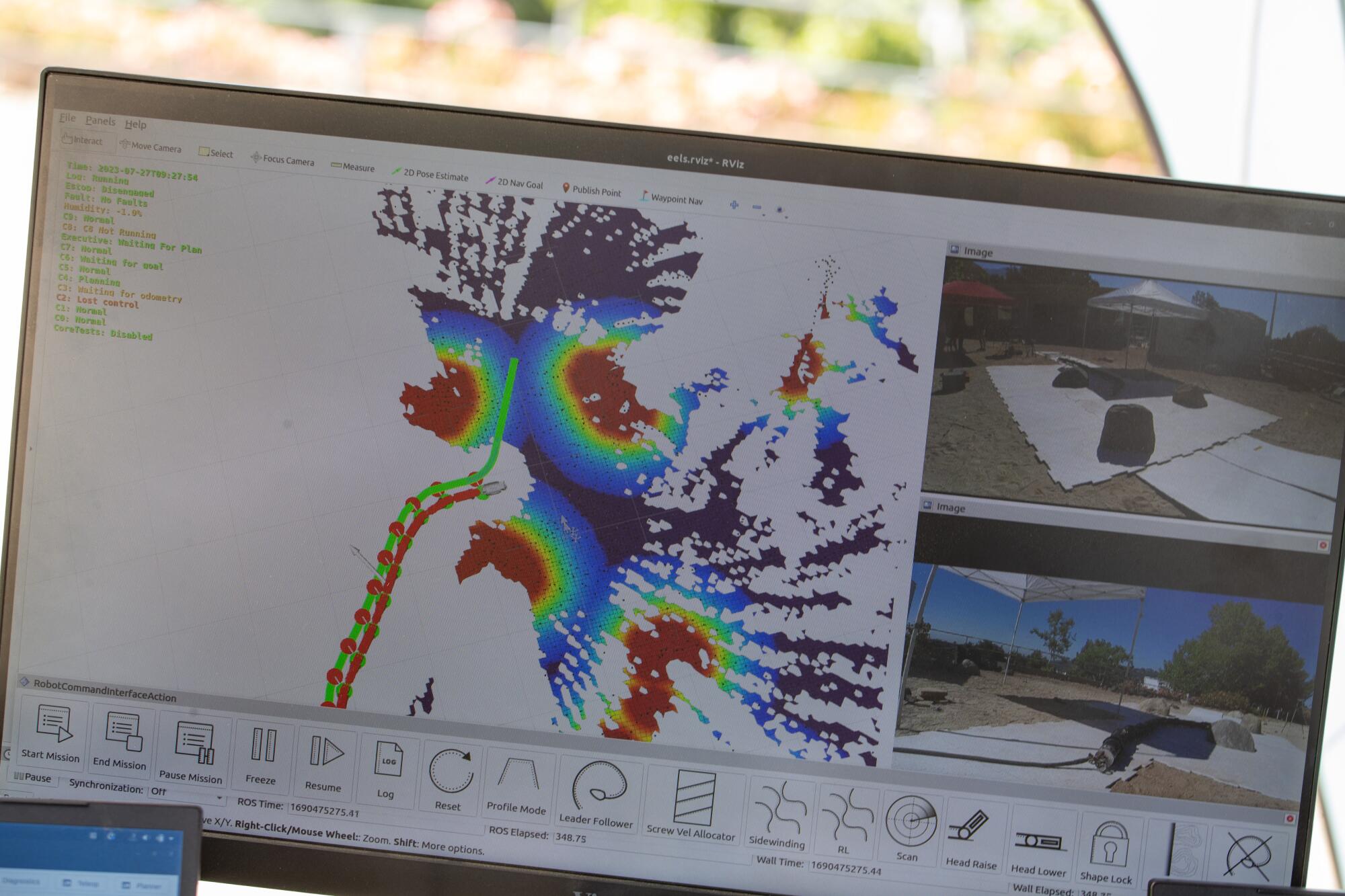

A computer screen shows EELS’ actual position as compared with its programmed position.

(Brian van der Brug / Los Angeles Times)

Teaching the robot how to assess its environment and make decisions quickly is a multi-step process.

First, EELS is taught to be safe. With the help of software that calculates the probability of failures — such as crashing into something or getting stuck — EELS is learning to identify potentially dangerous situations. For instance, it is figuring out that when something like fog interferes with its ability to map the world around it, it should respond by proceeding more cautiously, said Thakker, the autonomy lead for the project.
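The article does not describe how that software works, so what follows is only an illustrative sketch of the general idea, not the EELS implementation. It assumes a hypothetical function, `cautious_speed`, and an invented `risk_limit` threshold: the higher the estimated chance of failure, the slower the robot moves, and past the threshold it stops.

```python
def cautious_speed(max_speed: float, failure_probability: float,
                   risk_limit: float = 0.2) -> float:
    """Scale speed down linearly as the estimated failure probability rises.

    risk_limit is a hypothetical threshold: at or above it, the robot halts.
    """
    if not 0.0 <= failure_probability <= 1.0:
        raise ValueError("failure_probability must be between 0 and 1")
    if failure_probability >= risk_limit:
        return 0.0
    return max_speed * (1.0 - failure_probability / risk_limit)


# Clear view: low estimated risk, so nearly full speed.
clear = cautious_speed(1.0, 0.02)
# Fog degrades the map: higher estimated risk, so a slower crawl.
foggy = cautious_speed(1.0, 0.15)
print(clear, foggy)
```

In this toy version, fog matters only because it raises the estimated failure probability. A real system would presumably weigh many more factors.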

It also relies on its array of built-in sensors. Some can detect a change in its orientation with respect to gravity — the robot equivalent of feeling as though you’re falling. Others measure the stability of the ground and can tell whether hard ice suddenly turns into loose snow, so that EELS can maneuver itself to a more navigable surface, Thakker said.

In addition, EELS is able to incorporate past experiences into its decision-making process — in other words, it learns. But it does so a little differently than a typical robot powered by artificial intelligence.

For example, if an AI robot were to spot a puddle of water, it might investigate a bit before jumping in. The next time it encountered a puddle, it would recognize it, remember that it was safe and jump in.

But that could be deadly in a dynamic environment. Thanks to EELS’ extra programming, it would know to evaluate the puddle every single time: just because it was safe once doesn’t guarantee it will be safe again.

In the Mars Yard, a half-acre rocky sandbox used to test rovers, Thakker and the team assign EELS a specific destination. Then it’s up to the robot to use its sensors to scan the world around it and plot the best path forward, whether it’s directly in the dirt or on white mats made to mimic ice.

JPL engineers tested EELS on glossy mats that served as a stand-in for ice.

(Brian van der Brug / Los Angeles Times)

It’s similar to the navigation of a self-driving car, except there are no stop signs or speed limits to help EELS develop its strategy, Thakker said.
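For readers curious what "plotting the best path" can look like in software, here is a minimal sketch using Dijkstra's algorithm, a standard textbook method. Nothing in the article says EELS uses it. The grid, the risk numbers and the function name `safest_path` are all invented for illustration: each cell holds a risk score, and `None` marks a boulder the robot cannot cross.

```python
import heapq


def safest_path(risk, start, goal):
    """Return the lowest-total-risk route from start to goal, or None.

    risk is a grid (list of rows); None marks an impassable cell.
    """
    rows, cols = len(risk), len(risk[0])
    best = {start: 0.0}          # lowest known cost to reach each cell
    parent = {}                  # previous cell on the best route found
    frontier = [(0.0, start)]    # priority queue ordered by cost so far
    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue             # stale queue entry; a cheaper route exists
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and risk[nr][nc] is not None:
                new_cost = cost + risk[nr][nc]
                if new_cost < best.get((nr, nc), float("inf")):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(frontier, (new_cost, (nr, nc)))
    return None


# Hypothetical test yard: a boulder in the middle, risky ground on the right.
yard = [
    [1, 1, 1],
    [1, None, 9],
    [1, 1, 1],
]
print(safest_path(yard, (0, 0), (2, 2)))
```

On this made-up yard, the planner goes down the left side and along the bottom, avoiding the high-risk cell on the right even though both routes are the same length.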

EELS has also been tested on an ice rink, on a glacier and in snow. With its spiral treads for traction and multiple body segments for flexibility, it can wiggle itself out of all sorts of tricky terrain.

Mechanical engineer Sarah Yaericks holds the emergency stop as EELS moves around obstacles in the Mars Yard at JPL.

(Brian van der Brug / Los Angeles Times)

The robot isn’t the only one learning. As its human handlers monitor EELS’ progress, they adjust its software to help it make better assessments of its surroundings, Robinson said.

“It’s not like an equation you can just solve,” Ono said. “It can often be more of an art than science. … A lot comes from experience.”

The goal is for EELS to gain enough experience to be sent out on its own in any kind of setting.

“We aren’t there yet,” Ono said. But EELS’ recent advances amount to “one small step for the robot and one large step for mankind.”

Science

Cluster of farmworkers diagnosed with rare animal-borne disease in Ventura County

A cluster of workers at Ventura County berry farms have been diagnosed with a rare disease often transmitted through sick animals’ urine, according to a public health advisory distributed to local doctors by county health officials Tuesday.

The bacterial infection, leptospirosis, has resulted in severe symptoms for some workers, including meningitis, an inflammation of the membranes surrounding the brain and spinal cord. Symptoms for mild cases included headaches and fevers.

The disease, which can be fatal, rarely spreads from human to human, according to the U.S. Centers for Disease Control and Prevention.

Ventura County Public Health has not given an official case count but said it had not identified any cases outside of the agriculture sector. The county’s agriculture commissioner was aware of 18 cases, the Ventura County Star reported.

The health department said it was first contacted in October by a local physician who reported an unusual trend in symptoms among hospital patients.

After launching an investigation, the department identified leptospirosis as a probable cause of the illness and found most patients worked on caneberry farms that utilize hoop houses — greenhouse structures to shelter the crops.

As the investigation to identify any additional cases and the exact sources of exposure continues, Ventura County Public Health has asked healthcare providers to consider a leptospirosis diagnosis for sick agricultural workers, particularly berry harvesters.

Rodents are a common source and transmitter of the disease, though other mammals, including livestock, cats and dogs, can transmit it as well.

The disease is spread through bodily fluids, such as urine, and is often contracted through cuts and abrasions that contact contaminated water and soil, where the bacteria can survive for months.

Humans can also contract the illness through contaminated food; however, the county health agency has found no known health risks to the general public, including through contact with or consumption of caneberries such as raspberries and blackberries.

Symptom onset typically occurs between two and 30 days after exposure, and symptoms can last for months if untreated, according to the CDC.

The illness often begins with mild symptoms such as fevers, chills, vomiting and headaches. Some cases then enter a second, more severe phase that can result in kidney or liver failure.

Ventura County Public Health recommends that agricultural workers and berry harvesters regularly rinse any cuts with soap and water and cover them with bandages. It also recommends wearing waterproof protective clothing while working outdoors, including gloves, long-sleeve shirts and pants.

While there is no evidence of spread to the larger community, according to the department, residents should wash hands frequently and work to control rodents around their property if possible.

Pet owners can consult a veterinarian about leptospirosis vaccinations and should keep pets away from ponds, lakes and other natural bodies of water.

Science

Political stress: Can you stay engaged without sacrificing your mental health?

It’s been two weeks since Donald Trump won the presidential election, but Stacey Lamirand’s brain hasn’t stopped churning.

“I still think about the election all the time,” said the 60-year-old Bay Area resident, who wanted a Kamala Harris victory so badly that she flew to Pennsylvania and knocked on voters’ doors in the final days of the campaign. “I honestly don’t know what to do about that.”

Neither do the psychologists and political scientists who have been tracking the country’s slide toward toxic levels of partisanship.

Fully 69% of U.S. adults found the presidential election a significant source of stress in their lives, the American Psychological Assn. said in its latest Stress in America report.

The distress was present across the political spectrum, with 80% of Republicans, 79% of Democrats and 73% of independents surveyed saying they were stressed about the country’s future.

That’s unhealthy for the body politic — and for voters themselves. Stress can cause muscle tension, headaches, sleep problems and loss of appetite. Chronic stress can inflict more serious damage to the immune system and make people more vulnerable to heart attacks, strokes, diabetes, infertility, clinical anxiety, depression and other ailments.

In most circumstances, the sound medical advice is to disengage from the source of stress, therapists said. But when stress is coming from politics, that prescription pits the health of the individual against the health of the nation.

“I’m worried about people totally withdrawing from politics because it’s unpleasant,” said Aaron Weinschenk, a political scientist at the University of Wisconsin–Green Bay who studies political behavior and elections. “We don’t want them to do that. But we also don’t want them to feel sick.”

Modern life is full of stressors of all kinds: paying bills, pleasing difficult bosses, getting along with frenemies, caring for children or aging parents (or both).

The stress that stems from politics isn’t fundamentally different from other kinds of stress. What’s unique about it is the way it encompasses and enhances other sources of stress, said Brett Ford, a social psychologist at the University of Toronto who studies the link between emotions and political engagement.

For instance, she said, elections have the potential to make everyday stressors like money and health concerns more difficult to manage as candidates debate policies that could raise the price of gas or cut off access to certain kinds of medical care.

Layered on top of that is the fact that political disagreements have morphed into moral conflicts that are perceived as pitting good against evil.

“When someone comes into power who is not on the same page as you morally, that can hit very deeply,” Ford said.

Partisanship and polarization have raised the stakes as well. Voters who feel a strong connection to a political party become more invested in its success. That can make a loss at the ballot box feel like a personal defeat, she said.

There’s also the fact that we have limited control over the outcome of an election. A patient with heart disease can improve their prognosis by taking medicine, changing their diet, getting more exercise or quitting smoking. But a person with political stress is largely at the mercy of others.

“Politics is many forms of stress all rolled into one,” Ford said.

Weinschenk observed this firsthand the day after the election.

“I could feel it when I went into my classroom,” said the professor, whose research has found that people with political anxiety aren’t necessarily anxious in general. “I have a student who’s transgender and a couple of students who are gay. Their emotional state was so closed down.”

That’s almost to be expected in a place like Wisconsin, whose swing-state status caused residents to be bombarded with political messages. The more campaign ads a person is exposed to, the greater the risk of being diagnosed with anxiety, depression or another psychological ailment, according to a 2022 study in the journal PLOS One.

Political messages seem designed to keep voters “emotionally on edge,” said Vaile Wright, a licensed psychologist in Villa Park, Ill., and a member of the APA’s Stress in America team.

“It encourages emotion to drive our decision-making behavior, as opposed to logic,” Wright said. “When we’re really emotionally stimulated, it makes it so much more challenging to have civil conversation. For politicians, I think that’s powerful, because emotions can be very easily manipulated.”

Making voters feel anxious is a tried-and-true way to grab their attention, said Christopher Ojeda, a political scientist at UC Merced who studies mental health and politics.

“Feelings of anxiety can be mobilizing, definitely,” he said. “That’s why politicians make fear appeals — they want people to get engaged.”

On the other hand, “feelings of depression are demobilizing and take you out of the political system,” said Ojeda, author of “The Sad Citizen: How Politics is Depressing and Why it Matters.”

“What [these feelings] can tell you is, ‘Things aren’t going the way I want them to. Maybe I need to step back,’” he said.

Genessa Krasnow has been seeing a lot of that since the election.

The Seattle entrepreneur, who also campaigned for Harris, said it grates on her to see people laughing in restaurants “as if nothing had happened.” At a recent book club meeting, her fellow group members were willing to let her vent about politics for five minutes, but they weren’t interested in discussing ways they could counteract the incoming president.

“They’re in a state of disengagement,” said Krasnow, who is 56. She, meanwhile, is looking for new ways to reach young voters.

“I am exhausted. I am so sad,” she said. “But I don’t believe that disengaging is the answer.”

That’s the fundamental trade-off, Ojeda said, and there’s no one-size-fits-all solution.

“Everyone has to make a decision about how much engagement they can tolerate without undermining their psychological well-being,” he said.

Lamirand took steps to protect her mental health by cutting social media ties with people whose values aren’t aligned with hers. But she will remain politically active and expects to volunteer for phone-banking duty soon.

“Doing something is the only thing that allows me to feel better,” Lamirand said. “It allows me to feel some level of control.”

Ideally, Ford said, people would not have to choose between being politically active and preserving their mental health. She is investigating ways to help people feel hopeful, inspired and compassionate about political challenges, since these emotions can motivate action without triggering stress and anxiety.

“We want to counteract this pattern where the more involved you are, the worse you are,” Ford said.

The benefits would be felt across the political spectrum. In the APA survey, similar shares of Democrats, Republicans and independents agreed with statements like, “It causes me stress that politicians aren’t talking about the things that are most important to me,” and, “The political climate has caused strain between my family members and me.”

“Both sides are very invested in this country, and that is a good thing,” Wright said. “Antipathy and hopelessness really doesn’t serve us in the long run.”

Science

Video: SpaceX Unable to Recover Booster Stage During Sixth Test Flight

President-elect Donald Trump joined Elon Musk in Texas and watched the launch from a nearby location on Tuesday. While the Starship’s giant booster stage was unable to repeat a “chopsticks” landing, the vehicle’s upper stage successfully splashed down in the Indian Ocean.
