Twitter has released the code that chooses which tweets show up in your timeline on GitHub and has put out a blog post explaining the decision. The post breaks down what the algorithm looks at when deciding which tweets to feature in the For You timeline, and how it ranks and filters them.

Technology

Twitter takes its algorithm “open-source,” as Elon Musk promised


According to Twitter’s blog post, “the recommendation pipeline is made up of three main stages.” First, it gathers “the best Tweets from different recommendation sources,” then it ranks those tweets with “a machine learning model.” Finally, it filters out tweets from people you’ve blocked, tweets you’ve already seen, and tweets that aren’t safe for work, before putting them in your timeline.
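The three stages described in Twitter’s post can be illustrated with a toy sketch. The Python below is not Twitter’s code: the `Tweet` type, the `recommend` function and the dictionary of scores standing in for the machine learning model are all hypothetical, and the real pipeline is far more involved. It only shows candidates being gathered from several sources, ranked by a score, and then filtered.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Set


@dataclass(frozen=True)
class Tweet:
    # Hypothetical, minimal tweet record for illustration only.
    tweet_id: int
    author_id: int
    nsfw: bool = False


def recommend(
    sources: Iterable[Iterable[Tweet]],
    score: Callable[[Tweet], float],
    blocked_authors: Set[int],
    seen_ids: Set[int],
) -> List[Tweet]:
    # Stage 1 (candidate sourcing): merge candidates from every source,
    # keeping only the first copy of a tweet that appears in several sources.
    candidates = {}
    for source in sources:
        for tweet in source:
            candidates.setdefault(tweet.tweet_id, tweet)

    # Stage 2 (ranking): order candidates by score, highest first.
    # `score` is a stand-in for the machine learning model.
    ranked = sorted(candidates.values(), key=score, reverse=True)

    # Stage 3 (filtering): drop tweets from blocked authors,
    # tweets the user has already seen, and NSFW tweets.
    return [
        t
        for t in ranked
        if t.author_id not in blocked_authors
        and t.tweet_id not in seen_ids
        and not t.nsfw
    ]


# Usage with made-up data: two sources that overlap on tweet 1.
in_network = [Tweet(1, author_id=10), Tweet(2, author_id=11, nsfw=True)]
out_of_network = [Tweet(3, author_id=12), Tweet(1, author_id=10)]
fake_scores = {1: 0.2, 2: 0.9, 3: 0.7}  # hypothetical model outputs

timeline = recommend(
    [in_network, out_of_network],
    score=lambda t: fake_scores[t.tweet_id],
    blocked_authors=set(),
    seen_ids=set(),
)
print([t.tweet_id for t in timeline])  # [3, 1]
```

Tweet 2 scores highest but is removed in the filtering stage because it is marked NSFW, which is why filtering is a separate step from ranking in this sketch.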

The post also goes into depth on each step of the process. For example, it notes that the first step looks at around 1,500 tweets, and that the goal is to make the For You timeline around 50 percent tweets from people you follow (called “In-Network”) and 50 percent tweets from “out-of-network” accounts that you don’t follow. It also says that the ranking is meant to “optimize for positive engagement (e.g. Likes, Retweets, and Replies),” and that the final step will try to make sure you’re not seeing too many tweets from the same person.
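The 50/50 In-Network and out-of-network target and the limit on repeated authors can also be sketched. This is an illustrative assumption, not Twitter’s method: `Candidate`, `mix_timeline` and the fixed `max_per_author` cap are hypothetical, and a simple alternation between two ranked pools stands in for however Twitter actually balances the mix. Twitter’s post says the first stage pulls about 1,500 candidates; the example uses a handful.

```python
from __future__ import annotations

from collections import Counter
from typing import NamedTuple


class Candidate(NamedTuple):
    # Hypothetical ranked candidate for illustration only.
    tweet_id: int
    author_id: int
    score: float


def mix_timeline(
    in_network: list[Candidate],
    out_of_network: list[Candidate],
    size: int,
    max_per_author: int = 2,
) -> list[Candidate]:
    """Alternate between two score-sorted pools to aim for a 50/50 split,
    allowing at most `max_per_author` tweets from any one author."""
    pools = [in_network, out_of_network]
    positions = [0, 0]  # next unread index in each pool
    per_author: Counter[int] = Counter()
    timeline: list[Candidate] = []
    turn = 0  # 0 = in-network, 1 = out-of-network

    while len(timeline) < size:
        picked = None
        # Try the pool whose turn it is first; fall back to the other pool
        # if the preferred one has no usable candidates left.
        for pool_index in (turn, 1 - turn):
            pool = pools[pool_index]
            while positions[pool_index] < len(pool):
                candidate = pool[positions[pool_index]]
                positions[pool_index] += 1
                # Skip (and discard) candidates whose author already hit the cap.
                if per_author[candidate.author_id] < max_per_author:
                    picked = candidate
                    break
            if picked is not None:
                break
        if picked is None:
            break  # both pools are exhausted
        per_author[picked.author_id] += 1
        timeline.append(picked)
        turn = 1 - turn

    return timeline


# Usage with made-up data: author 10 has three in-network candidates.
in_network = [
    Candidate(1, 10, 0.9),
    Candidate(2, 10, 0.8),
    Candidate(3, 10, 0.7),
    Candidate(4, 11, 0.6),
]
out_of_network = [Candidate(5, 20, 0.95), Candidate(6, 21, 0.5)]

timeline = mix_timeline(in_network, out_of_network, size=6)
print([c.tweet_id for c in timeline])  # [1, 5, 2, 6, 4]
```

Tweet 3 is dropped because author 10 already has two tweets in the timeline, and the loop stops at five tweets once both pools run out. A real system might down-weight repeated authors instead of applying a hard cap; the cap is used here only because it is easy to follow.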

Of course, the most detail will be available by picking through the code, which researchers are already doing.

CEO Elon Musk has been promising the move for a while. On March 24th, 2022, before he owned the site, he polled his followers about whether Twitter’s algorithm should be open-source, and around 83 percent of the responses said “yes.” In February he promised it would happen within a week, before pushing back the deadline to March 31st earlier this month.

Musk tweeted that Friday’s release was “most of the recommendation algorithm,” and said that the rest would be released in the future. He also said the hope is “that independent third parties should be able to determine, with reasonable accuracy, what will probably be shown to users.” In a Space discussing the algorithm’s release, he said the plan was to make it “the least gameable system on the internet,” and to make it as robust as Linux, perhaps the most famous and successful open-source project. “The overall goal is to maximize on unregretted user minutes,” he added.

Musk has been preparing his audience to be disappointed in the algorithm when they see it (which, of course, assumes that people will actually be able to understand the complex code). He’s said it’s “overly complex & not fully understood internally,” and that people will “discover many silly things,” but has promised to fix issues as they’re discovered. “Providing code transparency will be incredibly embarrassing at first, but it should lead to rapid improvement in recommendation quality,” he tweeted.

There’s a difference between code transparency, where users will be able to see the mechanisms that choose tweets for their timelines, and code being open-source, where the community can actually submit its own code for consideration and use the algorithm in other projects. While Musk has said it’ll be open source, Twitter will have to actually do the work if it wants to earn that label. That involves figuring out systems of governance that decide which pull requests to approve, which user-raised issues deserve attention, and how to stop bad actors from trying to sabotage the code for their own purposes.

Twitter says people can submit pull requests that may eventually end up in its codebase

The company does say it’s working on this: the readme for the GitHub repository says “we invite the community to submit GitHub issues and pull requests for suggestions on improving the recommendation algorithm.” It does, however, go on to say that Twitter’s still in the process of building “tools to manage these suggestions and sync changes to our internal repository.” But Musk’s Twitter has promised to do many things (like polling users before making major decisions) that it hasn’t stuck with, so the proof will be in whether it actually accepts any community code.

The decision to increase transparency around its recommendations isn’t happening in a vacuum. Musk has been openly critical of how Twitter’s previous management handled moderation and recommendation, and orchestrated a barrage of stories that he claimed would expose the platform’s “free speech suppression.” (Mostly, they just served to show how normal content moderation works.)

But now that he’s in charge, he’s faced a lot of backlash as well, from users annoyed about their For You pages shoving his tweets in their faces to his conservative boosters growing increasingly concerned about how little engagement they’re getting. He’s argued that negative and hate content is being “max deboosted” in the site’s new recommendation algorithms, a claim that outside analysts without access to the code have disputed.

Twitter is also potentially facing some competition from the open-source community. Mastodon, a decentralized social network, has been gaining traction in some circles, and Twitter co-founder Jack Dorsey is backing another similar project called Bluesky, which is built on top of an open-source protocol.


The smells and tastes of a great video game


As video games and movies become more immersive, it may start to become apparent which sensations are missing from the experience. Is there a point in Gran Turismo where you wish you could smell the burning rubber and engine exhaust? Would playing beer pong in Horizon Worlds feel incomplete unless you could taste the hops?

On this episode of The Vergecast, the latest in our miniseries about the five senses of video games, we’re tackling smell and taste in video games, and whether either could actually enhance the virtual experience for gamers. In other words: Smellovision is back for a new generation of media.

First, we try out a product (actually available to buy today) called the GameScent, an AI-powered scent machine that syncs with your gaming and movie-watching experience. The GameScent works by listening in on the sound design of the content you’re playing or watching and deploying GameScent-approved fragrances that accompany those sounds. We tried the GameScent with games like Mario Kart and Animal Crossing to see if this is really hinting at a scent-infused gaming future.

On the taste side, we speak to Nimesha Ranasinghe, an assistant professor at the University of Maine working on taste sensations and taste simulation in virtual reality experiences. Ranasinghe walks us through his research on sending electrical pulses to your tongue to manipulate different taste sensations like salty, sweet, sour, and bitter. He also talks about how his research led to experimental gadgets like a “virtual cocktail,” which would allow you to send curated tasting and drinking experiences through digital signals.

If you want to know more about the world of smelling and tasting digital content, here are some links to get you started:


7 things Google just announced that are worth keeping a close eye on

Google’s flagship developer conference, I/O, just wrapped up with interesting leaps in how the tech giant plans to change the world.

Here are the seven biggest things we learned from Google at I/O 2024.

Google’s injecting AI into nearly every aspect of its products and services

Google’s I/O 2024 conference (Google)

Google’s I/O event was largely an opportunity for the company to make its case to developers (and, to a lesser extent, consumers) that its artificial intelligence is ahead of rivals Microsoft and OpenAI. Google’s AI, named Gemini, was featured prominently at the conference and is now available to developers worldwide. Here’s a rundown of the seven highlights to keep an eye on.

CLICK TO GET KURT’S FREE CYBERGUY NEWSLETTER WITH SECURITY ALERTS, QUICK VIDEO TIPS, TECH REVIEWS AND EASY HOW-TO’S TO MAKE YOU SMARTER

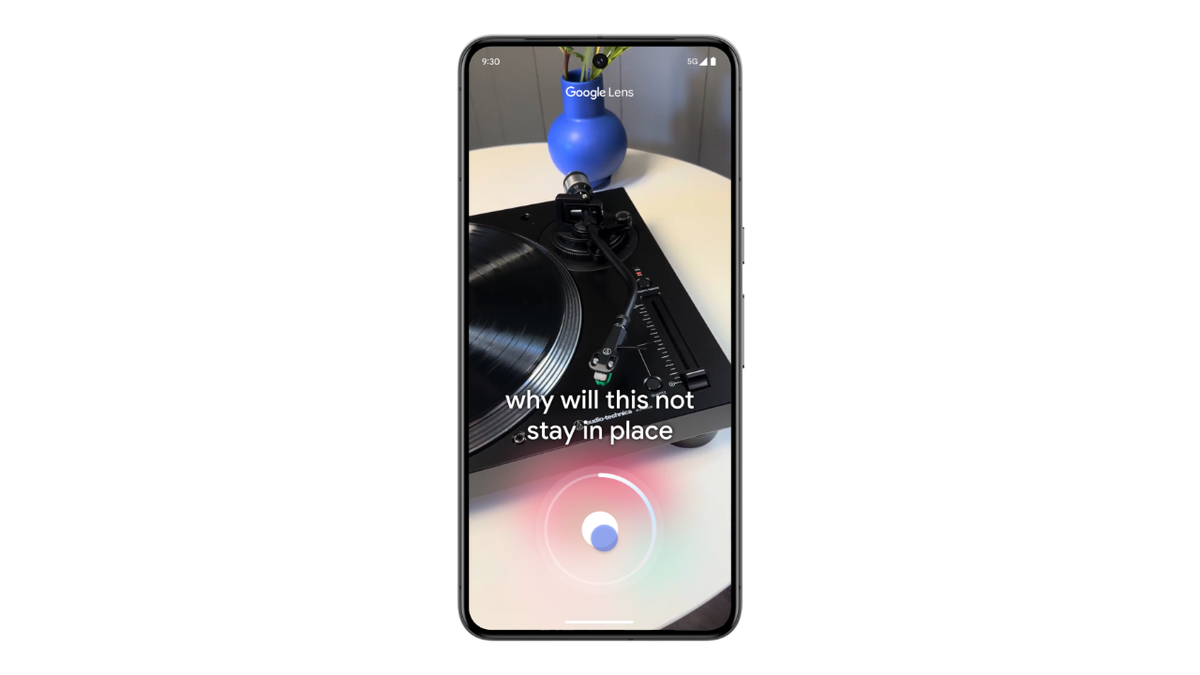

According to Google, Gemini can now pull information from text, photos, audio, web pages and live video from your phone’s camera, synthesize what it receives and answer questions you may have about it. Here’s what the Gemini improvements look like in practice.

1. Phone call scam detection coming to Android could compromise your privacy

Google showed a demo of its phone call scam detection feature, which the company says will come to a future version of Android. The way it works is both revolutionary and concerning: the feature will scan voice calls in real time as they happen, and it’s already drawing significant privacy concerns.

It would be like allowing your phone calls to be tapped and monitored by big tech instead of big brother. Apple had planned a similar feature on iOS back in 2021 but abandoned it after backlash from privacy advocates. Google is under similar pressure, with privacy advocates worried that the company notorious for harvesting and profiting from personal data might soon misuse AI voice scanning technology.


2. ‘Ask Photos’ will let AI help you find out about specific things in photos

The Ask Photos feature (Google)

Google unveiled a new feature called Ask Photos, which lets users ask Gemini to search their photos and deliver precise results. One example showcased was using Gemini to locate images of your car in your photo album by telling it your license plate number.


3. An AI button is coming to many of Google’s most popular productivity tools

Google has added a button, available now, to toggle Gemini AI in the side panel of several of its Google Workspace apps, including Gmail, Google Drive, Docs, Sheets and Slides. Similar to Microsoft’s Copilot, the Gemini button can help answer questions, draft emails and summarize documents and email threads.


4. AI tool called ‘Veo’ makes video from text

Music AI Sandbox (Google)


On a more experimental note, Google also unveiled VideoFX, a generative video tool based on Veo, Google DeepMind’s video generation model. VideoFX can create Full HD (1080p) videos from text prompts, and Google also showed improvements to ImageFX, its high-resolution AI image generator.

For musicians, Google also showed its new DJ Mode in MusicFX, an AI music generator that can be used to create loops and samples from prompts.

QUICK TIPS. EXPERT INSIGHTS. CLICK TO GET THE FREE CYBERGUY REPORT NEWSLETTER

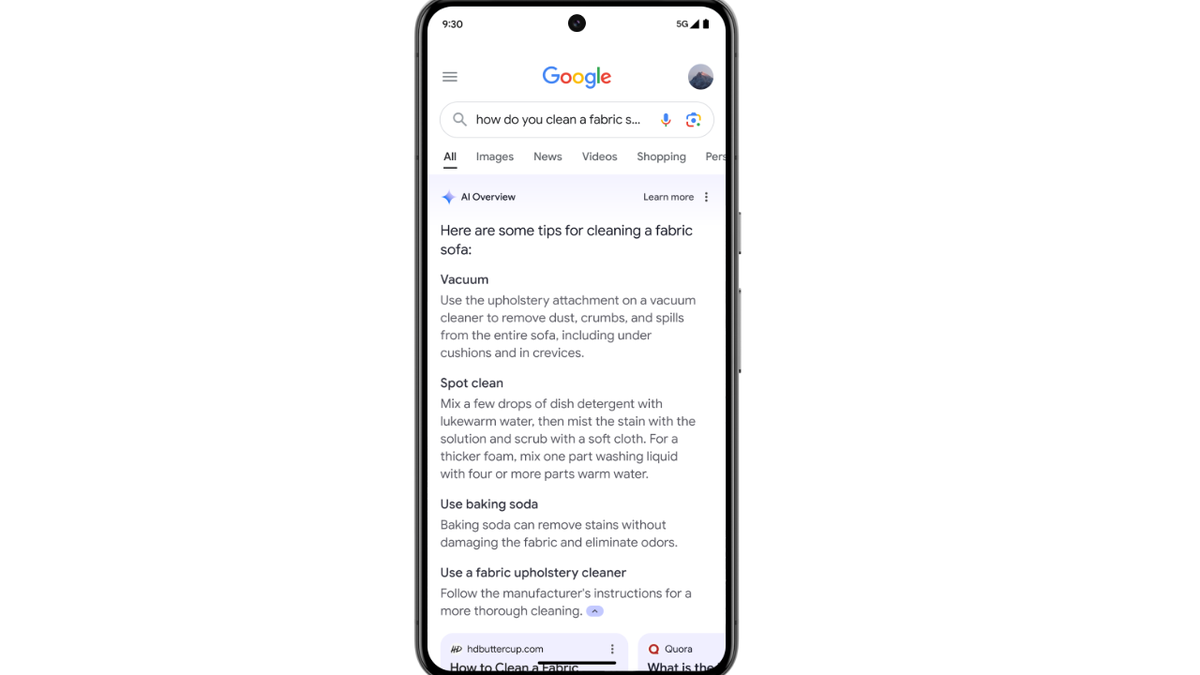

5. AI summaries will replace search results

A Google search (Google)

There’s been a lot of press lately about how difficult searching for things on Google has become. Constant changes in search engine optimization, along with a new wave of bots and AI-created content, have disrupted the once-monolithic search engine. Against that backdrop, Google showed off its new AI-organized search, which promises more readable search results.

Google also showed off how it is using AI to create overviews, short summaries that answer questions posed in the search box. These summaries will appear at the top of the search results page, so you don’t even need to visit another website to get the answers you’re looking for.

6. Google TV gets the AI treatment

Google has worked its Gemini AI into its Google TV smart TV operating system, allowing it to generate descriptions for movies and TV shows. When you are viewing content that is missing a description, Gemini will fill it in automatically. Gemini on Google TV will also translate descriptions into the viewer’s native language, making it easier to find international shows and movies to watch.

7. AI for educational purposes


Google also unveiled LearnLM, a new generative AI model designed for education. It is a collaboration between Google’s DeepMind AI research division and its Google Research lab. LearnLM is designed as a chatbot that tutors students on a range of subjects, from mathematics to English grammar.


Kurt’s key takeaways

If you missed Google I/O 2024, here’s the scoop: Google’s AI, Gemini, stole the show with its ability to integrate information from various media and answer your queries on the fly. Noteworthy features include call scam detection for Android, a photo search tool that can find your car using your license plate number and the integration of Gemini into Google’s Workspace suite for smarter document handling.

Plus, Google’s new AI-powered search promises more readable results, and Google TV now boasts AI-generated content descriptions. For creatives and learners, Google introduced VideoFX for AI-generated videos, MusicFX’s DJ Mode for music creation and LearnLM, an AI tutor for students. It’s clear that Google is betting big on AI to keep ahead of the competition.

Are there any concerns that you believe should be addressed as these technologies become more integrated into our personal and professional environments? Let us know by writing us at Cyberguy.com/Contact


Copyright 2024 CyberGuy.com. All rights reserved.


Replacing the OLED iPad Pro’s battery is easier than ever


Apple’s newest iPad Pro is remarkably rigid for how thin it is, and apparently also a step forward when it comes to repairability. iFixit shows during its teardown of the tablet that the iPad Pro’s 38.99Wh battery, which will inevitably wear down and need replacement, is actually easy to get to. It’s a change iFixit’s Shahram Mokhtari says during the video “could save hours in repair time” compared to past iPad Pro models.

Getting to it still requires removing the glued-in tandem OLED screen, which iFixit notes in the video and its accompanying blog isn’t two panels smashed together, but a single OLED board with more electroluminescence layers per OLED diode. With the screen out of the way, iFixit was able to pull the battery almost immediately (after removing the camera assembly and dealing with an aluminum lip beneath it, which made some of the tabs hard to get to). For previous models, Mokhtari notes, you have to pull out “every major component.”

After that, though, the thinness proves to be an issue for iFixit, as many of the parts are glued in, including the tablet’s logic board. In the blog, the site goes into more detail here, mentioning that the glue means removing the speakers destroys them, and the tablet’s daughter board is very easy to accidentally bend.

The site also found that the 256GB model uses only one NAND storage chip, meaning it’s technically slower than dual-chip storage. As some Verge readers may recall, that’s also the case for the M2 MacBook Air’s entry-level storage tier. But as we noted then (and as iFixit says in its blog), that’s not something people who aren’t pushing the device will notice, and those who are may want more storage regardless.

Apple’s new $129 Apple Pencil Pro is a different story when it comes to repairability, which shouldn’t shock anyone. Mokhtari was forced to cut into the pencil using an ultrasonic cutter, a moment he presented as “the world’s worst ASMR video.” (That happens just after the five-minute mark, in case you want to mute the video there to avoid the ear-piercing squeal of the tool.) Unlike with the iPad Pro itself, the Pencil Pro’s battery was the last thing he could get to.

By the time Mokhtari is done, the pencil is utterly destroyed, of course. He says the site will soon have a full chip ID, including images of the MEMS sensor that drives the pencil’s barrel roll feature, which lets you twist the pencil to adjust the rotation of on-screen art tools.
