This is Hot Pod, The Verge’s newsletter about podcasting and the audio industry. Sign up here for more.

Technology

Spotify’s podcast future isn’t very original


When Spotify announced yesterday that it would lay off 200 employees from its podcast unit and combine Gimlet and Parcast into a single operation, it came as a shock to outside observers. But former and current podcast employees at Spotify have seen the writing on the wall for some time.

“We definitely have expected for several months now that they’d be axing people since the vibe at Gimlet had been very much one of walking on eggshells for months now,” one former Gimlet employee who was a part of yesterday’s layoffs told Hot Pod. “Zero joy. [The layoffs] were more just a matter of when. The fact that it was yesterday, that was the surprise.”


It’s been more than a year since Spotify first eliminated its namesake podcast production unit. Last fall, Spotify laid off dozens of Gimlet and Parcast workers and pulled 11 original shows from production. It began this year by axing 600 jobs companywide (including a number of ad and business jobs under Podsights and Chartable). High-profile executives such as content chief Dawn Ostroff (who steered Spotify’s podcast operations) have left. Prominent names, including Barack and Michelle Obama’s Higher Ground Productions, Brené Brown, and Esther Perel, have exited deals with the platform. Jemele Hill hasn’t left yet but is weighing other options.

As Bloomberg reported last week, neither Parcast nor Gimlet had received annual budgets, so they hadn’t been able to greenlight new shows or approve travel expenses. It was only this week that we found out Spotify’s reasoning: Gimlet and Parcast will be combined to form a new Spotify Originals studio, which will produce shows like Stolen, The Journal, Science Vs, Heavyweight, Serial Killers, and Conspiracy Theories.

Another Gimlet employee who was laid off noted that production staff — producers, reporters, and engineers — seem to be most heavily hit by the job cuts.

Both nonfiction and fiction shows were impacted. Spotify spokesperson Grey Munford confirmed that Case 63, which dropped its first season last fall, will be continuing. The show is produced by Gimlet along with Julianne Moore and Oscar Isaac’s production companies, FortySixty and Mad Gene Media. Much of the Gimlet fiction team behind Case 63, the chart-topping fiction podcast starring Moore and Isaac, is now under Spotify’s head of development, Liz Gateley, according to Munford.

As for what Spotify wants the remaining chunk of Gimlet and Parcast to do, it appears to be along the lines of not getting in the way as the company embraces creators and third-party deals. The company spelled out clearly that the next phase of its podcast strategy is to focus on creators and users, including Spotify for Podcasters, the company’s ad and monetization platform.

“We know that creators have embraced the global audience on our platform but want improved discovery to help them grow their audience. We also know that they appreciate our tools and creator support programs but want more optionality and flexibility in terms of monetization. Fortunately, Spotify is not a company that ever sits still. Given these learnings and our leadership position, we recently embarked on the next phase of our podcast strategy, which is focused on delivering even more value for creators (and users!),” wrote Sahar Elhabashi, Spotify’s head of podcast business.


The merging of Gimlet and Parcast seems to be an unnatural one, according to a number of former employees. Parcast, which Spotify acquired in 2019, focuses largely on true-crime podcasts, with shows like Criminal Passion and Criminal Couples. Gimlet is known for its lineup of audio journalism series and interview podcasts, as well as scripted audio dramas. Gimlet won its first Pulitzer Prize in audio reporting earlier this year for Stolen: Surviving St. Michael’s by investigative journalist Connie Walker. A second season of Stolen has been greenlit.

“I’m not sure what folding Parcast and Gimlet together in one team means. They’re very different styles of production and development, so they need different kinds of support in terms of marketing, PR, development, and other skill sets,” said one former Gimlet employee, who left prior to this week’s layoffs.

Gimlet blew up largely due to its original shows, which helped define the podcast boom. Series like Reply All, The Nod, Heavyweight, and StartUp helped raise the bar for what audio storytelling could be, and both advertisers and investors lined up to get involved. But under the leadership of Spotify, both Gimlet and Parcast struggled to find direction. Reply All came to an inglorious end just over a year ago, and Gimlet under Spotify hasn’t produced another equivalent hit.

The blame lies at least partly with Spotify’s inability to fully understand what it was buying for a combined total of roughly $300 million. Unifying Parcast and Gimlet is a good example of that.

“Our shows and content are very different,” said one Gimlet worker who was laid off yesterday. “The fact that Spotify is merging them so clumsily only further illustrates that they never really understood or appreciated either of us fully.”

The hasty merger and axing of original programming echo similar tactics from the world of streaming video, such as Warner Bros. Discovery’s decision to combine HBO Max and Discovery Plus’ offerings into one streaming platform or Paramount’s decision to merge Showtime (which generates premium scripted series like Yellowjackets) with Paramount Plus, which is home to shows from CBS, BET, and TV Land — as well as live sports.

Such decisions reflect the reality of today’s cash-strapped streaming environment. Much like how Netflix would once go on buying sprees at Cannes and now makes reality shows like Too Hot to Handle, Spotify is moving away from pricey originals and embracing amateur podcasters and creator partnerships (not to mention its highest-value celebrity audio deals, such as that with Joe Rogan). In both cases, companies are trimming their original programming in favor of content that is cheaper to produce and generates more eyeballs and downloads.

Less prestigious content won’t make a difference to advertisers, says Max Willens, a senior analyst at Insider Intelligence. “I would say that advertisers will welcome this decision in the sense that it may give them more inventory to advertise against, possibly at a more attractive price. The longform, highly produced content that Gimlet made its name creating was costlier and took longer to produce, and often commanded premium ad prices, which advertisers sometimes chafed at.”

But for those who work in the audio industry, Spotify’s hasty exit from the world of podcasting and original audio journalism aligns with the behavior they’ve grown to expect from the tech company.

“[The individuals laid off] are some of the most talented, experienced producers in the entire industry,” said a Gimlet staffer who left prior to this week’s layoffs. “It’s disappointing that Spotify never understood that — and how to harness that creativity and experience.”

Audiobooks and podcasts may become a haven in the event of a SAG strike

SAG-AFTRA members overwhelmingly voted in support of a strike if the union doesn’t reach a deal with the studios, union leadership announced on Monday night. Although SAG-AFTRA is known traditionally as Hollywood’s actors’ union, its 160,000-strong membership includes DJs, news anchors, and voiceover artists, as well as podcast hosts and audiobook narrators.

The looming SAG-AFTRA strike is with the Alliance of Motion Picture and Television Producers (AMPTP) and would only impact contracts bargained with them. Productions covered by SAG’s TV and theatrical contracts would be considered off-limits.

“Only productions that are covered by the TV/Theatrical Codified Basic Agreement and Television Agreement would be struck [in the event of a strike]. Scripted dramatic live action entertainment production that is covered by the SAG-AFTRA TV/Theatrical Contracts would be considered struck work,” wrote SAG-AFTRA’s chief communications officer Pamela Greenwalt in an email.

In other words, most podcast and audiobook contracts under SAG-AFTRA would not be considered “struck” work. This is in contrast to the ongoing WGA strike, where writing on scripted, fiction podcasts covered by WGA isn’t kosher and striking members are not allowed to work on non-union projects.

“So while work by any member (celebrity or otherwise) under SAG-AFTRA’s Audiobook Contracts would NOT be covered by a TV/Theatrical strike, all members will honor that action in the areas of work that are impacted should a strike need to be called,” clarified Greenwalt.

This means that for performers looking to work during a Hollywood strike, the audio world may become their go-to destination. Celebrity audio dramas have certainly come into vogue lately, with the likes of Demi Moore, Chris Pine, Rami Malek, Matthew McConaughey, and others contributing their voice talents to fiction podcasts. Audible has showcased a number of celebrity-narrated audiobooks by Meryl Streep, Tom Hanks, Nicole Kidman, Thandiwe Newton, and others.

It’s still uncertain whether SAG-AFTRA will even call a strike. The union is scheduled to start contract negotiations with AMPTP on June 7th. In the event that they’re unable to reach a deal with the studios, SAG-AFTRA can then take steps to go on strike.


Tesla recalls all 3,878 Cybertrucks over faulty accelerator pedal


Tesla has issued a recall for effectively every Cybertruck it’s delivered to customers due to a fault that’s causing the vehicle’s accelerator pedals to get stuck.

The fault was caused by an “unapproved change” that introduced “lubricant (soap)” during the assembly of the accelerator pedals, which reduced the retention of the pad, the recall notice states. The truck’s brakes will still function if the accelerator pedal becomes trapped, though this obviously isn’t an ideal workaround.

The recall impacts “all Model Year (‘MY’) 2024 Cybertruck vehicles manufactured from November 13, 2023, to April 4, 2024,” with the fault estimated to be present in 100 percent of the total 3,878 vehicles. This is essentially every Cybertruck delivered to customers since its launch event last year.

A recall seemed to be inevitable after Cybertruck customers were reportedly notified earlier this week that their deliveries were being delayed, with at least one owner being informed by their vehicle dealership that the truck was being recalled over its accelerator pedal. The issue was also highlighted by another Cybertruck owner on TikTok, showing how the fault “held the accelerator down 100 percent, full throttle.”

The timeline reported in the NHTSA filing says that Tesla was first notified of the defective accelerator pedals on March 31st, followed by a second report on April 3rd. The company completed internal assessments to find the cause on April 12th before voluntarily issuing a recall. As of Monday this week, Tesla said it isn’t aware of any “collisions, injuries, or deaths” attributed to the pedal fault.

Tesla is notifying its stores and service centers of the issue “on or around” April 19th and has committed to replacing or reworking the pedals on recalled vehicles at no charge to Cybertruck owners. Any trucks produced from April 17th onward will also be equipped with a new accelerator pedal component and part number.

This is actually the second of Tesla’s many recalls to affect the Cybertruck, but it is the most significant. The company issued a recall for 2 million Tesla vehicles in the US back in February due to the font on the warning lights panel being too small to comply with safety standards, though this was resolved with a software update.

Tesla fans have taken issue with the word “recall” in the past when the company has proven adept at fixing its problems through over-the-air software updates. But they likely will have to admit that, in this case, the terminology applies.


How to zoom in and out on PC

Have you ever found yourself squinting at your computer screen to decipher tiny text or make out the details of an image? Well, you’re not alone.

Fortunately, there’s a nifty trick that can save your eyes: zooming in. It’s a simple yet effective way to enhance your browsing experience, whether you’re working, shopping or just surfing the web.

If you want to zoom in and out on browser text on a Mac, we’ve got those step-by-step instructions here.


A man on a PC (Kurt “CyberGuy” Knutsson)

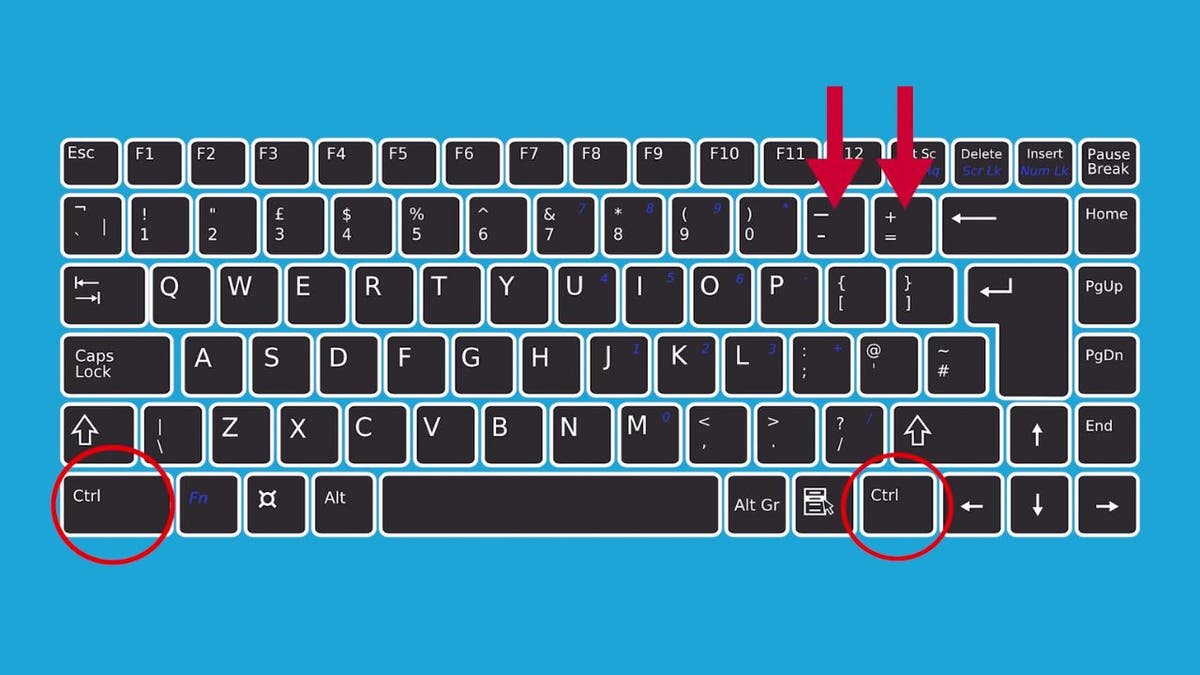

How to use the keyboard to zoom in and out on a PC

Let’s start with the basics. If you need a quick zoom in or out on your browser window on your PC, here’s how to do it.

- Just hold down one of the Control keys and press the Plus (+) or Minus (-) key to zoom in and out, respectively.


Using the keyboard to zoom in and out on PC (Kurt “CyberGuy” Knutsson)


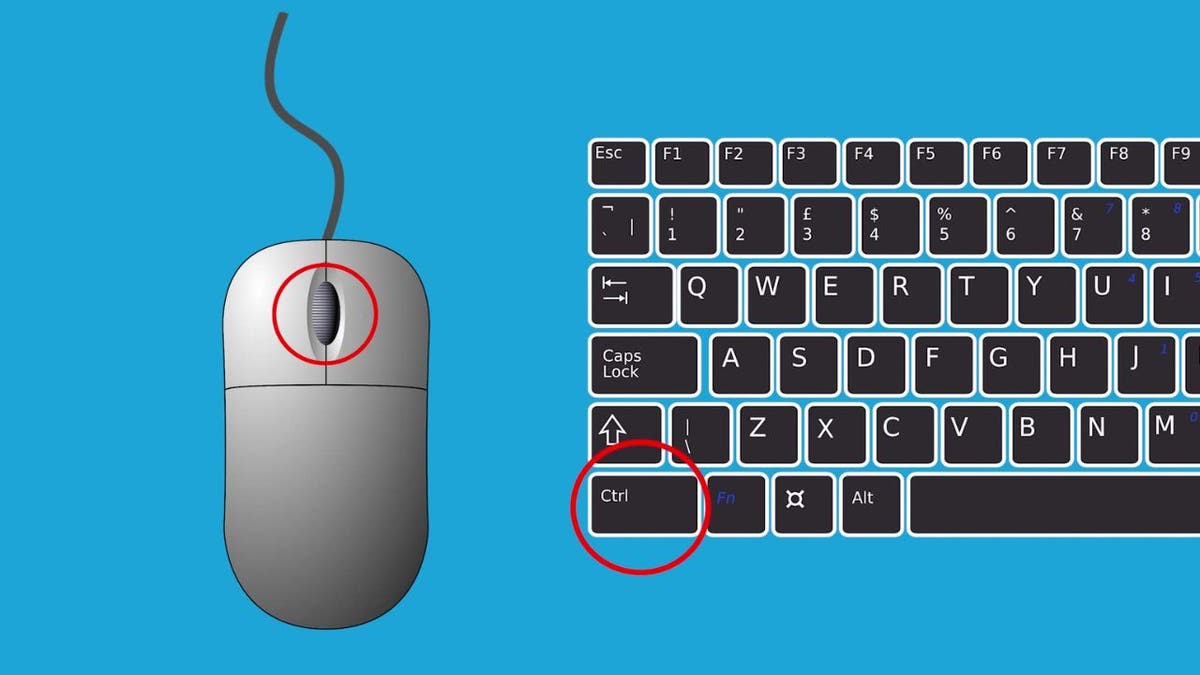

How to use your mouse wheel to zoom in and out on a PC

Prefer using your mouse to zoom in and out? No problem.

- Hold down Control again, but this time, use your mouse wheel.

- Scroll up to zoom in and down to zoom out. This method gives you the same control as the keyboard method, with a twist of your wrist.

Using the keyboard and the mouse to zoom in and out on PC (Kurt “CyberGuy” Knutsson)


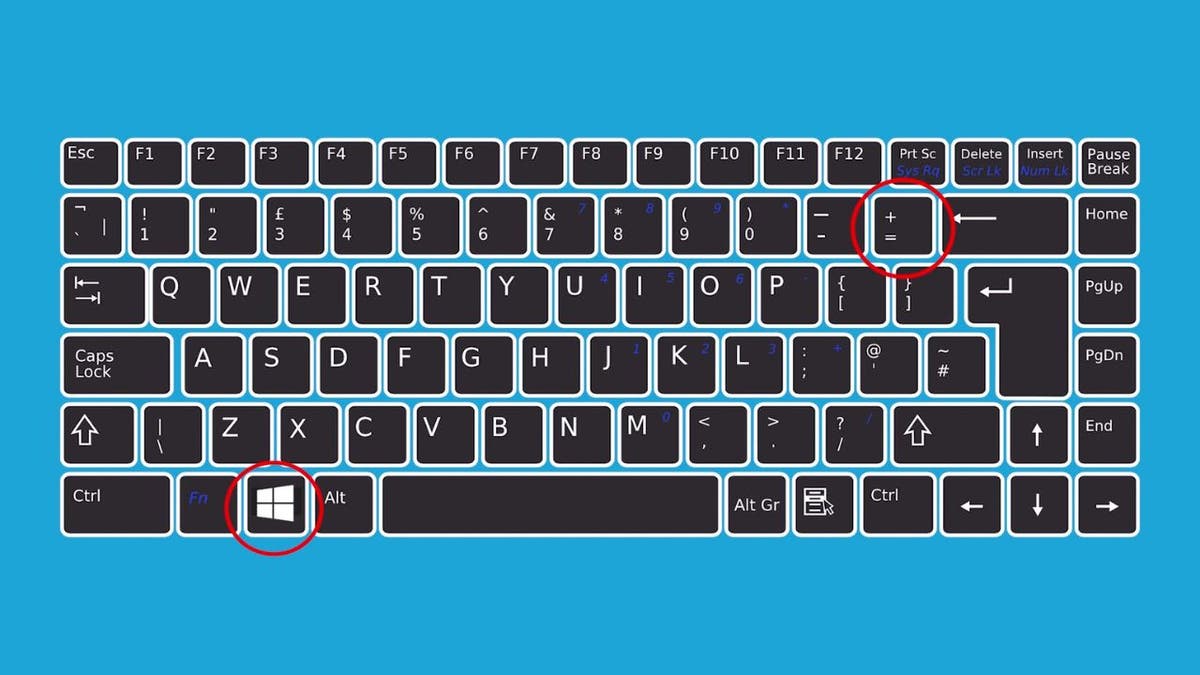

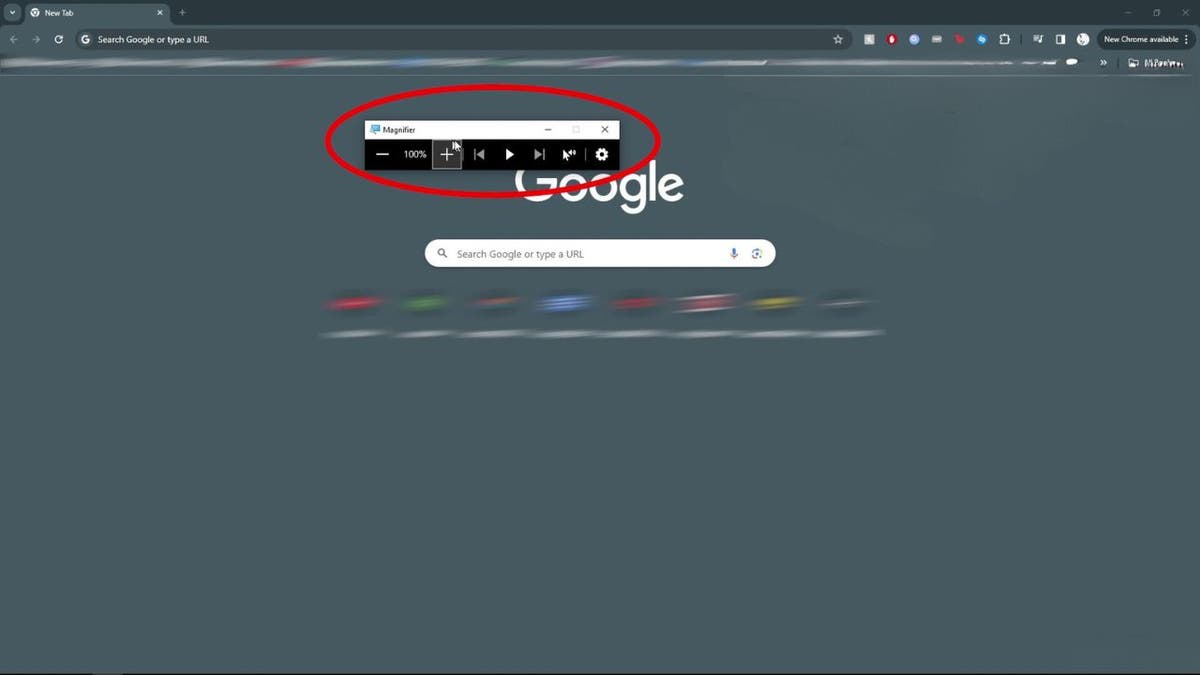

The Magnifier: Beyond the browser

Sometimes, you need to see more than just the browser text on your PC.

- For full-screen magnification, hold down the Windows key and press the Plus (+) key.

Using the Magnifier tool to zoom in and out on PC (Kurt “CyberGuy” Knutsson)

- This will open the Magnifier tool.

Using the Magnifier tool to zoom in and out on PC (Kurt “CyberGuy” Knutsson)

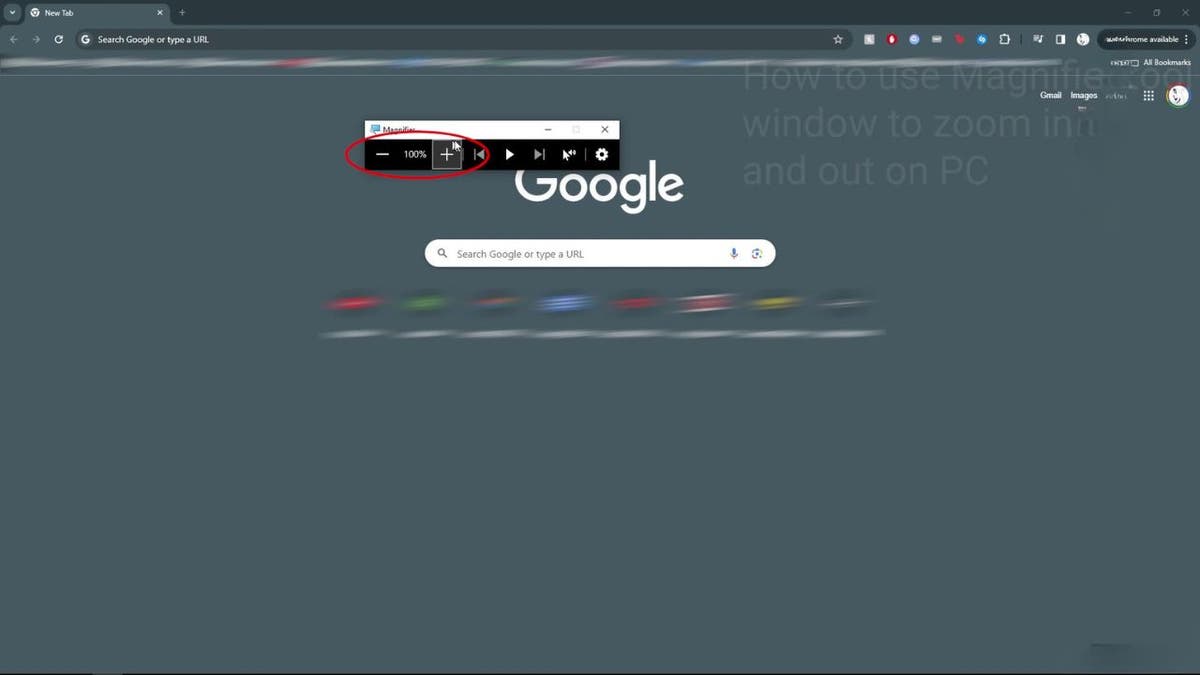

- The Magnifier Tool allows you to zoom in and out by pressing the Plus (+) or Minus (-).

Using the Magnifier tool to zoom in and out on PC (Kurt “CyberGuy” Knutsson)

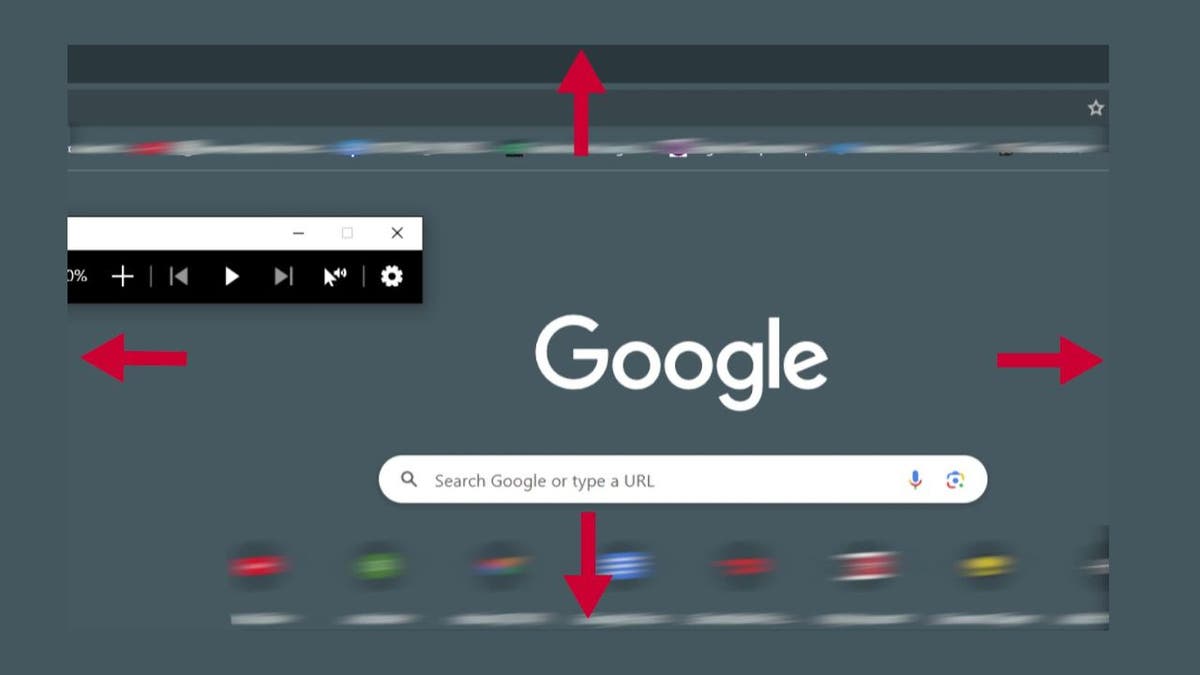

- You can also move your cursor to the edges of the screen to navigate around.

Using the Magnifier tool to zoom in and out on PC (Kurt “CyberGuy” Knutsson)

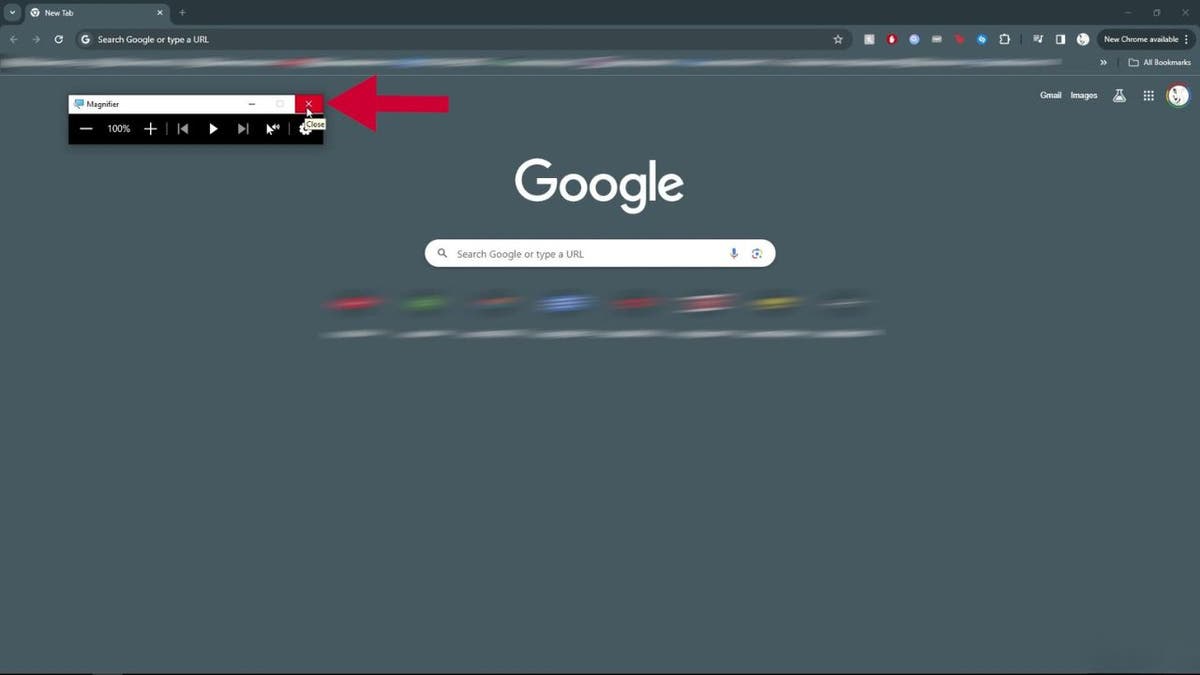

- When you’re done, simply click the X on the window to exit zoom mode.

Click the X to exit zoom mode (Kurt “CyberGuy” Knutsson)


Kurt’s key takeaways

Zooming in on your computer screen is more than just a trick; it’s a way to adapt technology to your needs. Whether it’s for accessibility or comfort, the ability to zoom in and out with ease ensures that everything you need to see is within view. So the next time you’re struggling to read that small print or want a closer look at a web page, remember these simple shortcuts.


How important is it for you to have control over visual elements like size, contrast and layout when using various devices, and how does this affect your choice of technology? Let us know by writing us at Cyberguy.com/Contact.


Copyright 2024 CyberGuy.com. All rights reserved.


Razer’s Kishi Ultra gaming controller brings haptics to your USB-C phone, PC, or tablet


Razer’s latest mobile gaming controller, the Kishi Ultra, was released today. It is an all-rounder that can switch between multiple devices. The controller has a built-in USB-C port that can work with the iPhone 15 series as well as most Android smartphones (Razer says it’s compatible with the Galaxy S23 series, Pixel 6 and up, the Razer Edge, and “many other Android devices”). It also seems to work perfectly fine with the Galaxy Z Fold 5 and other foldables. The controller can expand to fit your iPad Mini and any 8-inch Android tablet, and you can also tether it to your PC.

One interesting feature in the Kishi Ultra is the inclusion of Razer’s Sensa HD immersive haptics, which the company claims can take any audio, whether from a game, movie, or music, and convert it to haptics. We saw the same haptics in Razer’s Project Esther concept gaming chair that it unveiled at CES. The Kishi Ultra is the first commercially available Razer product to feature the Sensa haptics, so it’ll give the general public a chance to try them out. The Sensa haptics won’t support iOS; they currently only work with Android 12 or above and Windows 11. The controller is also outfitted with a small pair of Chroma RGB lights, right below the joysticks.

Note that you’ll need to download the Razer Nexus app (available for both iOS and Android) for the Kishi Ultra to work. The app can launch mobile games, and is integrated with Apple Arcade, Xbox Game Pass, and the Google Play Store.

Razer also announced a new version of its less expensive Kishi V2 with a USB-C port for iPhone 15 and Android, one which similarly supports wired play on PC and the iPad.

Both the Razer Kishi Ultra and Kishi V2 USB-C are available in stores or online now, and are priced at $150 and $99, respectively.
